Would you buy a server containing no storage? What about a server containing no memory? These are just a few of the questions that were asked at the Storage Networking Industry Association (SNIA) Persistent Memory Summit last week in San Jose, California. Nick Principe, Technical Marketing Engineer at iXsystems, attended this one-day event, which brought together people from all sides of the emerging persistent memory market.

Given the unrelenting growth of storage capacity shipped per quarter, it can be hard to buy into a vision of data centers without storage, or servers without local memory. However, with the serious productization and growing support for NVDIMM, it is becoming easier to believe in a vision where applications no longer worry about “storage” once they regard their memory as persistent. Today, there are already applications that fully support persistent memory, while many others are adding support in the near future for high-impact use cases.

A fundamental question in any discussion of persistent memory is: how does persistent memory differ from flash connected via NVMe or NVMe over fabrics? The major difference is that persistent memory is, fundamentally, byte-addressable. That did bring up a source of contention during one of the panel sessions, however. Must persistent memory only be addressed as memory in a byte-oriented fashion, or can a block-based storage layer be inserted on top of persistent memory to provide super-fast storage to legacy applications?

On one side, it is true that persistent memory only reaches its full potential when integrated into a memory-semantic data flow and usage pattern, but taking full advantage of this paradigm requires changes to applications and programming models. On the other side of the debate, the latency of NVDIMM-based and memory bus-attached persistent memory is so low that even shimming it into a block-based, storage-semantic data flow can deliver major performance improvements with far less transformational effort. The actual path forward will likely include both of these approaches, at least for the short to medium term.
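To make the two sides of that debate concrete, here is a minimal sketch of what each access style asks of an application. It is an illustration only: an ordinary temporary file stands in for persistent memory, `mmap` stands in for a direct memory mapping, and the 4096-byte `BLOCK_SIZE` and both function names are assumptions made for this example, not anything presented at the summit.

```python
import mmap
import os
import tempfile

BLOCK_SIZE = 4096  # assumed block size for the storage-semantic path


def update_byte_memory_semantic(path, offset, value):
    """Memory-semantic path: map the region and store one byte in place."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), 0) as region:
            region[offset] = value  # a single byte-granular store
            region.flush()          # stand-in for a persistence barrier
    return 1  # bytes the application had to handle


def update_byte_block_semantic(path, offset, value):
    """Storage-semantic path: read, modify, and rewrite the whole block."""
    block_start = (offset // BLOCK_SIZE) * BLOCK_SIZE
    with open(path, "r+b") as f:
        f.seek(block_start)
        block = bytearray(f.read(BLOCK_SIZE))
        block[offset - block_start] = value
        f.seek(block_start)
        f.write(block)
        f.flush()
        os.fsync(f.fileno())
    return len(block)  # bytes the application had to handle


def demo():
    """Update one byte each way and report how much data each path moved."""
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, bytes(2 * BLOCK_SIZE))  # two zero-filled blocks
        os.close(fd)
        touched_mem = update_byte_memory_semantic(path, 5000, 0xAB)
        touched_blk = update_byte_block_semantic(path, 5001, 0xCD)
        with open(path, "rb") as f:
            data = f.read()
        return touched_mem, touched_blk, data[5000], data[5001]
    finally:
        os.remove(path)
```

Both functions end with the same bytes on the medium. The difference is that the block path moves a full block to change one byte but leaves the application's read and write calls untouched, which is roughly the trade-off the panel was debating.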

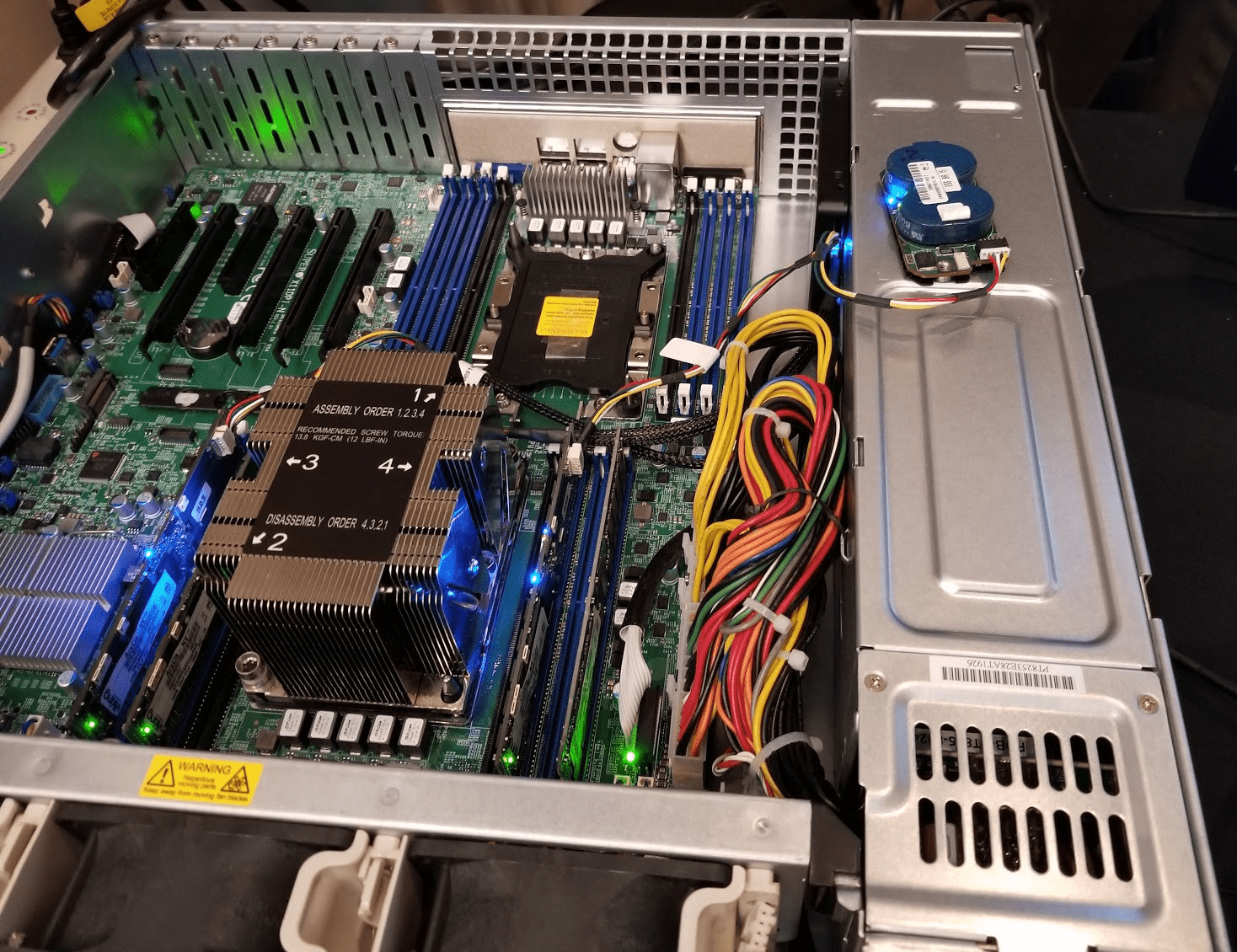

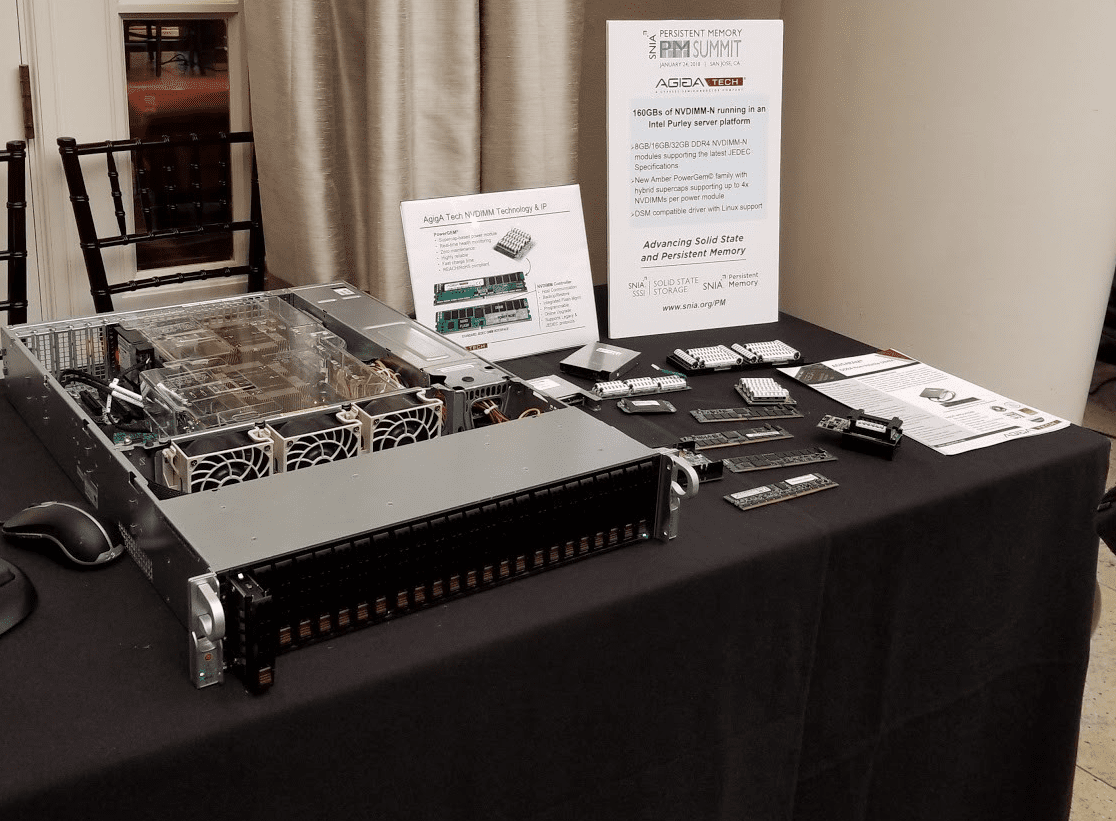

The only representation of persistent memory present in the vendor exhibition area was NVDIMM. Hardware and software support for this method seems to be approaching “reliable” rapidly, so expect to see enterprise vendors shipping products incorporating this technology soon. At iXsystems, we are actively researching how to best integrate NVDIMMs into our TrueNAS product line to accelerate performance beyond what flash memory is capable of achieving.

Currently, NVDIMMs are very effective at speeding up transactional workloads, such as databases, that are bound by redo log performance. By absorbing the requests that must be made persistent immediately, NVDIMMs can dramatically reduce per-request latency. Their contents can then be quickly flushed in the background to slower persistent storage, freeing up space in the persistent memory. Does this sound a lot like a SLOG in ZFS to you, too?
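The absorb-then-flush pattern described above can be sketched as a toy model. Everything here is hypothetical: the `AbsorbingLog` class, its `capacity` parameter, and the plain Python list and dict that stand in for NVDIMM and slower storage. It is not how ZFS or any database implements its log, and the flush happens inline rather than in the background to keep the example short.

```python
class AbsorbingLog:
    """Toy model of a small, fast persistent log in front of slower storage."""

    def __init__(self, capacity):
        self.capacity = capacity  # max records the fast tier can hold
        self.fast_log = []        # stands in for NVDIMM
        self.slow_store = {}      # stands in for flash or disk

    def write(self, key, value):
        """Acknowledge as soon as the record sits in the fast tier."""
        if len(self.fast_log) >= self.capacity:
            self.flush()          # a real system would flush in the background
        self.fast_log.append((key, value))
        return "ack"

    def flush(self):
        """Drain the log to slow storage in arrival order, freeing the fast tier."""
        drained = len(self.fast_log)
        for key, value in self.fast_log:
            self.slow_store[key] = value
        self.fast_log.clear()
        return drained

    def read(self, key):
        """Newest value wins: scan the log newest-first, then fall back."""
        for k, v in reversed(self.fast_log):
            if k == key:
                return v
        return self.slow_store.get(key)
```

The point of the model is that `write` returns once the record reaches the fast tier, so the latency the caller sees depends on that tier alone, while the slower store only has to keep up on average.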

While the currently prevalent persistent memory interconnect is the CPU’s parallel memory bus via DIMM slots, there are other possibilities. One is a serial memory bus, which would still operate through the CPU’s memory controller. This would allow for high bandwidth and a significant pin count reduction but requires support from the memory controller. Industry efforts around serial memory bus architectures include CCIX, OpenCAPI, and Gen-Z.

The adoption and proliferation of NVMe for low-latency flash storage make it an ideal peripheral interconnect for persistent memory as well, albeit with latencies of around one microsecond, which is higher than the memory bus. The NVMe interconnect has the advantages of being standardized, platform-independent, and hot-pluggable.

The efforts around NVMe over fabrics similarly lead directly into the persistent memory over fabrics discussion. A persistent memory fabric would be a requirement for eliminating the storage paradigm from applications forever, if that ever happens. The good news is that, using RDMA, latencies are still under two microseconds. Research suggests that about one microsecond could be saved if RNIC devices were embedded into CPUs on both sides of the persistent memory network.

These days, application support for persistent memory seems to be accelerating. Microsoft SQL Server has supported persistent memory since late 2016 and Hyper-V added support in fall 2017. VMware is working on the ability to map persistent memory devices into a virtualized SCSI device and is looking to leverage persistent memory for live VM migrations. FreeBSD support for persistent memory is still in the development phase, but libraries such as the Persistent Memory Development Kit (PMDK) support FreeBSD as a technical preview.

This is only a small sample of what was presented and discussed at SNIA’s Persistent Memory Summit 2018. One important thing to keep in mind is what users actually require. Two messages stood above all the others: users want their workflows to be faster yet more natural, and they want the storage industry to stick to clear standards.

For more information about SNIA and the Persistent Memory Summit 2018, and to download all the slides from the sessions, see the SNIA website for the event at: https://www.snia.org/pm-summit

Nick Principe, Technical Marketing Engineer