Nicolas_Studiokgb

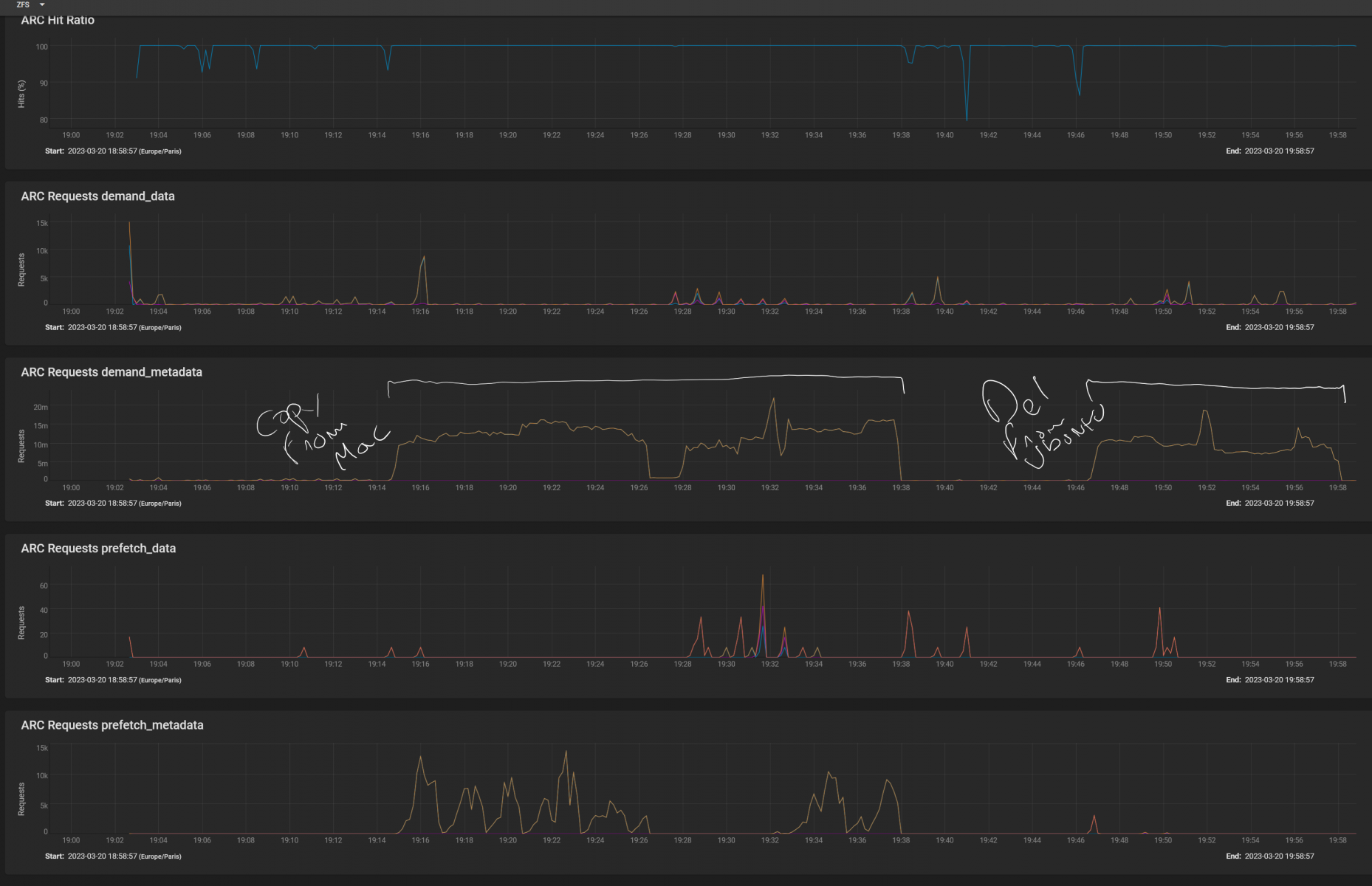

So, another test, still with my 670 GB, 55k-item set of audio files // TrueNAS v13u4

Clean, empty dataset (same settings as in the previous tests, without any aux param sync, atime disabled on the dataset)

Copy from the Mac: 24 minutes (as usual)

Delete from the Ubuntu 22.04.1 Files app: 11 minutes (1h02 from macOS, as a reminder)

So way faster (but still not as fast as between Macs: 4 minutes).
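To put those three delete timings on the same scale, here is a rough sketch that converts them into items per second. It assumes the set is exactly 55,000 items (I only said "55k") and that the work is spread evenly over the run, so treat the numbers as ballpark figures only.

```python
# Delete timings reported in this thread, for a set of roughly 55,000 items.
# ITEMS is an assumption: the post only says "55k".
ITEMS = 55_000


def items_per_second(items: int, minutes: float) -> float:
    """Average number of items handled per second over the whole run."""
    return items / (minutes * 60)


# Durations in minutes (1h02 = 62 minutes).
timings_min = {
    "delete from macOS Finder": 62,
    "delete from Ubuntu Files": 11,
    "delete between Macs": 4,
}

rates = {label: items_per_second(ITEMS, m) for label, m in timings_min.items()}

for label, rate in rates.items():
    print(f"{label}: {rate:.0f} items/s")
```

That works out to roughly 15 items/s from Finder, 83 items/s from Ubuntu and 229 items/s between Macs.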

And smbd on the server never goes above 30% on the cores (instead of 100% when deleting from the macOS Finder)

ARC Req demand_metadata stays mostly under 10M (instead of 65M when deleting from the macOS Finder)

My question is: does Ubuntu take care of all the alternate streams / metadata / resource forks / or whatever else the Finder copies along with the files? Would that explain such a difference?

So what is the solution?
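One way to partly check this would be to look at what the Finder actually left on the dataset. The sketch below is only an assumption about where the extra metadata might end up: it counts AppleDouble sidecar files (names starting with `._`) and `.DS_Store` files under a directory. If the share stores streams as extended attributes instead (for example with Samba's `streams_xattr`), nothing will show up as separate files and this check will not see it. The function name `count_sidecars` is made up for this example.

```python
import os


def count_sidecars(root: str) -> dict:
    """Walk `root` and count Finder-related sidecar files versus regular files.

    Only file names are inspected; metadata stored as extended attributes
    is not detected by this check.
    """
    counts = {"appledouble": 0, "ds_store": 0, "regular": 0}
    for _dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name == ".DS_Store":
                counts["ds_store"] += 1
            elif name.startswith("._"):
                # AppleDouble sidecar holding a resource fork / Finder info
                counts["appledouble"] += 1
            else:
                counts["regular"] += 1
    return counts
```

Run on the server against the dataset path (something like `count_sidecars("/mnt/pool/dataset")`, where the path is a placeholder), a large `appledouble` count would mean the Finder copy roughly doubled the number of files to delete.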
