junior466

Explorer

- Joined: Mar 26, 2018
- Messages: 79

Greetings!

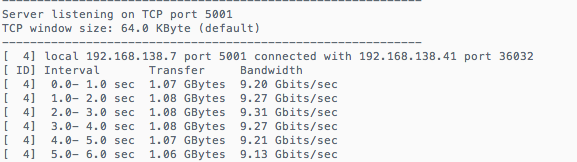

I've done a ton of reading on this subject, and I think my bottleneck is my drives/pool, but I wanted to see if you all agree. I have a FreeNAS host directly connected (DAC) to an ESXi 6.7 host, with a handful of virtual machines connecting to FreeNAS over SMB and NFS. I've noticed that I can't get more than 200MB/s when copying files over NFS or SMB. iPerf, as usual, shows no issues with the connections from the virtual machines to FreeNAS: I always see close to 10Gb/s.
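To put the copy speed and the link speed in the same units, here is a minimal Python sketch. It assumes decimal units (1 MB = 10^6 bytes, 1 Gb = 10^9 bits) and ignores TCP, SMB, and NFS protocol overhead, so the link figure is the nominal line rate, not achievable file-copy throughput.

```python
def mb_per_s_to_gbit_per_s(mb_per_s: float) -> float:
    """Convert decimal megabytes per second to decimal gigabits per second."""
    return mb_per_s * 8 / 1000


observed_mb_s = 200  # copy speed observed over SMB/NFS
link_gbit_s = 10     # nominal 10GbE line rate (protocol overhead ignored)

observed_gbit_s = mb_per_s_to_gbit_per_s(observed_mb_s)
link_mb_s = link_gbit_s * 1000 / 8  # line rate expressed in MB/s

print(f"{observed_mb_s} MB/s = {observed_gbit_s:.1f} Gb/s")
print(f"10GbE line rate = {link_mb_s:.0f} MB/s")
print(f"utilization = {observed_gbit_s / link_gbit_s:.0%}")
```

In other words, the file copies are using roughly a sixth of what iPerf shows the link can carry.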

FreeNAS host:

FreeNAS-11.1-U7

Intel(R) Xeon(R) CPU E5-2403 0 @ 1.80GHz

16GB RAM

Mirror pool with four 2TB Seagate drives

The 10Gb NICs are Dell Broadcom 57810S dual-port 10GbE SFP+ cards with the latest drivers installed. I have tried enabling jumbo frames, with no luck. The ESXi host is running RAID10 with 15K RPM SAS drives.
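A rough way to check whether the pool itself could be the limit is to estimate its idealized sequential throughput. The sketch below is a back-of-envelope model, not a benchmark. It ASSUMES the four disks are laid out as two 2-way mirror vdevs (the post only says "mirror pool"), and `per_disk_mb_s` is a HYPOTHETICAL per-disk sequential figure to be replaced with the real drive's spec or a measured value. It also uses the common rule of thumb that mirror writes scale with the number of vdevs, while reads can at best scale with the number of disks.

```python
from dataclasses import dataclass


@dataclass
class MirrorPool:
    vdevs: int            # number of mirror vdevs (ASSUMED: 2 for four disks)
    disks_per_vdev: int   # disks in each mirror (ASSUMED: 2-way mirrors)
    per_disk_mb_s: float  # HYPOTHETICAL sustained sequential MB/s per disk

    def est_write_mb_s(self) -> float:
        # Each block is written to every disk in its mirror, so write
        # bandwidth scales with the number of vdevs, not the number of disks.
        return self.vdevs * self.per_disk_mb_s

    def est_read_mb_s(self) -> float:
        # Idealized upper bound: any disk in a mirror can serve a read.
        return self.vdevs * self.disks_per_vdev * self.per_disk_mb_s


# Hypothetical per-disk figure; substitute the real drive's number.
pool = MirrorPool(vdevs=2, disks_per_vdev=2, per_disk_mb_s=150)
print(f"est. sequential write: {pool.est_write_mb_s():.0f} MB/s")
print(f"est. sequential read (upper bound): {pool.est_read_mb_s():.0f} MB/s")
```

Both estimates are upper bounds; caching in RAM, sync-write behavior, file sizes, and fragmentation can move real numbers well away from them in either direction.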

Are my drives on the FreeNAS host the issue? I will gladly provide more information if needed.

Thank you.
