I just upgraded my home infrastructure to 10Gb and am seeing sub-par SMB transfer speeds between my desktop PC running Windows 10 and my FreeNAS server. When I run iperf tests between the two I get ~9.4 Gbits/sec, but when I copy a file from an SMB share to an NVMe PCIe drive on my PC I only get about 215-350 MB/s.
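To put the two numbers on the same scale, here is a quick conversion. It assumes decimal units throughout (1 Gbit = 10^9 bits, 1 MB = 10^6 bytes); Windows Explorer may display binary megabytes, which would shift the figures by a few percent.

```python
def gbit_to_mbyte(gbit_per_s: float) -> float:
    """Convert a rate in Gbit/s (10**9 bits) to MB/s (10**6 bytes)."""
    return gbit_per_s * 1e9 / 8 / 1e6


iperf_mbs = gbit_to_mbyte(9.4)  # ~1175 MB/s measured by iperf
for smb_mbs in (215, 350):      # range seen during the SMB copy
    print(f"{smb_mbs} MB/s is {smb_mbs / iperf_mbs:.0%} of the ~{iperf_mbs:.0f} MB/s iperf rate")
```

So the SMB copy reaches roughly 18-30% of what the network delivered in iperf.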


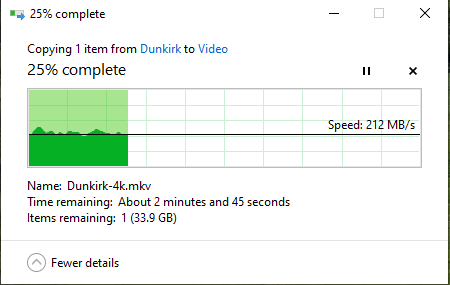

But when I copy a large file from an SMB share to my PC, I see the following (for this transfer I let it run until it was about 30% complete):

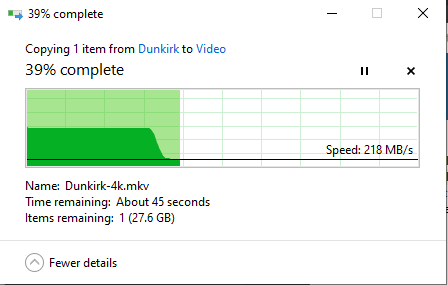

When I copy the same file a second time:

You'll notice that the first 30% of the transfer runs at 10Gb/s and then drops to about 218 MB/s for the rest of the file. If I let the copy finish and copy the same file a third time, the entire file transfers at 10Gb/s. Based on the iperf results, the network is capable of 10Gb/s, so I'm baffled by the SMB performance. Why would copying the file a second time be faster? Is the file being cached somewhere on the FreeNAS side so it doesn't have to be read from disk? Does anyone have suggestions for getting better SMB transfer speeds?
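One way to separate disk speed from SMB copy behaviour is to time a plain sequential read of the same file twice in a row. FreeNAS is built on ZFS, which keeps recently read data in RAM (the ARC), so a much faster second pass would be consistent with the file being served from cache rather than from the disks. This is a minimal sketch: the script name and mapped-drive path in the usage comment are hypothetical, and the Windows client can also cache file data, so a fast second pass is suggestive rather than conclusive.

```python
import sys
import time

CHUNK_SIZE = 8 * 1024 * 1024  # read in 8 MiB chunks


def read_throughput(path: str, chunk_size: int = CHUNK_SIZE) -> float:
    """Read a file sequentially from start to end and return the rate in MB/s (10**6 bytes)."""
    total = 0
    start = time.perf_counter()
    # buffering=0 skips Python's own buffer; the OS and SMB client may still cache.
    with open(path, "rb", buffering=0) as f:
        while chunk := f.read(chunk_size):
            total += len(chunk)
    elapsed = time.perf_counter() - start
    return total / 1e6 / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python readtest.py Z:\path\to\bigfile
    for attempt in (1, 2):
        print(f"pass {attempt}: {read_throughput(sys.argv[1]):.0f} MB/s")
```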

INFRASTRUCTURE DETAILS

FreeNAS server specs are in my signature. The 10Gb card in the server is an iXsystems FreeNAS Mini XL+ 10Gb Dual-Port Upgrade SFP+ card; these are private-labeled Chelsio T520-SO-CR cards. The card is plugged into a PCIe 3.0 x8 slot on the motherboard. The 12 drives are split evenly into two RAIDZ2 vdevs of six drives each.
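A rough sanity check on the server hardware: with 12 drives in two RAIDZ2 vdevs, each 6-drive vdev has 4 data drives, and a PCIe 3.0 x8 slot has far more bandwidth than a dual-port 10GbE card needs. The per-drive streaming rate (`PER_DISK_MBS`) is a hypothetical value since the drive model isn't listed here, and data disks × per-disk rate is an idealized ceiling, not a prediction of real RAIDZ throughput.

```python
def raidz_data_disks(total_disks: int, vdevs: int, parity: int) -> int:
    """Data (non-parity) disks across equally sized RAIDZ vdevs."""
    per_vdev = total_disks // vdevs
    return vdevs * (per_vdev - parity)


def pcie3_gbytes_per_s(lanes: int) -> float:
    """PCIe 3.0 bandwidth per direction after 128b/130b encoding (8 GT/s per lane)."""
    return lanes * 8e9 * (128 / 130) / 8 / 1e9


PER_DISK_MBS = 150  # ASSUMPTION: hypothetical sequential rate per HDD; not stated in the post

data_disks = raidz_data_disks(12, vdevs=2, parity=2)  # 2 x 6-disk RAIDZ2
print(f"data disks: {data_disks}")
print(f"idealized streaming ceiling: {data_disks * PER_DISK_MBS} MB/s")
print(f"PCIe 3.0 x8: {pcie3_gbytes_per_s(8):.2f} GB/s vs 2 x 10GbE = 2.50 GB/s")
```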

PC specs: AMD Ryzen 9 3950X CPU, 32 GB RAM, and 2x M.2 PCIe Gen 4 NVMe SSDs. The 10Gb network card is an Intel X550-T2.

Both the FreeNAS server and the PC are connected to a Ubiquiti Networks US-16-XG switch. Jumbo frames are enabled on all the switches, and the jumbo packet size is set to 9014 on both the PC and the server.
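For reference, the theoretical TCP goodput on a 10Gb link depends on the MTU. This sketch assumes 20-byte IPv4 and TCP headers with no options, and 38 bytes of per-frame Ethernet overhead on the wire (header, FCS, preamble and inter-frame gap). It also assumes the Intel driver's "Jumbo Packet" value of 9014 includes the 14-byte Ethernet header, i.e. corresponds to an IP MTU of 9000.

```python
# Per-frame overhead on the wire: Ethernet header (14) + FCS (4) + preamble/SFD (8) + inter-frame gap (12)
ETH_WIRE_OVERHEAD = 14 + 4 + 8 + 12
# ASSUMPTION: 20-byte IPv4 header + 20-byte TCP header, no options (e.g. no TCP timestamps)
IPV4_TCP_HEADERS = 20 + 20


def tcp_goodput_gbps(mtu: int, line_rate_gbps: float = 10.0) -> float:
    """Theoretical TCP payload rate when every frame is full size at the given IP MTU."""
    payload = mtu - IPV4_TCP_HEADERS
    on_wire = mtu + ETH_WIRE_OVERHEAD
    return line_rate_gbps * payload / on_wire


def windows_jumbo_to_mtu(jumbo_packet: int) -> int:
    """ASSUMPTION: the Intel driver's 'Jumbo Packet' value includes the 14-byte Ethernet header."""
    return jumbo_packet - 14


for mtu in (1500, windows_jumbo_to_mtu(9014)):
    print(f"MTU {mtu}: ~{tcp_goodput_gbps(mtu):.2f} Gbit/s")
```

This works out to roughly 9.49 Gbit/s at MTU 1500 and 9.91 Gbit/s at MTU 9000. The measured 9.42 Gbit/s is below even the standard-MTU figure. That is not conclusive on its own (TCP options and host overhead also cost throughput), but it may be worth confirming that jumbo frames actually pass end to end, for example with `ping -f -l 8972 x.x.x.x` from the PC (8972 = 9000 minus the 20-byte IP and 8-byte ICMP headers). On FreeBSD the interface MTU is normally given without the Ethernet header, so the matching server setting would be 9000 rather than 9014.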

I've tried several tuning options on the PC and server side and nothing seems to have any effect on the SMB performance.

My SMB auxiliary parameters are:

ea support = no

store dos attributes = no

map archive = no

map hidden = no

map readonly = no

map system = no

strict sync = no

I have not added any system tunables.

iperf client on PC results

```
iperf.exe -c x.x.x.x -P 2 -t 30 -r -e
------------------------------------------------------------
Server listening on TCP port 5001 with pid 9864
TCP window size: 208 KByte (default)
------------------------------------------------------------
------------------------------------------------------------
Client connecting to x.x.x.x, TCP port 5001 with pid 9864
TCP window size: 208 KByte (default)
------------------------------------------------------------
[ 5] local x.x.x.x port 51301 connected with x.x.x.x port 5001
[ 4] local x.x.x.x port 51300 connected with x.x.x.x port 5001
[ ID] Interval Transfer Bandwidth Write/Err
[ 5] 0.00-30.00 sec 16.6 GBytes 4.75 Gbits/sec 1/0
[ 4] 0.00-30.00 sec 16.3 GBytes 4.67 Gbits/sec 1/0
[SUM] 0.00-30.00 sec 32.9 GBytes 9.42 Gbits/sec 2/0
```

iperf on server results

```
iperf -s -e
------------------------------------------------------------
Server listening on TCP port 5001 with pid 35021
Read buffer size: 128 KByte
TCP window size: 64.0 KByte (default)
------------------------------------------------------------
[ 4] local x.x.x.x port 5001 connected with x.x.x.x port 51300
[ 5] local x.x.x.x port 5001 connected with x.x.x.x port 51301
[ ID] Interval Transfer Bandwidth Reads Dist(bin=16.0K)
[ 4] 0.00-30.01 sec 16.3 GBytes 4.67 Gbits/sec 1022122 417223:536483:62233:2831:2926:199:192:35
[ 5] 0.00-30.01 sec 16.6 GBytes 4.75 Gbits/sec 1009944 395970:529811:78238:2604:2948:202:134:37
[SUM] 0.00-30.01 sec 32.9 GBytes 9.42 Gbits/sec 2032066 813193:1066294:140471:5435:5874:401:326:72
```
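The per-stream and summed figures in the client and server reports line up, and they are consistent with iperf printing transfer sizes in binary units (1 GByte = 2^30 bytes) while printing rates in decimal bits. The sketch below parses report lines copied from the iperf output and recomputes each rate from transfer size and interval under that assumption; the regular expression only covers lines shaped like these.

```python
import re

# Matches iperf 2 report lines such as "[SUM] 0.00-30.00 sec 32.9 GBytes 9.42 Gbits/sec 2/0"
REPORT = re.compile(
    r"\[\s*(?P<id>\w+)\]\s+(?P<start>[\d.]+)-(?P<end>[\d.]+) sec\s+"
    r"(?P<gbytes>[\d.]+) GBytes\s+(?P<gbits>[\d.]+) Gbits/sec"
)


def check_report(line: str) -> tuple[float, float]:
    """Return (reported Gbit/s, Gbit/s recomputed from transfer size and interval)."""
    m = REPORT.search(line)
    if m is None:
        raise ValueError(f"not an iperf report line: {line!r}")
    seconds = float(m["end"]) - float(m["start"])
    # ASSUMPTION: GBytes are binary (2**30 bytes) while Gbits/sec are decimal (10**9 bits).
    recomputed = float(m["gbytes"]) * 2**30 * 8 / seconds / 1e9
    return float(m["gbits"]), recomputed


for line in (
    "[ 5] 0.00-30.00 sec 16.6 GBytes 4.75 Gbits/sec 1/0",
    "[ 4] 0.00-30.00 sec 16.3 GBytes 4.67 Gbits/sec 1/0",
    "[SUM] 0.00-30.00 sec 32.9 GBytes 9.42 Gbits/sec 2/0",
):
    reported, recomputed = check_report(line)
    print(f"reported {reported:.2f}, recomputed {recomputed:.2f} Gbit/s")
```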
