Hello to the community; this is my first post and it's regarding my proposed NAS build.

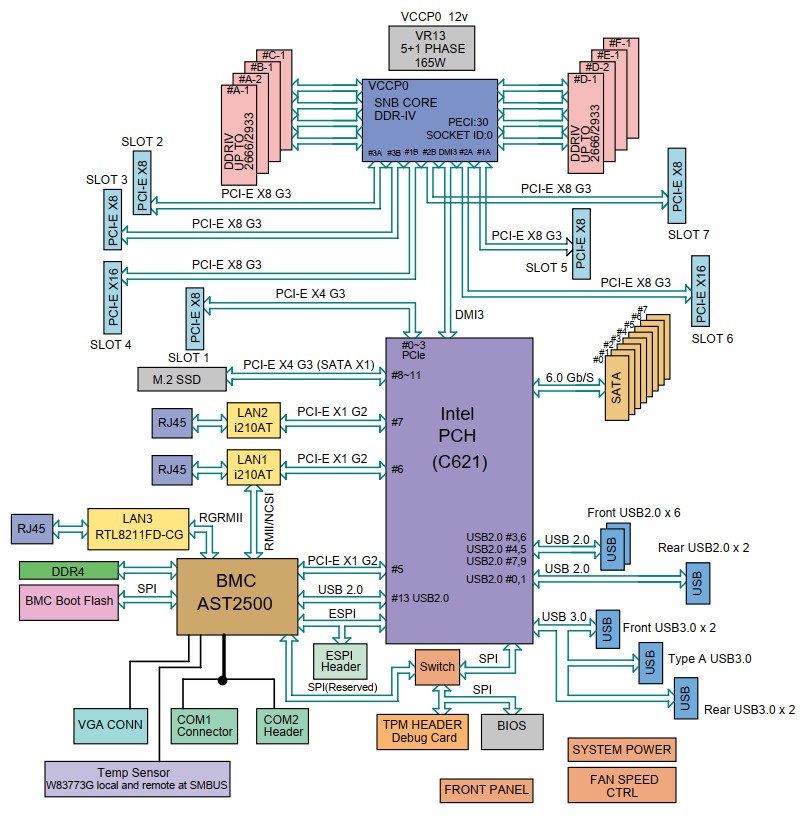

I've read all the pertinent info I can find in the TrueNAS documentation and on this forum, but would just like confirmation that my understanding is correct regarding the use of the onboard SATA ports (8 of them) that are connected to the C621 PCH.

My planned system is going to be used first and foremost as a NAS, as I've outgrown the 8TB capacity of my current QNAP TS-451-4G. But I also want to run some container apps (Home Assistant, Jellyfin) and Blue Iris in a Win10 VM. My proposed system consists of:

Supermicro X11SPL-F mobo (to be bought new from Newegg)

Intel Xeon 2nd Gen Scalable Gold 6230 (purchased used; 125W TDP)

6x 32GB RDIMM per SM memory compatibility list (purchased "open box" from A-Tech on eBay)

GPU: TBD (possibly Intel Arc A380 for Jellyfin transcoding)

Antec 900 case (used full tower case with 9 external front bays @ 5.25"; purchased locally via Craigslist)

2x IstarUSA BPN-DE340HD 4-drive/3-bay Trayless Hot Swap 3.5" drive cages (new from Newegg)

TBD: 1x IstarUSA BPN-DE230HD 3-drive/2-bay Trayless Hot Swap 3.5" drive cages (new from Newegg)

Noctua NH-U12S DX-3647 dual-fan CPU cooler (fits in my case; its flow direction is upwards toward the Antec 200mm ceiling fan) (new)

Seasonic Prime TX-850 PSU

LSI 9207-8i 6Gbps SAS PCIe 3.0 HBA P20 IT Mode (sold and flashed by Art of Server on eBay, used)

Hard drives: probably 4 to 6 WD HC530 WUH721414ALE6L4 (14TB, factory recertified, $144 ea) or WD HC550 WUH721818ALN604 (18TB, new, $240 ea)

[I'm looking at ServerPartDeals for these drives.]

Finally I'm considering a PCIe AOC in order to add two fast SAS drives for VM and Docker images (mirrored pair).

So my first question is straightforward: any reason to avoid using the 8 onboard SATA ports? I know I will need to set them to AHCI in the BIOS.

The only other reason I'm considering adding the LSI 9207-8i is to accommodate more drives. I have seven existing hard drives I could use: 4 @ 4TB SATA and 3 @ 2TB SAS (7200RPM Seagate Constellation ES ST2000NM0021, 6 Gbps). However, these 7 drives are all 10 years old; the SATA drives have been in continuous use in my QNAP NAS, while the SAS drives were used for 7 years in my HP workstation, then shelved until now. So naturally I'm thinking it's perhaps false economy to install these old drives, as their 22TB of combined raw capacity could be replaced by about 1.5 of the newer WD HC530 or HC550 drives, thus saving a bunch of power consumption, at a cost of an extra $200-300.
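To sanity-check that "about 1.5 drives, $200-300" estimate, here is a rough sketch of the arithmetic using only the capacities and prices quoted in this post. It compares raw capacity only and ignores redundancy, ZFS overhead, and power costs; the function name `replacement_cost` is just something I made up for illustration.

```python
# Old drives from this post: 4 x 4TB SATA and 3 x 2TB SAS.
old_drives_tb = [4, 4, 4, 4, 2, 2, 2]
old_total_tb = sum(old_drives_tb)  # raw capacity, no redundancy considered

# Candidate replacement drives with the prices quoted in this post.
candidates = {
    "HC530 14TB": {"tb": 14, "usd": 144},
    "HC550 18TB": {"tb": 18, "usd": 240},
}


def replacement_cost(old_tb, drive_tb, drive_usd):
    """Return (fractional drive count, prorated cost) needed to match old_tb."""
    count = old_tb / drive_tb
    return count, count * drive_usd


for name, drive in candidates.items():
    count, cost = replacement_cost(old_total_tb, drive["tb"], drive["usd"])
    print(f"{name}: {count:.2f} drives, about ${cost:.0f} to match {old_total_tb} TB raw")
```

Since drives can't be bought in fractions, the prorated figure is only a way of comparing cost per terabyte, not an actual purchase price.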

Oh and I also have 4 SATA SSDs, also 10 years old but only used 7 of those years: 2 @ Samsung 840 Pro 256GB and 2 @ Samsung 850 Pro 256GB. And I have the nice Icy Dock adapter cage that mounts all 4 of them in a single 5.25" drive bay, with hot-swap access. The two BPN-DE340HD cages will occupy 6 of my 9 front bays, this Icy Dock 2.5" cage will occupy 1 bay, and that leaves 2 front bays that could accept a BPN-DE230HD cage to hold three more 3.5" hard drives.

I could use the SATA SSDs as striped mirrors or in RAIDZ2 to get roughly 500GB of space for the VMs and Docker images, but that wouldn't be as fast as adding a pair of NVMe SSDs. I guess the wise course of action would be to check the SMART status of these older SSDs first, to determine how much life they have left. I have very little experience checking SMART data on SSDs, so I will have to learn how to do that. [Maybe Samsung provides an app for this?]
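From what I've gathered so far, one way to do the SMART check would be with `smartctl` from smartmontools, which can emit JSON via `-j`. Below is a minimal sketch of how I might script it, not a tested procedure. The attribute IDs in `WEAR_ATTRS` are my assumption about what the Samsung 840 Pro / 850 Pro report and should be checked against the real output; the helper names are hypothetical.

```python
import json
import subprocess

# Assumption: these are the wear-related attribute IDs Samsung SATA SSDs
# typically expose. Verify against the actual smartctl output per drive.
WEAR_ATTRS = {
    5: "Reallocated_Sector_Ct",
    9: "Power_On_Hours",
    177: "Wear_Leveling_Count",
    241: "Total_LBAs_Written",
}


def read_smart_json(device):
    """Run `smartctl -a -j <device>` and return the parsed JSON report."""
    # smartctl's exit status is a bitmask, so a non-zero code is not
    # necessarily fatal; parse whatever JSON was printed.
    proc = subprocess.run(
        ["smartctl", "-a", "-j", device],
        capture_output=True, text=True, check=False,
    )
    return json.loads(proc.stdout)


def summarize_wear(report):
    """Extract the wear-related attributes from a smartctl JSON report."""
    table = report.get("ata_smart_attributes", {}).get("table", [])
    summary = {}
    for attr in table:
        if attr.get("id") in WEAR_ATTRS:
            summary[attr["name"]] = {
                "normalized": attr.get("value"),
                "raw": attr.get("raw", {}).get("value"),
            }
    return summary
```

Usage would be something like `summarize_wear(read_smart_json("/dev/sda"))`, run as root, where `/dev/sda` is only a placeholder device path. As I understand it, the normalized value for the wear attribute starts high and counts down as the flash wears, so a low number there would be the warning sign.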

If I want to add NVMe SSDs, I'm looking into PCIe adapters. The slots on this mobo are nearly all x8, so a two-drive adapter (x4 per drive) makes sense. And the X11SPL-F supports bifurcation in the BIOS setup.

Note that 6 of the PCIe slots on this board are x8 slots connected directly to the CPU, not via the PCH. The one x4 slot is attached to the PCH, and the PCH has only the DMI 3.0 link to the CPU at, I believe, about 4 GB/s of bandwidth.
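For what it's worth, here is the back-of-the-envelope calculation behind that figure, as a small sketch. It assumes DMI 3.0 behaves like a PCIe 3.0 x4 link (8 GT/s per lane with 128b/130b encoding) and ignores protocol overhead, so real-world throughput would be somewhat lower. The function name is my own.

```python
def pcie3_bandwidth_gbytes(lanes):
    """Approximate one-direction PCIe 3.0 throughput in GB/s for a lane count."""
    gt_per_s = 8.0        # PCIe 3.0 signalling rate per lane, in GT/s
    encoding = 128 / 130  # 128b/130b line encoding efficiency
    return lanes * gt_per_s * encoding / 8  # divide by 8: bits -> bytes


dmi3 = pcie3_bandwidth_gbytes(4)       # assumption: DMI 3.0 ~ PCIe 3.0 x4
x8_slot = pcie3_bandwidth_gbytes(8)    # one CPU-attached x8 slot
per_drive = pcie3_bandwidth_gbytes(4)  # each NVMe drive after x4/x4 bifurcation

print(f"DMI 3.0 link:        {dmi3:.2f} GB/s")       # 3.94 GB/s
print(f"x8 slot:             {x8_slot:.2f} GB/s")    # 7.88 GB/s
print(f"per bifurcated drive: {per_drive:.2f} GB/s") # 3.94 GB/s
```

So a single NVMe drive on a CPU-attached slot gets about as much bandwidth to itself as everything behind the PCH (the onboard SATA ports and the x4 slot) shares over DMI, which is why I'd rather put the NVMe adapter in one of the x8 slots.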

The Supermicro compatibility list shows two potential solutions: AOC-SLG3-2M2 to accept a pair of m.2 SSDs; and AOC-SLG3-2E4T to accept a pair of OCuLink cables. Then the OCuLink cables connect to a pair of U.2 drives. I'm leaning the latter direction because I'm seeing enterprise-grade SSDs such as the Intel D7-P5500 in U.2 format from ServerPartDeals in 1.92TB and 3.84TB capacities for not a lot more than consumer-grade m.2 SSDs.

I see that the P5500 is apparently now made by Solidigm, but the Intel product brief states, "Power-Loss imminent (PLI) protection scheme with built-in self-testing, guards against data loss if system power is suddenly lost. Coupled with end-to-end data path protection scheme, PLI features also enable ease of deployment into resilient data centers where data corruption from system-level glitches is not tolerated."

I'm not sure whether that means it has real PLP or something weaker. Does anyone know for sure?

So my second question is what is a robust way to add NVMe SSD storage: do these Intel P5500s make sense, requiring the U.2 interface adapters? Or is there an equivalent m.2 device that can slot into the AOC-SLG3-2M2 adapter? Am I making a good decision to avoid the very-low-cost adapters one can find on Amazon or Newegg to plug either m.2 or U.2 SSDs into a PCIe slot? And am I wise to avoid consumer-grade m.2 NVMe SSDs, such as Samsung 9x0 Pro or Crucial P3/P5?

Sorry for such a long-winded first post. Maybe I'm asking questions for which there is no single "right" answer.