kirkdickinson (Contributor, joined Jun 29, 2015, 174 messages)

I jumped into FreeNAS in January of 2016. I built a box for my office to store databases, documents, movies, etc. It has been happily purring along since then with no problems at all. I have done the point updates, but am still on 9.10 Stable. It is working fine, and if it ain't broke...

However, I am running out of space. I have six 4TB WD Reds in RAIDZ2 and right now the pool is at 84% full, which gives me a warning. I would also like to back up some other things to it that I currently can't.

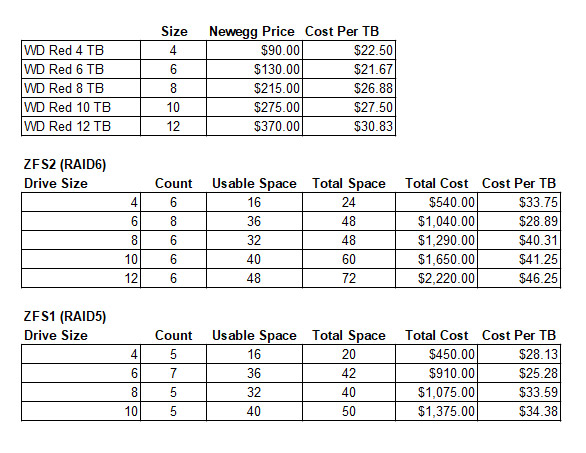

I keep thinking the best option is just to order six 8TB WD Reds and double my capacity. I may have room for eight drives, and if I ordered eight 6TB drives they would be cheaper and have more usable capacity in RAIDZ2, but that would require a complete rebuild of the array.
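For anyone who wants to check the numbers, here is a rough sketch of the capacity arithmetic in Python. It assumes the pool is a single RAIDZ2 vdev, that usable space is simply (drives - 2) x drive size, and it ignores ZFS overhead and the TB vs. TiB difference, so real figures will come out somewhat lower. The function name `raidz2_usable_tb` is just something made up for this example.

```python
def raidz2_usable_tb(drives: int, drive_tb: float) -> float:
    """Approximate usable capacity of one RAIDZ2 vdev, in TB.

    Assumption: two drives' worth of space goes to parity and
    everything else is usable (no allowance for ZFS overhead).
    """
    if drives <= 2:
        raise ValueError("RAIDZ2 needs more than two drives")
    return (drives - 2) * drive_tb


# Current pool: six 4TB drives, 84% full.
current_tb = raidz2_usable_tb(6, 4)
used_tb = 0.84 * current_tb

# The two upgrade options being considered.
options = {
    "6 x 8TB": raidz2_usable_tb(6, 8),
    "8 x 6TB": raidz2_usable_tb(8, 6),
}

for label, usable_tb in options.items():
    fill = used_tb / usable_tb
    print(f"{label}: {usable_tb:.0f} TB usable, {fill:.0%} full with current data")
```

Under those assumptions the six 8TB drives give about 32 TB usable and the eight 6TB drives about 36 TB, compared with about 16 TB today.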

Just trying to figure out the best way forward. Here is a spreadsheet calculation that I put together.

Thanks,

Kirk
