Fab Sidoli

Contributor

- Joined

- May 15, 2019

- Messages

- 114

Dear All,

It seems as though my snapshot retention policy is being ignored. Before I open a bug report, I would like to make sure I'm not being silly.

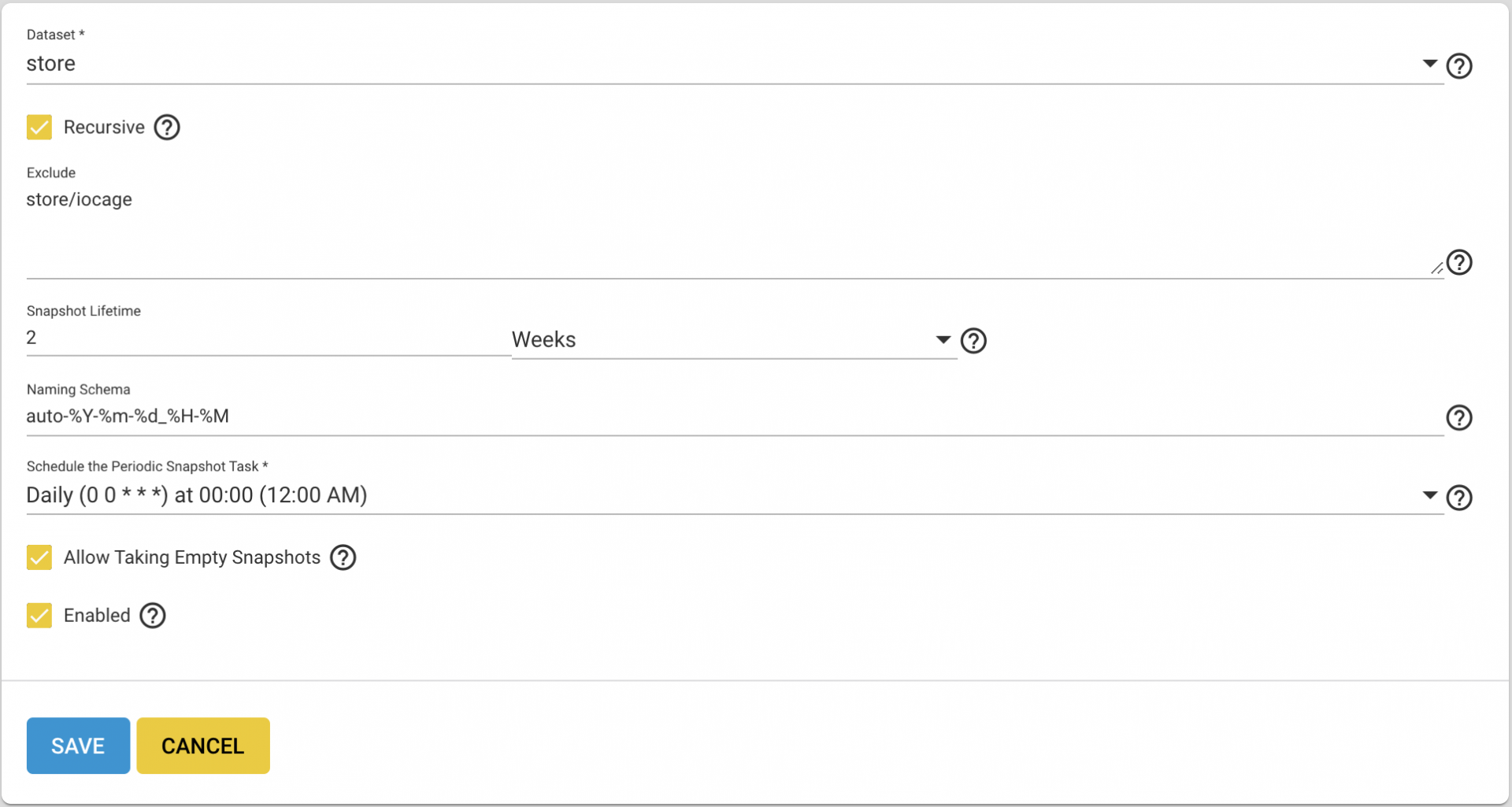

Under Tasks -> Periodic Snapshot Tasks I have set a snapshot lifetime of two weeks. As it stands, I have snapshots going back 19 days (i.e., five days beyond the two-week lifetime). The snapshots were set up as part of a replication task, and I wonder if this is somehow causing the issue.

I'm not sure which command-line tools I can run to show you how this is configured, but I have attached a screenshot in case that helps.

Thanks,

Fab
