Chris Moore

Hall of Famer

- Joined: May 2, 2015
- Messages: 10,080

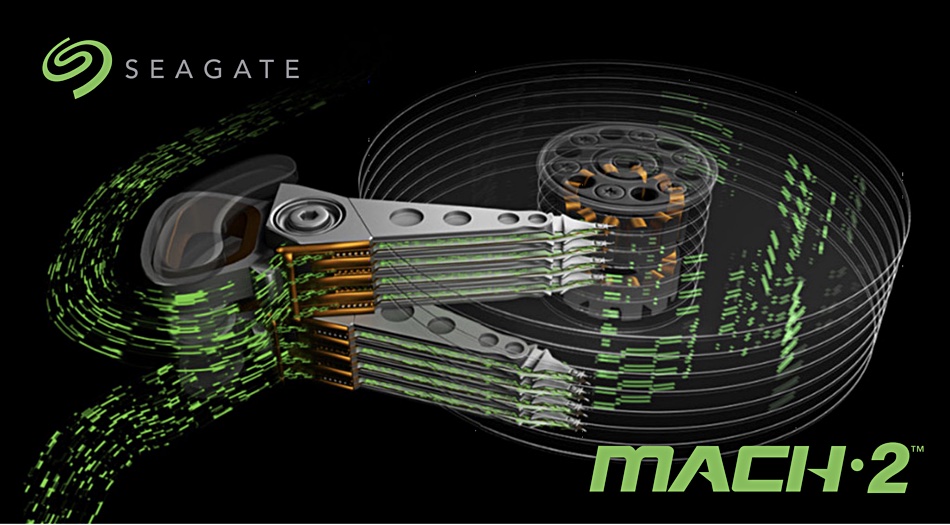

I recently got to look at a Seagate presentation about a new drive model they are releasing.

Here is a link: https://www.seagate.com/innovation/multi-actuator-hard-drives/

They say each drive basically shows up to the operating system as two drives that can operate fully independently, which nearly doubles the data rate of the drive. They said some of their industry partners have already been testing them. Has anyone seen them in the wild?

They said they will send me 10 sample units for testing, but the drives are supposed to be available on the market now.
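
Once sample units are in hand, one rough way to check the "nearly doubles" claim would be to read from both halves of a drive at once and compare that against reading from one half alone. Below is a minimal Python sketch, assuming each actuator really does show up as its own block device. The helper names (`read_up_to`, `aggregate_read_rate`) are made up for illustration, and the device paths in the usage note are placeholders, not names taken from Seagate's material.

```python
import threading
import time


def read_up_to(path, max_bytes, chunk_size=1 << 20):
    """Sequentially read at most max_bytes from a file or block device."""
    total = 0
    # buffering=0 skips Python-level buffering; the OS page cache still applies.
    with open(path, "rb", buffering=0) as f:
        while total < max_bytes:
            chunk = f.read(min(chunk_size, max_bytes - total))
            if not chunk:  # end of file or device
                break
            total += len(chunk)
    return total


def aggregate_read_rate(paths, max_bytes, chunk_size=1 << 20):
    """Read every path concurrently (one thread each).

    Returns (total_bytes_read, bytes_per_second) across all paths.
    """
    totals = [0] * len(paths)

    def worker(index, path):
        totals[index] = read_up_to(path, max_bytes, chunk_size)

    threads = [
        threading.Thread(target=worker, args=(i, p)) for i, p in enumerate(paths)
    ]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    elapsed = time.perf_counter() - start

    total = sum(totals)
    rate = total / elapsed if elapsed > 0 else float("inf")
    return total, rate
```

Usage would look something like `aggregate_read_rate(["/dev/HALF_A"], 8 << 30)` for one half, then `aggregate_read_rate(["/dev/HALF_A", "/dev/HALF_B"], 8 << 30)` for both, where `/dev/HALF_A` and `/dev/HALF_B` stand in for whatever names the two halves get on your system. If the independence claim holds, the second rate should come out close to twice the first. Reads that hit the page cache will inflate the numbers, so read more data than fits in RAM or test after a fresh boot.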

Thoughts?
