I replicated my entire array to my new array from the CLI. All I needed beforehand was a recursive snapshot of the pool's top-level dataset; then I ran the following:

    zfs send -vvR source@last_snapshot | zfs receive -F -d destination
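The snapshot step and the send can be wrapped together in one small helper. This is a minimal sketch. It assumes the pools are named `source` and `destination` and the snapshot `last_snapshot`, as in the command above. The function name `replicate_full` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: full recursive replication of one pool into another.
# Usage: replicate_full <source_pool> <snapshot_name> <destination_pool>
replicate_full() {
    src=$1 snap=$2 dst=$3
    # Recursive snapshot: every descendant dataset also gets @<snap>.
    zfs snapshot -r "$src@$snap" || return 1
    # send -R : replication stream (all descendants, their snapshots and properties)
    # send -vv: verbose progress report, written to stderr
    # receive -F: force the receive, rolling back / overwriting the destination
    # receive -d: drop the source pool name, so source/dataset1 lands at
    #             destination/dataset1
    zfs send -vvR "$src@$snap" | zfs receive -F -d "$dst"
}
```

For example, `replicate_full source last_snapshot destination` takes the recursive snapshot and then runs the command above.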

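According to Oracle's documentation, recursive replication comes in two main flavors:

These are the -r and -R options. I'm not entirely clear on the difference. What I do know is that -R generates a replication stream package: it replicates the named dataset and all of its descendants. When received, it preserves their properties, snapshots, descendant file systems and clones, so the destination keeps the structure of the source.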

I was able to transfer more than 5 TB of data in about 6 to 7 hours.
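For scale, here is the average rate that figure implies. This assumes decimal units (1 TB = 10^12 bytes) and exactly 5 TB. Because more than 5 TB was moved, both values are lower bounds:

```latex
\frac{5\times10^{12}\,\text{B}}{7\times3600\,\text{s}} \approx 198\,\text{MB/s}
\qquad
\frac{5\times10^{12}\,\text{B}}{6\times3600\,\text{s}} \approx 231\,\text{MB/s}
```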

The -vv option prints each dataset as it is processed, along with its size. The last step of the replication process updates all the datasets to make them active on the system. Until then, they do not expose their contents.
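The verbose report is easier to review afterwards if it goes to a file. It can be redirected separately from the data because `zfs send` writes the stream itself to stdout and the progress report to stderr. This is a sketch; the function name `replicate_logged` and the log path are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: same replication, but the verbose report of
# "zfs send" (stderr) is appended to a log file, while stdout still
# carries the stream into "zfs receive".
# Usage: replicate_logged <source_pool@snapshot> <destination_pool> <logfile>
replicate_logged() {
    zfs send -vvR "$1" 2>>"$3" | zfs receive -F -d "$2"
}
```

For example: `replicate_logged source@last_snapshot destination /root/replication.log`.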

I had to restart FreeNAS; otherwise, not all of the datasets were listed.
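From the shell, you can check whether the received datasets are mounted and mount any that are not. This might avoid the restart, although the FreeNAS web interface may still need one before it lists them. This is a sketch that assumes the destination pool name is passed in; `check_mounts` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: list every dataset under the pool with its mount
# state, then mount any ZFS file systems that are not mounted yet.
# Usage: check_mounts <destination_pool>
check_mounts() {
    # -H: no header; columns: name, mounted (yes/no), mountpoint
    zfs list -r -H -o name,mounted,mountpoint "$1"
    # mount all ZFS file systems that are currently unmounted
    zfs mount -a
}
```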

Once it was done, I was able to confirm that all the snapshots were present on both the source and the destination.

All dataset permissions also appear to have been carried over to the destination datasets.

I did the replication over PuTTY, logged in as root. Along the way, I created some shell scripts and output files to help check each replication step. However, these files were not visible once I logged out of root, and the dataset I had been working in looked empty.

From the FreeNAS web interface, I then reassigned the dataset to my user name, which made all of its content available again.
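The same ownership change can also be made from the shell. This is a sketch of a CLI counterpart to the web-interface step, not what was done here. It assumes FreeNAS's usual /mnt/<pool>/<dataset> mount location. The function name and the user/group values are hypothetical, and the command must run as root.

```shell
#!/bin/sh
# Hypothetical helper: give a dataset's files back to a regular user after
# creating them as root (run as root).
# Usage: give_to_user <mountpoint> <user> <group>
give_to_user() {
    # -R: apply recursively to every file and directory under the mountpoint
    chown -R "$2:$3" "$1"
}
```

For example: `give_to_user /mnt/destination/dataset1 myuser mygroup`.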

Before trying the command above, I went a different route, with less success.

I was replicating the top-level dataset on its own, using:

    zfs send -vv source@first_snapshot | zfs receive -F destination@first_snapshot

However, only the dataset at the top of the tree was replicated. The datasets nested under it would show up as created, but none of their snapshots were replicated.

To fix this, I had to run:

    zfs send -vv source/dataset1@first_snapshot | zfs receive -F destination/dataset1@first_snapshot
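Repeating that per-dataset send by hand is what makes this route tedious, so it can be scripted as a loop over the datasets that `zfs list` reports. This is a sketch. It assumes every dataset already has the snapshot (for example, from `zfs snapshot -r`). The function name `replicate_each` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: the non-recursive, per-dataset route, scripted.
# Each dataset under <source_pool> is sent on its own (no -R) and received
# under the same relative name in <destination_pool>.
# Usage: replicate_each <source_pool> <snapshot_name> <destination_pool>
replicate_each() {
    src=$1 snap=$2 dst=$3
    # -H: no header; -o name: names only; -s name: parents sort before children
    zfs list -H -o name -s name -r "$src" | while read -r ds; do
        # e.g. source/dataset1 -> destination/dataset1
        target=$dst${ds#"$src"}
        zfs send -v "$ds@$snap" | zfs receive -F "$target" \
            || echo "receive failed for $ds" >&2
    done
}
```

For example: `replicate_each source first_snapshot destination`.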

I had to run the per-dataset send for every dataset and then run an incremental replication:

    zfs send -vv -i source@first_snapshot source@last_snapshot | zfs receive destination@last_snapshot

    zfs send -vv -i source/dataset1@first_snapshot source/dataset1@last_snapshot | zfs receive destination/dataset1@last_snapshot

The major issues with this sequence are that it is easy to get confused and that it is extremely tedious.

I didn't get everything right, and replicating a single dataset could easily take hours to finish.
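The incremental pass can be looped in the same way, which removes most of the bookkeeping. This is a sketch. It assumes that every destination dataset already holds the starting snapshot. The function name `increment_each` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: incremental pass of the per-dataset route.
# For each dataset under <source_pool>, send the changes between @<from>
# and @<to> and apply them to the matching dataset in <destination_pool>.
# Usage: increment_each <source_pool> <from_snapshot> <to_snapshot> <destination_pool>
increment_each() {
    src=$1 from=$2 to=$3 dst=$4
    zfs list -H -o name -s name -r "$src" | while read -r ds; do
        target=$dst${ds#"$src"}
        # send -i: incremental stream of this one dataset, @from -> @to
        # receive -F: roll back changes made on the destination since @from
        zfs send -v -i "$ds@$from" "$ds@$to" | zfs receive -F "$target" \
            || echo "incremental receive failed for $ds" >&2
    done
}
```

Combining -R with -i should produce the same result as a single incremental replication stream: `zfs send -R -i source@first_snapshot source@last_snapshot | zfs receive -F -d destination`.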

As an experiment, I also killed the replication task after a few snapshots had gone through and then checked dataset availability. The dataset might not show up in the FreeNAS storage tree, but the snapshots were still there, as running "zfs list -t snapshot" showed.

Cloning them worked, and the contents seemed accessible as well. As I said, though, this method was a bit unclear and confusing.

What is clear, however, is the effect of replicating the top-level dataset non-recursively, that is, without the -R option used in the first command above. It does not replicate the content of the underlying datasets: it just creates those datasets without copying their snapshots to them.

Overall, the safest way to confirm that a replication succeeded is to compare the lists of snapshots on the source and the destination.
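That comparison can be scripted. This sketch lists snapshot names on both pools, strips the pool name so that relative names line up, and prints anything present on only one side. The function name `compare_snapshots` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: compare snapshot names on two pools, ignoring the
# pool name itself. Returns 0 only when both lists match.
# Usage: compare_snapshots <source_pool> <destination_pool>
compare_snapshots() {
    a=$(mktemp) b=$(mktemp)
    # -H: no header; -t snapshot: snapshots only; -o name: names only
    zfs list -H -t snapshot -o name -r "$1" | sed "s|^$1||" | sort >"$a"
    zfs list -H -t snapshot -o name -r "$2" | sed "s|^$2||" | sort >"$b"
    # comm -3 hides common lines; column 1 = only on source,
    # tab-indented column 2 = only on destination
    comm -3 "$a" "$b"
    cmp -s "$a" "$b"; rc=$?
    rm -f "$a" "$b"
    return $rc
}
```

For example, `compare_snapshots source destination` prints nothing and returns 0 when every snapshot made it across.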

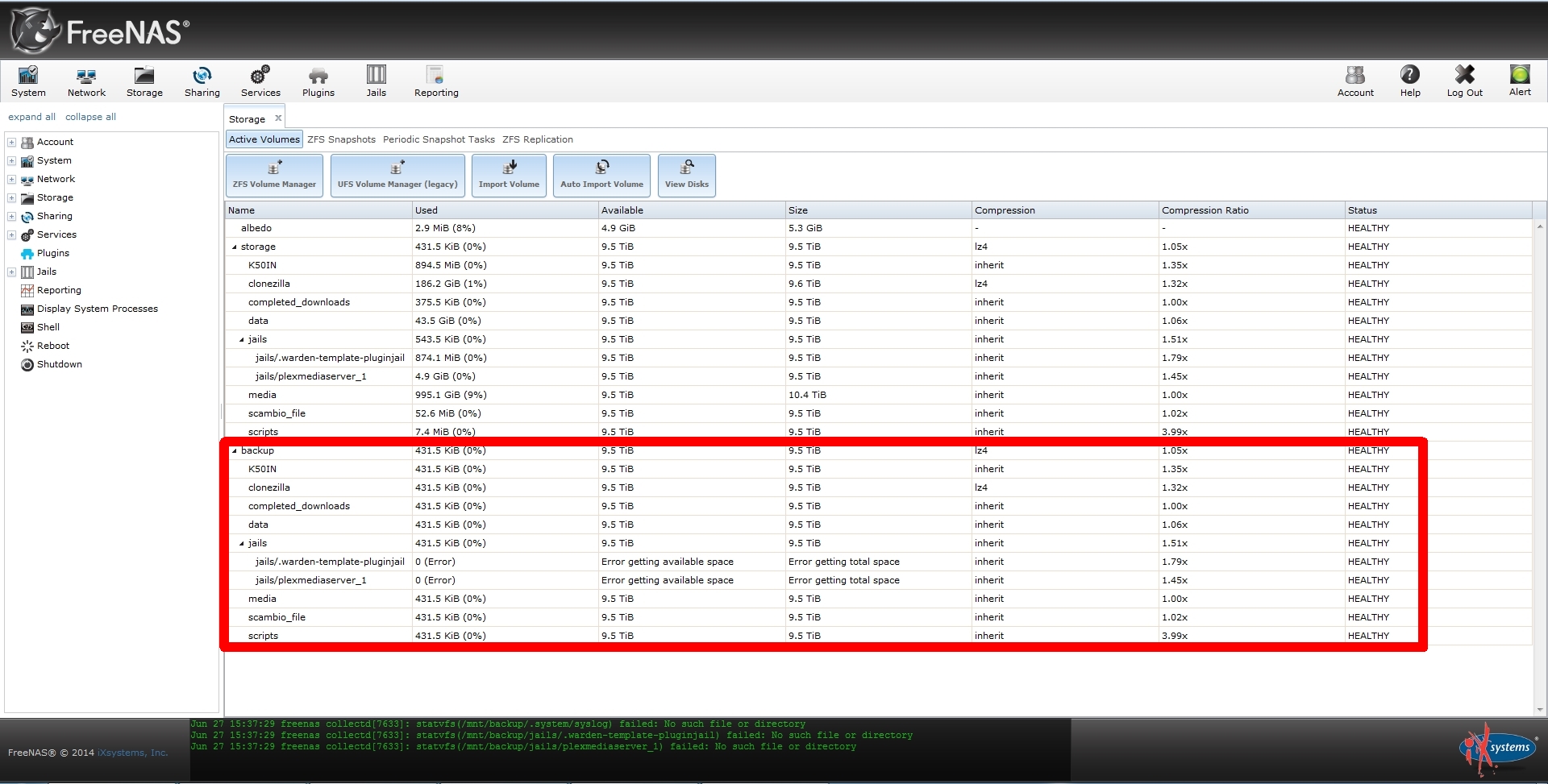

Also, when using the -R option, FreeNAS shows the "error getting the available space" message for a dataset until its last snapshot has been committed to the destination pool. The message persists even after all of the dataset's other snapshots have been committed.