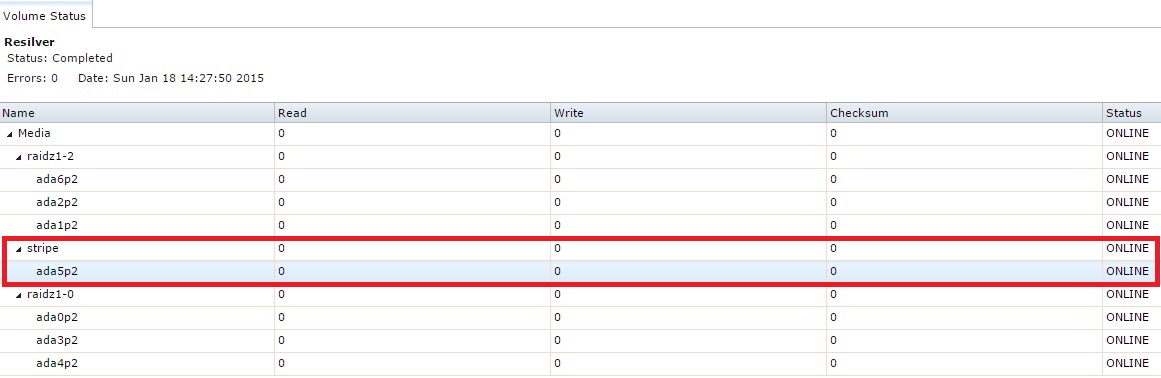

I started with a RAIDZ-1 of 3 disks. When I started to run out of room, I added a single striped disk as a temporary solution. Now that I have 3 new disks, I expanded the volume with a second RAIDZ-1. My question is, how do I remove the single striped disk? I understand it is a liability since it is a single point of failure. Can I move the data on that disk to the new RAIDZ-1?


How to remove Striped drive from a ZFS volume?

- Thread starter: rumdr19

- Status: Not open for further replies.

gpsguy

Active Member

- Joined: Jan 22, 2012

- Messages: 4,472

As Jailer said, you can't remove the drive without destroying your pool and starting over.

However, you could mitigate the risk by adding a mirror to ada5. Unfortunately, you can't add the mirror via the webGUI. To do it, you'd need to use the command line. Read this thread to see how another user did it: https://forums.freenas.org/index.ph...-existing-stripe-to-mirror.26326/#post-166645

If you decide to go this route, be very careful. Doing things from the command line isn't recommended, but it's the only way you can create a mirror after the fact.
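For readers wondering what "adding a mirror" looks like on the command line, here is a minimal dry-run sketch. It only prints the final `zpool attach` step and does not touch any pool. The pool name `tank` and the new disk `ada6` are assumptions for illustration; `ada5` is the striped disk mentioned above. The linked thread may involve extra preparation (FreeNAS normally partitions disks and refers to them by gptid), which this sketch does not cover.

```shell
#!/bin/sh
# Dry-run sketch: prints the command instead of running it.
# POOL and NEW are hypothetical placeholders; EXISTING is the lone striped disk.
POOL="tank"
EXISTING="ada5"
NEW="ada6"

# `zpool attach <pool> <existing-device> <new-device>` turns a single-disk
# vdev into a two-way mirror; ZFS then resilvers the new disk.
attach_cmd() {
    printf 'zpool attach %s %s %s\n' "$1" "$2" "$3"
}

attach_cmd "$POOL" "$EXISTING" "$NEW"
```

After a real attach, `zpool status` should show the resilver in progress, and the former single disk listed under a `mirror` vdev once it completes.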


Thanks a lot for the info. I will review the post a few times and decide how to proceed.

I was hoping it didn't have to come down to that. Is there really no other solution?

This is the primary reason why I created my "noob presentation". Gotta do things right or you end up doing them twice.


I knew the risks when I did it. I just needed to expand the pool and did not have the time to wait.


You are lucky. We've had people add a disk to a pool like you did, and within hours that thing fails, taking the entire pool with it. :(

SirMaster

Patron

- Joined: Mar 19, 2014

- Messages: 241

Fortunately, ZFS has gained this feature now. However, I'm sure it is a long way from being released in FreeNAS, as it needs more testing before it's pulled into production Illumos and then down into BSD, Linux, and OS X. So it's no help to the OP in this case, but someday users will get to take advantage of it for issues such as this :)

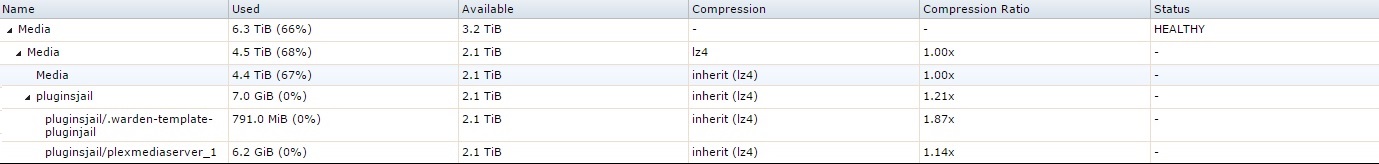

So I purchased 3x 4TB WD Reds to replace this setup. I plan on setting up a RAIDZ1 pool. I know RAIDZ1 is not the safest, but this is a media zpool. It would not be the end of the world if doomsday happens. I will still have a bit of redundancy with this setup.

My question is, how do I migrate the data to the new zpool without losing my jail and plugin settings? I am mainly concerned with keeping the integrity of my Plex server. As I understand it, I will not be able to use autoexpand due to the difference between the current and planned drive configurations.

Basically, I want the new zpool to look like the current one.

UPDATE: Once I received the new drives, I followed THIS THREAD to copy my pool to the new drives. Pretty easy. I let the system do its thing overnight. When I got up in the morning, it had moved just under 5TB.
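The thread referenced in the update is not reproduced here, so the exact steps used are unknown. A commonly used way to copy a whole pool, jails and plugin datasets included, is a recursive snapshot followed by a replication stream. The dry-run sketch below only prints those commands; the pool names `oldpool` and `newpool` and the snapshot name `migrate` are assumptions.

```shell
#!/bin/sh
# Dry-run sketch: prints the replication commands instead of running them.
# OLD, NEW and SNAP are hypothetical names.
OLD="oldpool"
NEW="newpool"
SNAP="migrate"

plan_migration() {
    old=$1
    new=$2
    snap=$3
    # 1. Recursive snapshot, so every dataset shares one point in time.
    printf 'zfs snapshot -r %s@%s\n' "$old" "$snap"
    # 2. A replication stream (-R) carries child datasets, snapshots and
    #    properties; -F lets the receive overwrite the new, empty pool.
    printf 'zfs send -R %s@%s | zfs receive -F %s\n' "$old" "$snap" "$new"
}

plan_migration "$OLD" "$NEW" "$SNAP"
```

Because `-R` preserves dataset properties, the copy should end up looking like the original pool, which is what the post above asks for. Services that reference the old pool name would still need to be repointed.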


I've got a "similar" issue... Ran into issues with corruption on my FreeNAS 9.10 running on 8 GB USB thumb drive (HP NL-40)...

3 x 3 TB Seagate drives in raidz1-0...

I booted up in FreeNAS 11.0 and imported... Then restored a backup of my 9.10... This all seemed to go "okay" - until :

I attempted to add a 4 TB drive to that raidz1-0 - however it instead striped / appended onto the zpool!

I've done this before... This same raidz1-0 zpool started life as 4 x 1.5 TB disks, until one of the 1.5 TB drives died... I slowly (over a period of days) replaced each remaining 1.5 one with the 3 x 3 TB drives... I did all this on the CLI using zfs and zpool commands...

My plan was to add the 4 TB disk (but only use 3 TB of it - i.e. size of the smallest disk in the zpool) into that pool - NOT append it at the end. Is there a way to get this off the end of the "stripe", and instead added to the raidz1-0?

Apologies in advance for the "swearingness" of my pool name...


Code:

```
root@rhea:~ # zpool status UCNTUFCK
  pool: UCNTUFCK
 state: ONLINE
status: Some supported features are not enabled on the pool. The pool can
        still be used, but some features are unavailable.
action: Enable all features using 'zpool upgrade'. Once this is done,
        the pool may no longer be accessible by software that does not support
        the features. See zpool-features(7) for details.
  scan: scrub repaired 0 in 10h40m with 0 errors on Sun Aug  6 10:40:26 2017
config:

        NAME                                            STATE     READ WRITE CKSUM
        UCNTUFCK                                        ONLINE       0     0     0
          raidz1-0                                      ONLINE       0     0     0
            gptid/baa844fc-f24e-11e5-8de6-f46d0421b2ff  ONLINE       0     0     0
            gptid/bb7776aa-f24e-11e5-8de6-f46d0421b2ff  ONLINE       0     0     0
            gptid/bc43b270-f24e-11e5-8de6-f46d0421b2ff  ONLINE       0     0     0
          gptid/61b1da58-8cbd-11e7-9f09-001517730fdd    ONLINE       0     0     0

errors: No known data errors
```

Or am I looking at having to back up my data and recreate the pool from scratch?
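In `zpool status` output, a disk that sits at the same indentation level as `raidz1-0` is its own top-level vdev with no redundancy. The sketch below is one hedged way to spot that mechanically. It assumes the usual layout, where top-level vdevs are indented two columns deeper than the pool name, and the function name `find_lone_disks` is made up for this example.

```shell
#!/bin/sh
# Print top-level vdevs that are plain disks (no redundancy), reading
# `zpool status` output from stdin.
# Assumption: top-level vdevs are indented two columns more than the pool
# name, and member disks of raidz/mirror vdevs are indented further.
find_lone_disks() {
    awk '
        $1 == "NAME" && $2 == "STATE" { in_cfg = 1; pool_indent = -1; next }
        $1 == "errors:"               { in_cfg = 0 }
        !in_cfg || NF == 0            { next }
        {
            indent = match($0, /[^ \t]/) - 1
            # First line after the header is the pool itself.
            if (pool_indent < 0) { pool_indent = indent; aux = 0; next }
            # Section headers such as "logs" or "cache" sit at pool level.
            if (indent == pool_indent) {
                aux = ($1 ~ /^(logs|cache|spares|special|dedup)$/)
                next
            }
            if (!aux && indent == pool_indent + 2 &&
                $1 !~ /^(raidz|mirror|draid)/)
                print $1
        }
    '
}

# Usage (not run here): zpool status UCNTUFCK | find_lone_disks
```

Run against the output above, this would be expected to print only the fourth gptid, the 4 TB disk that was appended instead of joining `raidz1-0`.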

Stux

MVP

- Joined: Jun 2, 2016

- Messages: 4,419


Same answer as last time. You can either back up your data, recreate the pool how it's supposed to be, and then restore, or create a new pool with new disks and transfer your data from the old pool.

Or, you can mitigate the risk by adding an additional drive as a mirror to the striped disk, which is not really a good solution.
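When recreating or extending a pool from the command line, a dry run can show the resulting layout before anything is written, which helps catch an accidental stripe. The sketch below only prints a `zpool add -n` command. The pool name `tank` and the disks `ada6` to `ada8` are assumptions for illustration.

```shell
#!/bin/sh
# Dry-run sketch: prints the command; pool and device names are hypothetical.
preview_add_cmd() {
    pool=$1
    shift
    # -n makes zpool display the configuration that would be used
    # without actually adding the vdevs.
    printf 'zpool add -n %s %s\n' "$pool" "$*"
}

preview_add_cmd tank raidz1 ada6 ada7 ada8
```

If the preview shows a bare disk at the same level as an existing `raidz1` vdev, the real command would create exactly the striped layout discussed in this thread.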

Thanks for the quick answer. I'm already doing that (backing up my old data over NFS to USB-connected hard drives on a Linux machine using rsync; I'm guessing I'd get better throughput using rsync directly, but NFS seems okay over 1 Gbit). Once I've got my data backed up elsewhere, I might start FreeNAS from scratch instead of building on the legacy of my old setup from configuration restores...

The biggest PITA being that the largest hard drives I have are no bigger than 1.5 TB - so I'm having to juggle stuff around... fortunately I keep my movies, tv-shows, binaries, music and "data" in separate folders...


Stux

MVP

- Joined: Jun 2, 2016

- Messages: 4,419

Sorry for your pain.

The OpenZFS guys are working on device removal, but it's not there yet. And when it is, it'll work by redirecting requests to the missing device.

https://www.delphix.com/blog/delphix-engineering/openzfs-device-removal

The good news is, when it is there, if you just added it and made a mistake, there won't be too many requests to redirect.
