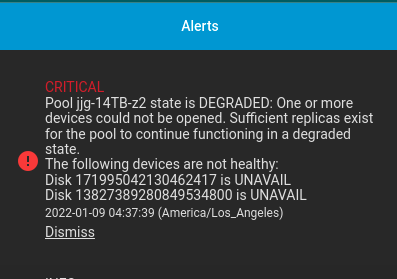

I unplugged two disks and got this error message.

However, these serial numbers do not match the serial numbers shown when all disks are attached.

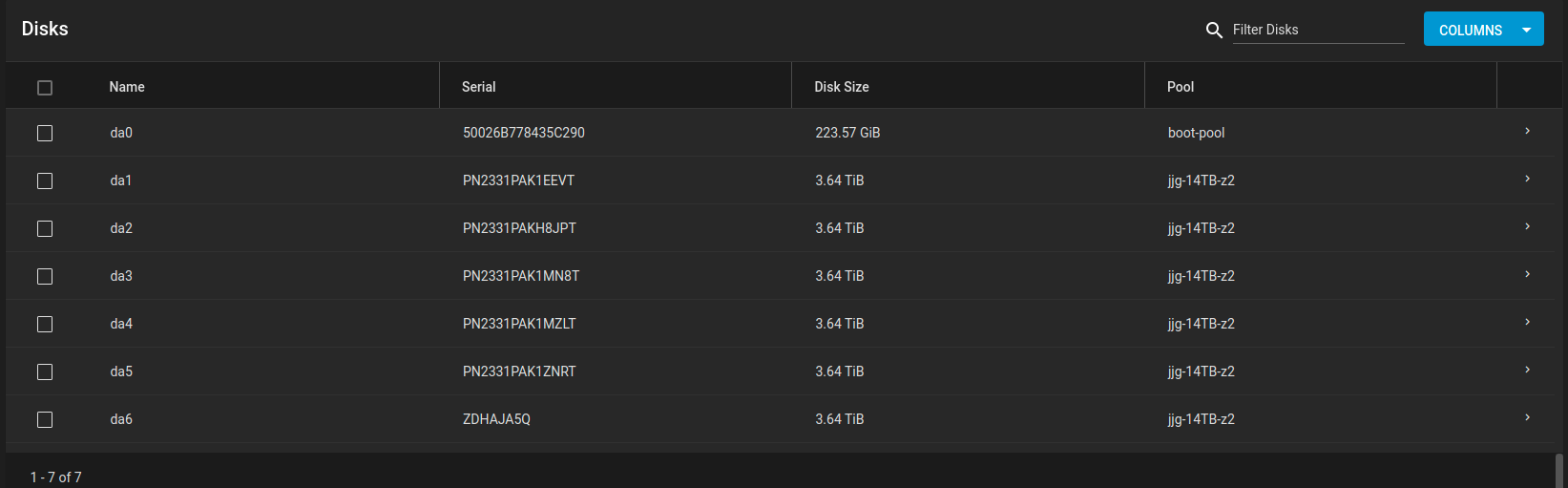

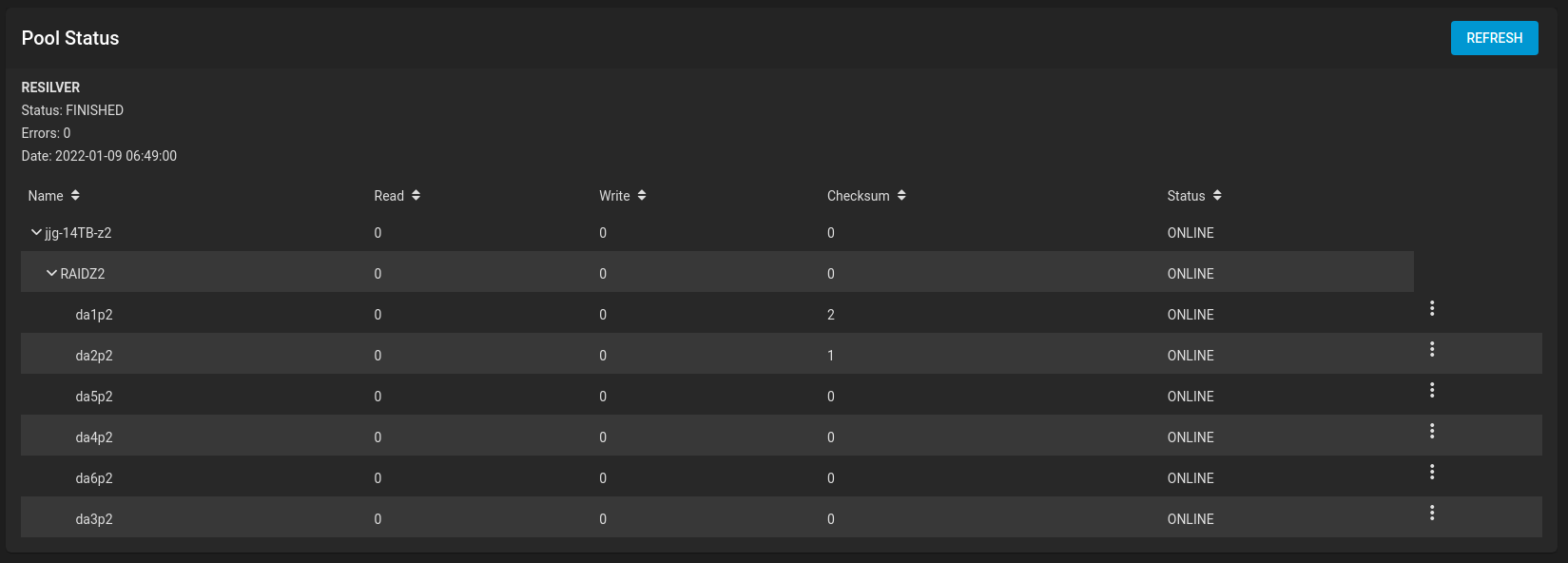

Here is the screen after I plugged the two disks back in.

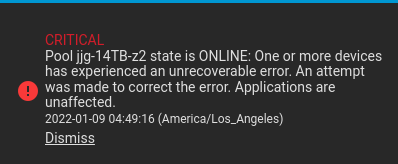

After I reinserted them, an alert also appeared saying that something did not recover.

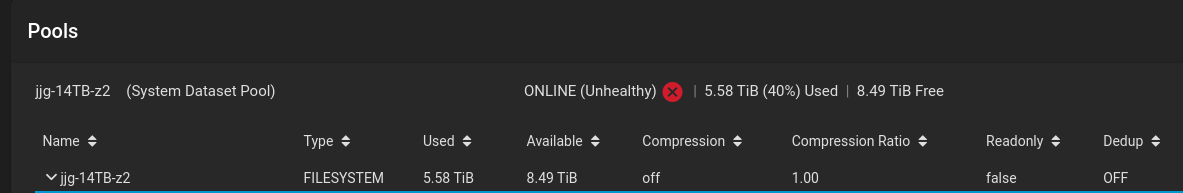

My pools also show an alert.

Question 1: How do I match the serial numbers in the disk failure alert to the serial numbers displayed in the disk window?

Question 2: How do I clear the red X in the pool window, or do I still have a problem? Are the checksum errors caused by disconnecting the disks, or does it mean something really got damaged when I disconnected them for the experiment?
