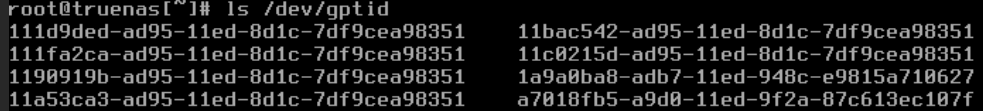

My TrueNAS setup:

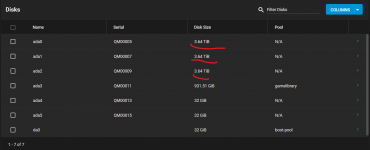

Four days ago one of my 4TB hard drives failed. I immediately ordered a replacement, and it arrived yesterday. I put it into my Proxmox server, located it, and wiped it through the Proxmox interface (or so I thought). It turns out I wiped one of the drives that was still in the ZFS pool (which was already no longer redundant), not the new one. The dumbest mistake I've made in my life!

So what I have right now is three working 4TB drives. One is new, with no data on it. Another is the one I wiped by mistake (it used to hold pool data), and the data on the last one is still intact.

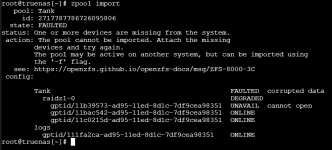

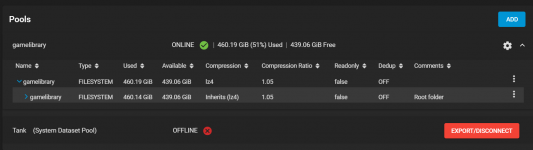

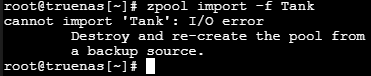

Is there any chance I can recover the data on the drive I wiped, so I can get the pool back up and running? Currently, TrueNAS reports the pool as unavailable.

- Runs as a VM on Proxmox

- Three 4TB hard drives passed through to the VM

- Pool layout: RAIDZ1

- File system: ZFS
