Brezlord

Contributor | Joined: Jan 7, 2017 | Messages: 189

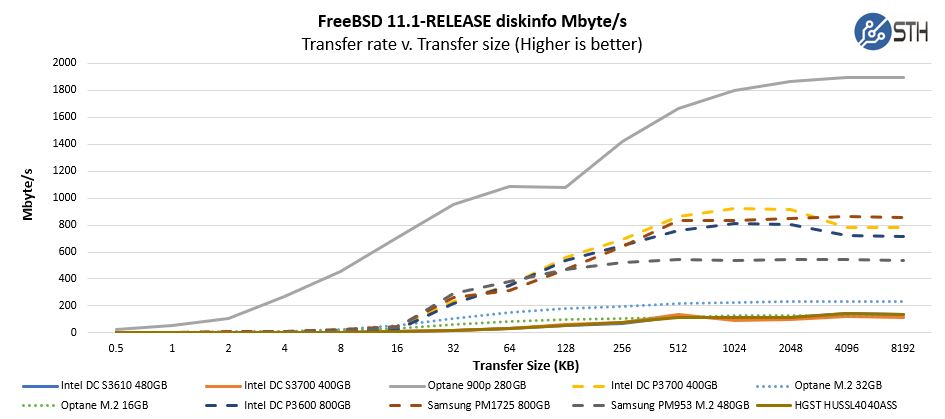

Hi all. I was hoping someone could help me with poor write performance on my NVMe SLOG. I am only getting 230 MB/s of writes to the drive, yet I get between 696 MB/s and 1164 MB/s when testing the drive locally. I have 10GbE networking and get the expected throughput with the MTU set to 1518, just over 9.8 Gb/s. When I write to the pool with sync off I get 545 MB/s. Below are the test results for the NVMe drive.
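One way to reproduce the sync-on versus sync-off gap from a client's point of view is to time the same writes with and without forcing each one to stable storage. On a ZFS dataset with `sync=standard`, every `fsync()` should commit through the ZIL, and so through the SLOG if one is attached. This is a minimal Python sketch, not a definitive benchmark; the function name, the 128 KiB block size and any test path are my own assumptions rather than anything from the setup above.

```python
import os
import time


def write_throughput(path: str, total_bytes: int, block_size: int = 128 * 1024,
                     sync_each_write: bool = False) -> float:
    """Write total_bytes to path in block_size chunks and return MB/s (10**6 bytes/s).

    Hypothetical helper: when sync_each_write is True, os.fsync() is called after
    every block, so each block must reach stable storage before the next is written.
    """
    block = os.urandom(block_size)  # random (incompressible) data so compression cannot inflate results
    written = 0
    start = time.perf_counter()
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        while written < total_bytes:
            # os.write may write fewer bytes than requested, so count what it reports
            n = os.write(fd, block[: min(block_size, total_bytes - written)])
            written += n
            if sync_each_write:
                os.fsync(fd)  # force this block to stable storage (ZIL/SLOG on ZFS)
        os.fsync(fd)  # flush anything still buffered so both modes end on stable storage
    finally:
        os.close(fd)
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero interval
    return written / elapsed / 1e6
```

For example, compare `write_throughput("/mnt/tank/slogtest.bin", 1 << 30)` with the same call plus `sync_each_write=True` (the path is hypothetical). Real clients such as NFS may issue different write sizes, so treat the block size as a tunable guess.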

Any help in solving this would be greatly appreciated.

Simon

edit: add code tags

```
root@nas1:~ # diskinfo -t /dev/nvd0
/dev/nvd0
    512                           # sectorsize
    480103981056                  # mediasize in bytes (447G)
    937703088                     # mediasize in sectors
    0                             # stripesize
    0                             # stripeoffset
    SAMSUNG MZ1LV480HCHP-000MU    # Disk descr.
    S2C1NAAH600033                # Disk ident.
    Yes                           # TRIM/UNMAP support
    0                             # Rotation rate in RPM

Seek times:
    Full stroke:      250 iter in   0.012374 sec =    0.049 msec
    Half stroke:      250 iter in   0.011606 sec =    0.046 msec
    Quarter stroke:   500 iter in   0.018414 sec =    0.037 msec
    Short forward:    400 iter in   0.015944 sec =    0.040 msec
    Short backward:   400 iter in   0.017356 sec =    0.043 msec
    Seq outer:       2048 iter in   0.058376 sec =    0.029 msec
    Seq inner:       2048 iter in   0.062954 sec =    0.031 msec

Transfer rates:
    outside:       102400 kbytes in   0.093565 sec =  1094426 kbytes/sec
    middle:        102400 kbytes in   0.080247 sec =  1276060 kbytes/sec
    inside:        102400 kbytes in   0.167414 sec =   611657 kbytes/sec
```
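The transfer figures above are in kbytes/sec, which makes them awkward to compare with the MB/s numbers in the post. Below is a small Python sketch that pulls them out and converts them, assuming diskinfo's "kbytes" are 1024-byte units; the regex and function name are hypothetical. Keep in mind that `diskinfo -t` measures reads, so these rates say little about the small synchronous writes a SLOG receives. If your FreeBSD release's diskinfo(8) lists a synchronous write test (`-S`, enabled together with `-w`), that is closer to SLOG usage.

```python
import re
from typing import Dict

# Matches diskinfo -t transfer lines such as
# "outside:  102400 kbytes in  0.093565 sec =  1094426 kbytes/sec"
TRANSFER_RE = re.compile(
    r"(outside|middle|inside):\s+(\d+)\s+kbytes in\s+([\d.]+)\s+sec\s*=\s*(\d+)\s+kbytes/sec"
)


def transfer_rates_mb_s(diskinfo_output: str) -> Dict[str, float]:
    """Return {zone: MB/s (10**6 bytes/s)}, assuming 1 kbyte = 1024 bytes."""
    rates = {}
    for zone, _kbytes, _seconds, kbytes_per_sec in TRANSFER_RE.findall(diskinfo_output):
        rates[zone] = int(kbytes_per_sec) * 1024 / 1e6
    return rates
```

Under that assumption, the output above works out to roughly 1121 MB/s (outside), 1307 MB/s (middle) and 626 MB/s (inside).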
