Just had my

- DL360 arrive with a Smart Array P440ar

- DL380 arrive with a Smart HBA H240ar

I've set it to HBA mode and it's installing, just not sure if I should load a better firmware?

What's the best firmware version to upgrade these to, and the best method for doing it?

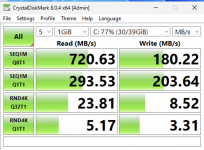

Will report performance soon
