Brezlord (Contributor, joined Jan 7, 2017, 189 messages)

I'm at a loss to work out why I can't get decent SLOG write performance from an Intel Optane 900p. I have no tunables set.

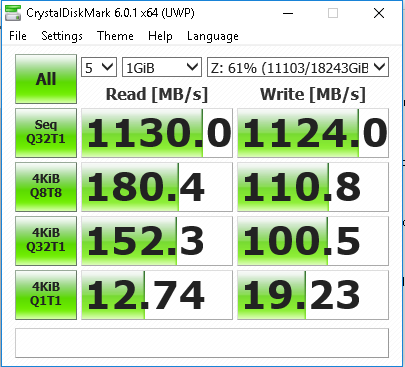

SMB dataset, sync off: great results.

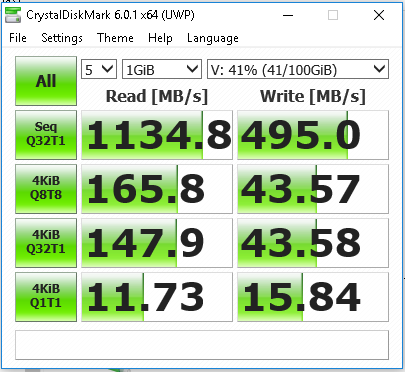

SMB dataset, sync on: not good.

gstat backs this up. The Optane is in an x8 PCIe 3.0 slot running at x4, wired directly to the CPU rather than through the PCH. I have tried a different x8 slot with no change.
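A rough sanity check on the slot question, offered as a sketch: the 900p is a PCIe 3.0 x4 card, so an x4 link is what it should negotiate. The arithmetic below assumes the PCIe 3.0 line rate (8 GT/s per lane, 128b/130b encoding) and ignores protocol overhead, then compares that upper bound with the best figure from the `diskinfo -wS` output later in this post. diskinfo's "Mbytes/s" column works out to MiB/s (size divided by latency with 1 KiB = 1024 bytes reproduces it), so it is converted to MB/s first. The constant and function names are illustrative only.

```python
# PCIe 3.0 line rate: 8 GT/s per lane with 128b/130b encoding.
# Packet/protocol overhead is ignored, so this is an upper bound.
GT_PER_S = 8e9
ENCODING = 128 / 130


def pcie3_bandwidth_mb_s(lanes):
    """Upper-bound one-direction bandwidth of a PCIe 3.0 link, in MB/s (10**6 bytes/s)."""
    return GT_PER_S * ENCODING * lanes / 8 / 1e6


# Best result from the `diskinfo -wS` run in this post (8192 kbytes writes).
# diskinfo's "Mbytes/s" works out to MiB/s, so convert to MB/s for comparison.
DISKINFO_PEAK_MIB_S = 1908.4
peak_mb_s = DISKINFO_PEAK_MIB_S * 1048576 / 1e6

x4 = pcie3_bandwidth_mb_s(4)
print(f"PCIe 3.0 x4 upper bound: {x4:6.0f} MB/s")
print(f"diskinfo peak:           {peak_mb_s:6.0f} MB/s")
print(f"fraction of link used:   {peak_mb_s / x4:6.1%}")
```

On these numbers the x4 link offers roughly twice the bandwidth the drive actually delivered, so the slot width is unlikely to be what limits sync writes.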

Any suggestions are welcome.



```
root@nas1:~ # diskinfo -wS /dev/nvd0
/dev/nvd0
        512                     # sectorsize
        280065171456            # mediasize in bytes (261G)
        547002288               # mediasize in sectors
        0                       # stripesize
        0                       # stripeoffset
        INTEL SSDPED1D280GA     # Disk descr.
        PHMB742301K1280CGN      # Disk ident.
        Yes                     # TRIM/UNMAP support
        0                       # Rotation rate in RPM

Synchronous random writes:
         0.5 kbytes:     16.7 usec/IO =     29.2 Mbytes/s
           1 kbytes:     16.8 usec/IO =     58.3 Mbytes/s
           2 kbytes:     17.1 usec/IO =    114.1 Mbytes/s
           4 kbytes:     14.5 usec/IO =    268.7 Mbytes/s
           8 kbytes:     16.7 usec/IO =    468.6 Mbytes/s
          16 kbytes:     21.4 usec/IO =    729.7 Mbytes/s
          32 kbytes:     30.2 usec/IO =   1035.4 Mbytes/s
          64 kbytes:     47.7 usec/IO =   1309.7 Mbytes/s
         128 kbytes:     83.4 usec/IO =   1499.0 Mbytes/s
         256 kbytes:    151.2 usec/IO =   1653.9 Mbytes/s
         512 kbytes:    282.9 usec/IO =   1767.1 Mbytes/s
        1024 kbytes:    546.1 usec/IO =   1831.1 Mbytes/s
        2048 kbytes:   1075.3 usec/IO =   1860.0 Mbytes/s
        4096 kbytes:   2112.9 usec/IO =   1893.1 Mbytes/s
        8192 kbytes:   4192.1 usec/IO =   1908.4 Mbytes/s
```
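The `diskinfo` results can be turned into IOPS to show where the drive is latency-bound rather than bandwidth-bound. The sketch below parses the synchronous-write lines from the output above and recomputes throughput as size divided by per-IO latency, assuming 1 kbyte = 1024 bytes (which reproduces diskinfo's Mbytes/s column to within rounding). `parse_sync_writes` and `summarize` are hypothetical helper names used only for illustration.

```python
import re

# Synchronous random write results copied from the `diskinfo -wS /dev/nvd0` run above.
DISKINFO_OUTPUT = """\
Synchronous random writes:
   0.5 kbytes:     16.7 usec/IO =     29.2 Mbytes/s
     1 kbytes:     16.8 usec/IO =     58.3 Mbytes/s
     2 kbytes:     17.1 usec/IO =    114.1 Mbytes/s
     4 kbytes:     14.5 usec/IO =    268.7 Mbytes/s
     8 kbytes:     16.7 usec/IO =    468.6 Mbytes/s
    16 kbytes:     21.4 usec/IO =    729.7 Mbytes/s
    32 kbytes:     30.2 usec/IO =   1035.4 Mbytes/s
    64 kbytes:     47.7 usec/IO =   1309.7 Mbytes/s
   128 kbytes:     83.4 usec/IO =   1499.0 Mbytes/s
   256 kbytes:    151.2 usec/IO =   1653.9 Mbytes/s
   512 kbytes:    282.9 usec/IO =   1767.1 Mbytes/s
  1024 kbytes:    546.1 usec/IO =   1831.1 Mbytes/s
  2048 kbytes:   1075.3 usec/IO =   1860.0 Mbytes/s
  4096 kbytes:   2112.9 usec/IO =   1893.1 Mbytes/s
  8192 kbytes:   4192.1 usec/IO =   1908.4 Mbytes/s
"""

# Matches e.g. "4 kbytes:     14.5 usec/IO =    268.7 Mbytes/s".
LINE_RE = re.compile(
    r"([\d.]+)\s+kbytes:\s+([\d.]+)\s+usec/IO\s+=\s+([\d.]+)\s+Mbytes/s"
)


def parse_sync_writes(text):
    """Return (size_kib, latency_us, reported_mib_s) tuples."""
    return [tuple(float(g) for g in m.groups()) for m in LINE_RE.finditer(text)]


def summarize(rows):
    """Derive IOPS and throughput from per-IO latency alone."""
    out = []
    for size_kib, usec, reported in rows:
        iops = 1e6 / usec                 # one write completes every `usec` microseconds
        derived = size_kib * iops / 1024  # KiB/s -> MiB/s
        out.append((size_kib, usec, iops, derived, reported))
    return out


if __name__ == "__main__":
    print(f"{'size KiB':>9} {'usec':>7} {'IOPS':>8} {'MiB/s':>8} {'reported':>9}")
    for size_kib, usec, iops, derived, reported in summarize(parse_sync_writes(DISKINFO_OUTPUT)):
        print(f"{size_kib:9.1f} {usec:7.1f} {iops:8.0f} {derived:8.1f} {reported:9.1f}")
```

Up to 8 KiB the latency stays between about 14.5 and 17 µs, so the drive completes a roughly constant 58,000 to 69,000 writes per second and throughput grows almost linearly with write size; from about 64 KiB upward the gains taper off towards ~1900 MiB/s. Small synchronous writes are therefore bounded by per-IO latency, which suggests the write size the sync-on SMB workload actually sends to the SLOG matters a great deal.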
