mikesoultanian (Dabbler; joined Aug 3, 2017; 43 messages)

Hi,

I'm new to FreeNAS, so I apologize if I'm not understanding things quite right. I've been reading through a lot of forum posts and the manual, and I think I'm starting to get a grip on things. At my company we've inherited a FreeNAS box, so I've had to get up to speed very quickly because we had a big Hyper-V failure and we were moving data all over the place. The current storage configuration is really wacky, so this is my opportunity to clean things up.

I have a Hyper-V server with ~60 VMs, and it is connected to the FreeNAS box over 10Gb Ethernet. We currently have 24 1TB SSDs and a bunch of other spinning disks. Of the remaining 48 spinning disks, I plan to replace 24 with 1TB SSDs as well. I want all of the SSDs to be in a RAID 10 configuration (the first 24 already seem to be). This is the part I'm not totally sure about and would like confirmed.

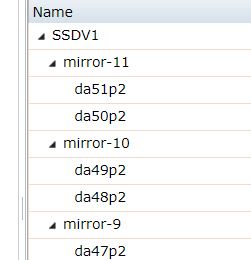

When I go to volume status of the SSD volume, this is what it looks like:

Does that mean it is in fact striped mirrors (RAID 10)? It continues all the way to mirror-0, which accounts for all 24 1TB drives.
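For anyone who wants to check the layout from the command line rather than the GUI, here is a minimal Python sketch that reads the text printed by `zpool status` and reports whether every top-level vdev in the pool is a mirror. It assumes the usual layout where config rows start with a tab, the pool sits at zero spaces of indent, vdevs at two, and member disks at four. The device names (`da0p2` and so on) and the shortened two-mirror sample are made up for illustration; only the pool name `SSDV1` comes from this post.

```python
import re

# Hypothetical, shortened `zpool status` output (two mirrors instead of twelve).
SAMPLE = (
    "  pool: SSDV1\n"
    " state: ONLINE\n"
    "config:\n"
    "\n"
    "\tNAME          STATE     READ WRITE CKSUM\n"
    "\tSSDV1         ONLINE       0     0     0\n"
    "\t  mirror-0    ONLINE       0     0     0\n"
    "\t    da0p2     ONLINE       0     0     0\n"
    "\t    da1p2     ONLINE       0     0     0\n"
    "\t  mirror-1    ONLINE       0     0     0\n"
    "\t    da2p2     ONLINE       0     0     0\n"
    "\t    da3p2     ONLINE       0     0     0\n"
    "\n"
    "errors: No known data errors\n"
)


def top_level_vdevs(status_text, pool_name):
    """Map each top-level vdev of pool_name to its list of member devices."""
    vdevs = {}
    in_pool = False
    current = None
    for line in status_text.splitlines():
        if not line.startswith("\t"):
            continue  # assumption: only config rows are tab-indented
        body = line[1:]
        if not body.strip():
            continue
        indent = len(body) - len(body.lstrip(" "))
        name = body.split()[0]
        if indent == 0:
            # Header row, the pool itself, or a section such as logs/cache.
            in_pool = name == pool_name
            current = None
        elif in_pool and indent == 2:
            current = name
            vdevs[current] = []
        elif in_pool and indent == 4 and current is not None:
            vdevs[current].append(name)
    return vdevs


def is_striped_mirrors(vdevs):
    """True when there are several vdevs and each is a mirror of 2+ disks."""
    return len(vdevs) > 1 and all(
        re.fullmatch(r"mirror-\d+", name) is not None and len(members) >= 2
        for name, members in vdevs.items()
    )


if __name__ == "__main__":
    layout = top_level_vdevs(SAMPLE, "SSDV1")
    print(layout)
    print("striped mirrors:", is_striped_mirrors(layout))
```

With the real output pasted in place of `SAMPLE`, a 24-drive pool of two-way mirrors would be expected to show twelve entries named `mirror-0` through `mirror-11`, each with two members.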

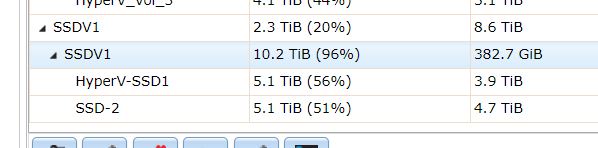

If that's the case, it looks like that volume has one Dataset and two 5TB zvols for the iSCSI connections:

Here's what I would like to do:

1. create a new 1GB zvol for the Hyper-V witness

2. get rid of SSD-2 (there is no data on it now)

3. extend HyperV-SSD1 to fill the volume (except for the witness disk above)

4. add 24 more 1TB SSDs, then extend the SSDV1 volume and the HyperV-SSD1 zvol so we max out that zvol (I actually just found this: http://doc.freenas.org/9.10/sharing.html#growing-luns). Is there a reason that the zvol can't use more than 80% of the volume (per the link)?

5. is there a way to upgrade to larger 2TB drives?

Does all this seem doable? If I understand correctly, I can extend the volume in the Volume Manager by adding pairs of mirrored drives to the current volume until all of the new drives are used. To extend the zvol, I just update the size in the GUI and then use the command line to update the size of the associated extent. Can all of this be done without losing any data that already exists in HyperV-SSD1 (yes, I will have it backed up)?
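To put rough numbers on the plan, here is a back-of-the-envelope Python sketch of the capacity arithmetic. It assumes two-way mirrors, treats 1TB drives as exactly 1.0 TB, ignores ZFS metadata overhead and TB/TiB differences, and takes the 80% ceiling from the linked docs as given. The function names are made up for illustration.

```python
def striped_mirror_capacity_tb(num_drives, drive_tb, mirror_width=2):
    """Usable capacity of a pool built from equal-sized mirror vdevs.

    Each mirror contributes the capacity of a single drive, so the pool
    holds (num_drives / mirror_width) * drive_tb before any overhead.
    """
    if num_drives % mirror_width != 0:
        raise ValueError("drive count must be a multiple of the mirror width")
    return (num_drives // mirror_width) * drive_tb


def max_zvol_tb(pool_tb, fill_fraction=0.8, reserved_tb=0.0):
    """Largest zvol under a fill ceiling, after setting aside other zvols."""
    return pool_tb * fill_fraction - reserved_tb


if __name__ == "__main__":
    witness_tb = 0.001  # the 1GB witness zvol from step 1

    today = striped_mirror_capacity_tb(24, 1.0)     # current 24 SSDs
    expanded = striped_mirror_capacity_tb(48, 1.0)  # after adding 24 more

    print(f"pool today:      {today:.1f} TB")
    print(f"pool expanded:   {expanded:.1f} TB")
    print(f"zvol cap today:  {max_zvol_tb(today, reserved_tb=witness_tb):.3f} TB")
    print(f"zvol cap later:  {max_zvol_tb(expanded, reserved_tb=witness_tb):.3f} TB")
```

Under those assumptions the 24-drive pool comes to about 12 TB and the 48-drive pool to about 24 TB, so an 80% ceiling would leave roughly 9.6 TB and 19.2 TB for HyperV-SSD1, less the witness disk.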

Thanks!

Mike
