Scampicfx (Contributor) | Joined: Jul 4, 2016 | Messages: 125

Dear guys,

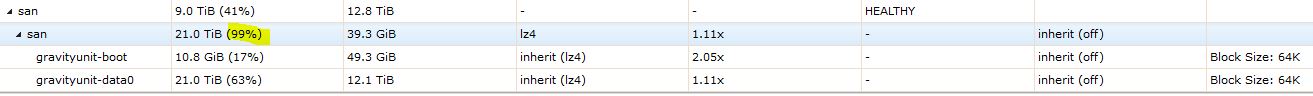

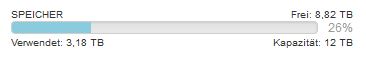

I know there are tons of threads out there discussing the "used" and "available" storage stats in the FreeNAS web GUI. Although I spent hours reading them, I still cannot answer my questions regarding zvol usage.

What pushed me to look into these stats in more detail is that tonight my snapshot tasks failed with "out of space" errors.

The "san" zpool in the first picture above houses two zvols that serve ESXi. The zpool consists of 3 mirrored vdevs, each containing 2x 8 TB 4Kn disks. This gives a usable capacity of 24 TB (48 TB raw). Additionally, there is one PCIe SSD used as SLOG, with a configured capacity of 20 GB.

I think I understand "used" and "available" correctly for my RAIDZ2 / RAIDZ3 pools. However, for my iSCSI zvol pool (see picture above), I don't fully understand these 4 lines... xD So, to be sure:

- First line: 9.0 TiB (41%) | 12.8 TiB

9.0 TiB + 12.8 TiB = 21.8 TiB

The zpool consists of 2x 8 TB (mirror) + 2x 8 TB (mirror) + 2x 8 TB (mirror). This results in 48 TB raw capacity and 24 TB of usable storage, which equals 21.8 TiB. So everything should be fine at this point.
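The TB-to-TiB conversion above can be checked with a few lines of Python. This is a sketch assuming nominal 8 TB (10^12-byte) disks; real drives are not exactly 8 TB and ZFS keeps some space for itself, so treat the result as an estimate rather than what the GUI must show.

```python
# Sketch: capacity of the "san" pool, assuming 3 two-way mirror vdevs of
# nominal 8 TB disks (disk labels use decimal units).
TB = 10**12    # decimal terabyte, as printed on disk labels
TIB = 2**40    # binary tebibyte, as used by ZFS and the FreeNAS GUI

disk_bytes = 8 * TB
vdevs = 3
disks_per_mirror = 2

raw_bytes = vdevs * disks_per_mirror * disk_bytes  # all six disks
usable_bytes = vdevs * disk_bytes                  # each mirror vdev stores one copy

print(f"raw:    {raw_bytes / TB:.0f} TB = {raw_bytes / TIB:.1f} TiB")
print(f"usable: {usable_bytes / TB:.0f} TB = {usable_bytes / TIB:.1f} TiB")

# First GUI line: used + available
gui_total_tib = 9.0 + 12.8
print(f"GUI used + available: {gui_total_tib:.1f} TiB")
```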

However, FYI:

For my other RAIDZ2 / RAIDZ3 pools, this first line represents the raw capacity of all disks.

For this san zpool (see picture above), it is not the raw capacity.

I guess the reason is that no parity is used in this zpool. Is this correct?
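A minimal sketch of this guess, assuming the pool-level (first) line counts every disk of a RAIDZ vdev, parity included, while a mirror vdev counts only the size of one member. The helper `pool_level_size_tb` and the 6-disk RAIDZ2 example are hypothetical and not taken from my other pools.

```python
# Hypothetical model of what the pool-level line reports for one vdev.
# ASSUMPTION: RAIDZ vdevs are counted raw (parity included), mirrors as one member.
def pool_level_size_tb(vdev_type, disks, disk_tb):
    if vdev_type == "mirror":
        return disk_tb               # all members hold the same data
    if vdev_type.startswith("raidz"):
        return disks * disk_tb       # parity still included at this level
    raise ValueError(f"unknown vdev type: {vdev_type}")

# san: three 2-way mirrors of 8 TB disks
san = sum(pool_level_size_tb("mirror", 2, 8) for _ in range(3))
print(f"san (3x 2-way mirror of 8 TB): {san} TB")

# Hypothetical comparison: one 6x 8 TB RAIDZ2 vdev
print(f"6x 8 TB RAIDZ2 vdev: {pool_level_size_tb('raidz2', 6, 8)} TB")
```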

Regarding the first-line statistics of a zpool, I can also recommend this post by @jgreco: "Is my available space correct?"

- Second line: 21.0 TiB (99%) | 39.3 GiB

What I think I understand: a usage of 21.0 TiB (data0) plus 10.8 GiB (boot) adds up to about 21.0 TiB. This is nearly the complete capacity of the whole zpool, so 99% is used, correct?

Because 99% is used, only 39.3 GiB is shown as "available", correct?
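As a cross-check of the percentages, here is a small Python sketch. It assumes the GUI percentage is used / (used + available) for that line, rounded down; this formula is my assumption, but it is consistent with all four lines of the screenshot. The root's "used" is approximated as data0 + boot, as in my reasoning above.

```python
import math

GIB = 1 / 1024   # one GiB expressed in TiB

# (used, available) in TiB and the percentage shown, per line of the screenshot.
gui_lines = {
    "san pool":   (9.0, 12.8, 41),
    "san root":   (21.0 + 10.8 * GIB, 39.3 * GIB, 99),  # used approximated as data0 + boot
    "boot zvol":  (10.8 * GIB, 49.3 * GIB, 17),
    "data0 zvol": (21.0, 12.1, 63),
}

for name, (used, avail, shown) in gui_lines.items():
    # ASSUMPTION: GUI percentage = used / (used + available), rounded down.
    pct = 100 * used / (used + avail)
    print(f"{name:11} {pct:6.2f} % -> {math.floor(pct)} % (GUI shows {shown} %)")
```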

- Third line: 10.8 GiB (17%) | 49.3 GiB

This is a small 10G zvol. It serves as the boot volume for ESXi.

```
# zfs get used,available,referenced,quota,usedbysnapshots,usedbydataset,written,logicalused san/gravityunit-boot
NAME                  PROPERTY         VALUE  SOURCE
san/gravityunit-boot  used             10.8G  -
san/gravityunit-boot  available        49.3G  -
san/gravityunit-boot  referenced       493M   -
san/gravityunit-boot  quota            -      -
san/gravityunit-boot  usedbysnapshots  313M   -
san/gravityunit-boot  usedbydataset    493M   -
san/gravityunit-boot  written          6.18M  -
san/gravityunit-boot  logicalused      1.60G  -
```
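To do arithmetic on this output, the human-readable sizes have to be converted first. A sketch, assuming the K/M/G/T/P suffixes printed by zfs are powers of 1024 and that the printed values are rounded; `zfs get -p` prints exact byte counts and avoids the rounding. `parse_zfs_size` is a hypothetical helper, not part of any ZFS tooling.

```python
# Hypothetical helper: convert zfs human-readable sizes to bytes.
# ASSUMPTION: suffixes are binary (1K = 1024 bytes); printed values are rounded.
SUFFIX_POWER = {"K": 1, "M": 2, "G": 3, "T": 4, "P": 5}

def parse_zfs_size(text):
    """Return bytes for strings like '10.8G', '493M' or '6.18M'; None for '-'."""
    text = text.strip()
    if text in ("-", "none"):
        return None
    suffix = text[-1].upper()
    if suffix in SUFFIX_POWER:
        return float(text[:-1]) * 1024 ** SUFFIX_POWER[suffix]
    return float(text)   # already a plain byte count

# Values for san/gravityunit-boot, converted to GiB
boot = {"used": "10.8G", "usedbydataset": "493M", "usedbysnapshots": "313M"}
gib = {k: parse_zfs_size(v) / 1024**3 for k, v in boot.items()}
print({k: round(v, 3) for k, v in gib.items()})
```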

What I think I understand: I guess 10.8 GiB are used because the zvol itself has a capacity of 10G. Additionally, there are some snapshots of this zvol with only minor changes, so they consume little space. Together this results in a total usage of 10.8 GiB.

What I don't understand: why are 49.3 GiB available? How is this calculated?
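The boot zvol numbers can be checked against the identity documented in the zfsprops man page, used = usedbychildren + usedbydataset + usedbyrefreservation + usedbysnapshots (usedbychildren is zero for a zvol). The sketch also assumes that ZFS adds a zvol's unused refreservation to the "available" it reports; that part is an assumption worth confirming with `zfs get usedbyrefreservation,refreservation san/gravityunit-boot`. The 10.0G REFRESERV is taken from the `zfs list` output further down.

```python
# Values for san/gravityunit-boot in GiB, copied from the zfs output.
MIB = 1 / 1024               # one MiB expressed in GiB (zfs suffixes are binary)
used = 10.8
usedbydataset = 493 * MIB
usedbysnapshots = 313 * MIB
refreservation = 10.0        # REFRESERV from `zfs list`
parent_avail = 39.3          # "available" of the san root dataset (second GUI line)

# Documented identity: used = usedbychildren + usedbydataset
#                             + usedbyrefreservation + usedbysnapshots
# usedbychildren is 0 for a zvol, so the remainder is usedbyrefreservation.
usedbyrefreservation = used - usedbydataset - usedbysnapshots

# ASSUMPTION: the zvol's "available" = parent's available + its unused refreservation.
implied_avail = parent_avail + usedbyrefreservation

print(f"implied usedbyrefreservation: {usedbyrefreservation:.2f} GiB (REFRESERV {refreservation} GiB)")
print(f"implied available:            {implied_avail:.1f} GiB (zfs shows 49.3G)")
```

If the assumption holds, the "missing" ~10 GiB of used space is the unused part of the 10.0G REFRESERV, and 49.3 GiB is the root's 39.3 GiB plus that same ~10 GiB.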

- Fourth line: 21.0 TiB (63%) | 12.1 TiB

This is a 12T zvol. It serves as the data volume for ESXi.

```
# zfs get used,available,referenced,quota,usedbysnapshots,usedbydataset,written,logicalused san/gravityunit-data0
NAME                   PROPERTY         VALUE  SOURCE
san/gravityunit-data0  used             21.0T  -
san/gravityunit-data0  available        12.1T  -
san/gravityunit-data0  referenced       2.52T  -
san/gravityunit-data0  quota            -      -
san/gravityunit-data0  usedbysnapshots  6.47T  -
san/gravityunit-data0  usedbydataset    2.52T  -
san/gravityunit-data0  written          21.1G  -
san/gravityunit-data0  logicalused      9.98T  -
```

What I don't understand: how is it possible for this volume to use 21.0 TiB?

Adding up referenced + usedbysnapshots + usedbydataset + written + logicalused doesn't give 21.0 TiB... what am I missing?
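Applying the same decomposition to data0 is a useful sanity check. In this output referenced and usedbydataset both report the same 2.52T, written only counts data written since the previous snapshot, and logicalused is measured before compression, so summing all five mixes overlapping quantities. The sketch assumes used = usedbydataset + usedbysnapshots + usedbyrefreservation (a zvol has no children) and takes the 12.0T REFRESERV from the `zfs list` output below.

```python
# Values for san/gravityunit-data0 in TiB, copied from the zfs output.
used = 21.0
usedbydataset = 2.52       # same value as "referenced" here
usedbysnapshots = 6.47
refreservation = 12.0      # REFRESERV from `zfs list`

# ASSUMED identity for a zvol (no children):
#   used = usedbydataset + usedbysnapshots + usedbyrefreservation
usedbyrefreservation = used - usedbydataset - usedbysnapshots
allocated = usedbydataset + usedbysnapshots   # live data plus snapshot-held blocks

print(f"implied usedbyrefreservation: {usedbyrefreservation:.2f} TiB (REFRESERV {refreservation} TiB)")
print(f"blocks actually allocated:    {allocated:.2f} TiB")
```

The allocated part (about 8.99 TiB) roughly matches the 9.0 TiB "used" in the first (pool) line, which would fit the idea that the rest of the 21.0 TiB is reservation rather than written data.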

The "real" usage of this 12T data0 zvol is 3.18 TB, as you can see in the second screenshot above.

What I understand: I received the "out of space" error tonight because the "available" stat was below 12 TiB. So I just deleted some snapshots, and now "available" shows 12.1 TiB. This means the next snapshot should work, but the one after that might fail again ;)

I learned this from this thread: https://forums.freenas.org/index.ph...space-outputs-to-fix-failing-snapshots.52740/

When typing ...

```
zfs list -o name,quota,refquota,reservation,refreservation
```

... I notice that my zvol data0 has a REFRESERV of 12T.

```
NAME                   QUOTA  REFQUOTA  RESERV  REFRESERV
san                    none   none      none    none
san/gravityunit-boot   -      -         none    10.0G
san/gravityunit-data0  -      -         none    12.0T
```

This means a snapshot can only be taken when at least 12.0T are free.

I have to admit, I really like this REFRESERV feature, because it guarantees that 12T can be written to this zvol at any time :) However, I hope that writes from iSCSI clients are not blocked by this feature and that only snapshots are affected by it. Is that correct?

However, what I don't understand: when this zvol is "using" 21.0 TiB, how can there still be 12.1 TiB available when the whole zpool only offers a total capacity of 24 TB (21.8 TiB)? Furthermore, this zvol only has a size of 12T. I don't understand the math ;)
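The same assumption can be applied to the "available" value of data0: the parent's 39.3 GiB plus the unused part of the REFRESERV. This is a sketch with values rounded to three significant digits, so only rough agreement with the 12.1T shown by ZFS should be expected. If the assumption holds, the roughly 12 TiB of unused reservation appears in both "used" and "available", which is how the two can add up to more than the pool size.

```python
# Values in TiB from the GUI and zfs output.
parent_avail = 39.3 / 1024                    # "available" of the san root (39.3 GiB)
usedbyrefreservation = 21.0 - 2.52 - 6.47     # implied unused part of the 12T REFRESERV
allocated = 2.52 + 6.47                       # live data + snapshots actually on disk

# ASSUMPTION: the unused refreservation is counted in the zvol's "used"
# AND added to its "available", so used + available can exceed the pool.
data0_avail = parent_avail + usedbyrefreservation

print(f"implied available for data0: {data0_avail:.2f} TiB (zfs shows 12.1T)")
print(f"used = {allocated:.2f} allocated + {usedbyrefreservation:.2f} reserved (TiB)")
```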

Well... there are a lot of questions in this post. Thanks for reading!
