ITOperative (Dabbler · Joined Feb 11, 2023 · Messages: 20)

Hey guys,

I had two drives failing on long SMART testing, so I decided to swap them out for replacement drives.

Since the drives were in separate vdevs (both raidz2), I popped them both in and started them both on replacement.

I waited for resilvering to hit 100%, and in vdev1, da1 was replaced and seems to be fine.

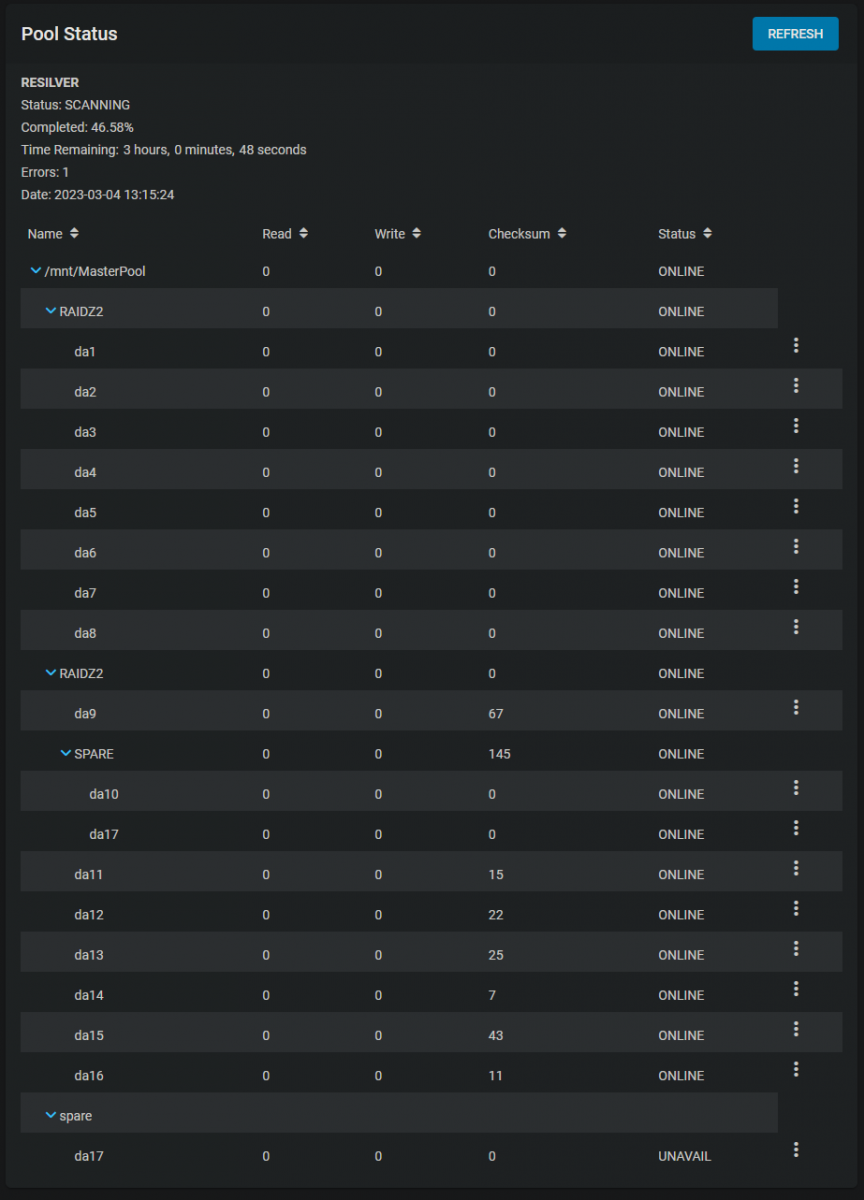

However, resilvering now shows 46.4% as of writing, and I noticed it reports "Errors: 1". When I checked vdev2, it shows two spares instead of one (da10 is now listed as a spare), da17 under the spare section shows as unavailable, and every other drive in vdev2 shows checksum errors.

Additionally, I checked my notifications and there are no new errors listed.
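
Since the notifications show nothing, the per-device counters can be cross-checked against the raw `zpool status -v` output rather than the UI. Below is a minimal sketch, not a definitive tool: the pool name `tank` is a placeholder, the helper names are made up for illustration, and it assumes the usual `NAME STATE READ WRITE CKSUM` column layout. It only looks at rows that carry counters, so spare entries such as an unavailable da17 would still need to be read by eye.

```python
import re
import subprocess

# Counters are normally plain integers; large values may be abbreviated (e.g. 1.2K).
COUNTER = re.compile(r"^\d+(\.\d+)?[KMGTPE]?$")


def read_status(pool="tank"):
    """Run `zpool status -v` for a pool ("tank" is a placeholder name)."""
    result = subprocess.run(
        ["zpool", "status", "-v", pool],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def parse_config_rows(status_text):
    """Return (name, state, read, write, cksum) for rows that carry counters."""
    rows = []
    in_config = False
    for line in status_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("NAME") and "STATE" in stripped:
            in_config = True          # header of the device table
            continue
        if not in_config:
            continue
        if stripped.startswith("errors:"):
            break                     # end of the device table
        parts = stripped.split()
        # Spare entries and blank lines have no numeric counters; skip them.
        if len(parts) >= 5 and all(COUNTER.match(c) for c in parts[2:5]):
            rows.append(tuple(parts[:5]))
    return rows


def rows_needing_attention(rows):
    """Keep rows that are not ONLINE or have any non-zero error counter."""
    return [r for r in rows if r[1] != "ONLINE" or any(c != "0" for c in r[2:5])]
```

Calling `rows_needing_attention(parse_config_rows(read_status("tank")))` on the NAS itself would list the devices worth a closer look; comparing that list before and after the resilver finishes shows whether the checksum counts are still climbing.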

What on earth happened? Does anyone know what might be going on?

I saw similar checksum issues before I swapped my PERC H730P for an H330 Mini, but it has been fine since.

I can only assume something went bad and it had to pull the spare in to help resilver?

Here's a picture of my pool, which oddly doesn't show as being degraded:

Any ideas as to what is going on, and how concerned I should be, would be greatly appreciated!
