Paul Morris

Running FreeNAS 9.3-STABLE-201601181840 on an iX-systems 24-bay filer.

I just added 11 new drives to the 13 drives I had originally purchased with the machine. I'm trying to extend the original pool and I'm getting some odd behavior in the Volume Manager. Here is the original configuration:

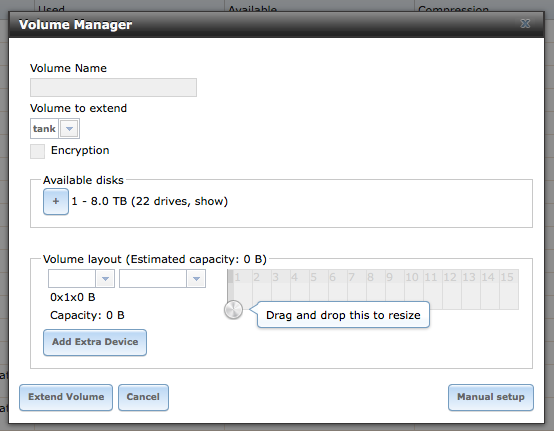

I want to extend the pool with the 11 new disks as a single raidz2 vdev. When I go into the Volume Manager and select "tank" as the volume to extend, it shows the following information:

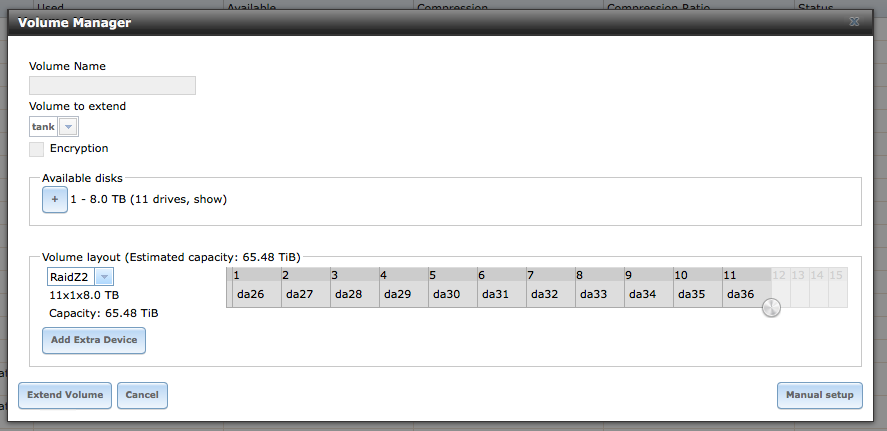

I'm presented with all 22 disks as available. If I select the first 11 disks, it seems to reference the first 11 disks in the array and not the 11 new drives I just installed:

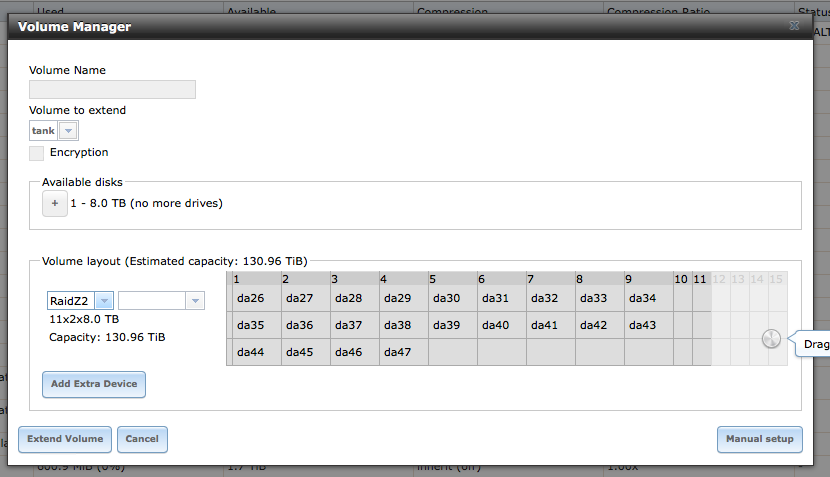

If I click the + button under available disks, all 22 disks are added to the volume in an 11x2x8.0TB configuration:

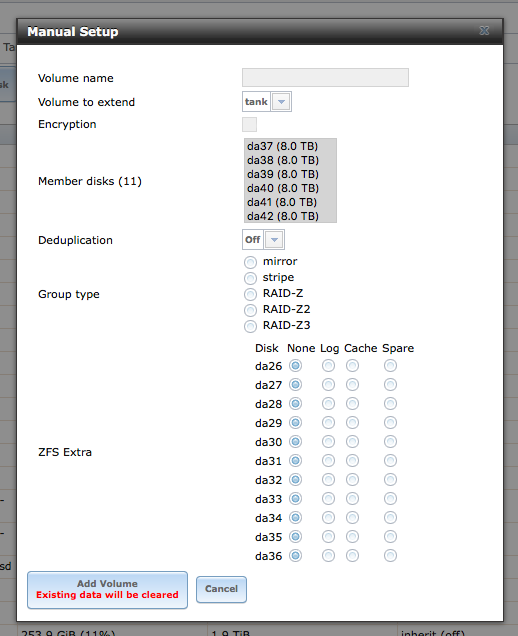

This is a bit unnerving because it looks like the Volume Manager has no idea about the underlying ZFS structure already in place and is about to wipe out all the data currently on the pool. If I try this manually, I'm still presented with all the disks as if they were available. I can select the last 11 drives:

I'm not sure what will happen here if I select add volume. For some reason this just doesn't look right. Am I doing something wrong in extending this volume, or is the GUI out of sync with the underlying ZFS system?

Code:

```
[root@anas2] /mnt/tank# zpool status tank
  pool: tank
 state: ONLINE
  scan: scrub repaired 0 in 26h12m with 0 errors on Mon Aug 22 02:12:56 2016
config:

    NAME                                            STATE     READ WRITE CKSUM
    tank                                            ONLINE       0     0     0
      raidz2-0                                      ONLINE       0     0     0
        gptid/0bb6b55d-c6ab-11e5-9be4-0cc47a204b38  ONLINE       0     0     0
        gptid/0c23d38a-c6ab-11e5-9be4-0cc47a204b38  ONLINE       0     0     0
        gptid/0c8c6999-c6ab-11e5-9be4-0cc47a204b38  ONLINE       0     0     0
        gptid/0cf6f473-c6ab-11e5-9be4-0cc47a204b38  ONLINE       0     0     0
        gptid/0d63d000-c6ab-11e5-9be4-0cc47a204b38  ONLINE       0     0     0
        gptid/0dd36558-c6ab-11e5-9be4-0cc47a204b38  ONLINE       0     0     0
      raidz2-2                                      ONLINE       0     0     0
        gptid/57a455b2-c6ab-11e5-9be4-0cc47a204b38  ONLINE       0     0     0
        gptid/5816fdad-c6ab-11e5-9be4-0cc47a204b38  ONLINE       0     0     0
        gptid/58820272-c6ab-11e5-9be4-0cc47a204b38  ONLINE       0     0     0
        gptid/58fc2a4d-c6ab-11e5-9be4-0cc47a204b38  ONLINE       0     0     0
        gptid/596aae61-c6ab-11e5-9be4-0cc47a204b38  ONLINE       0     0     0
        gptid/59d60b15-c6ab-11e5-9be4-0cc47a204b38  ONLINE       0     0     0
    logs
      gptid/0e4e13f7-c6ab-11e5-9be4-0cc47a204b38    ONLINE       0     0     0
    cache
      gptid/0e085869-c6ab-11e5-9be4-0cc47a204b38    ONLINE       0     0     0
    spares
      gptid/6b6a49c2-c6ab-11e5-9be4-0cc47a204b38    AVAIL

errors: No known data errors
```
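For cross-checking what the Volume Manager offers against what ZFS already uses, here is a minimal sketch (not part of FreeNAS) that groups the `gptid/...` members of a saved `zpool status` listing by vdev. The function name `pool_members` and the shortened `SAMPLE` text are assumptions made for illustration; the gptids are copied from the output above.

```python
# Hypothetical helper: group gptid members of `zpool status` text by vdev/section.
SAMPLE = """\
  pool: tank
 state: ONLINE
config:

    NAME                                            STATE     READ WRITE CKSUM
    tank                                            ONLINE       0     0     0
      raidz2-0                                      ONLINE       0     0     0
        gptid/0bb6b55d-c6ab-11e5-9be4-0cc47a204b38  ONLINE       0     0     0
        gptid/0c23d38a-c6ab-11e5-9be4-0cc47a204b38  ONLINE       0     0     0
    logs
      gptid/0e4e13f7-c6ab-11e5-9be4-0cc47a204b38    ONLINE       0     0     0
    spares
      gptid/6b6a49c2-c6ab-11e5-9be4-0cc47a204b38    AVAIL

errors: No known data errors
"""


def pool_members(status_text):
    """Return {section name: [gptid devices]} parsed from zpool status text."""
    members = {}
    current = None
    in_config = False
    for raw in status_text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("NAME"):
            # Header row of the config table; device rows follow.
            in_config = True
            continue
        if line.startswith("errors:"):
            break
        if not in_config:
            continue
        name = line.split()[0]
        if name.startswith("gptid/"):
            if current is not None:
                members[current].append(name)
        else:
            # Pool name, vdev name (raidz2-0), or section (logs, cache, spares).
            current = name
            members.setdefault(name, [])
    # Drop headings that list no devices directly, such as the pool name.
    return {name: devs for name, devs in members.items() if devs}


if __name__ == "__main__":
    for section, devices in pool_members(SAMPLE).items():
        print(section, len(devices), "device(s)")
        for dev in devices:
            print("   ", dev)
```

The resulting gptids can then be matched to device names with `glabel status` on the FreeNAS shell, which should make it clear which of the disks listed in the GUI are already pool members.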
