Sawtaytoes (Patron, joined Jul 9, 2022, 221 messages)

I noticed some size discrepancies that I'd like to figure out regarding ZFS. From what I'm seeing, it's eating terabytes (tebibytes) of disk space.

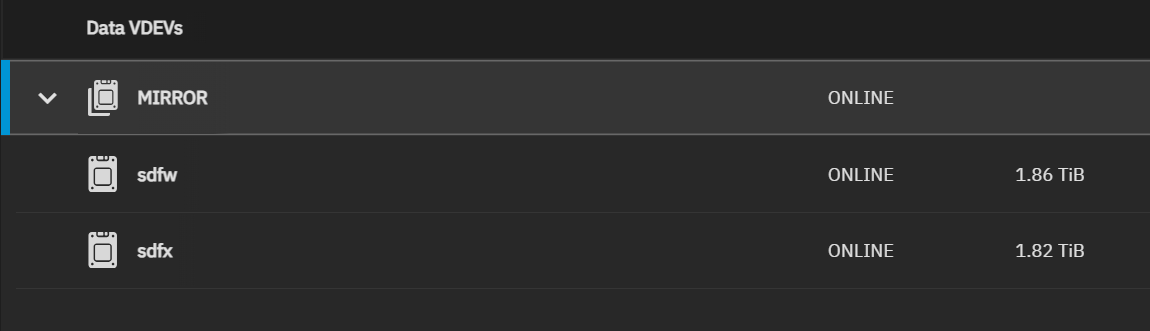

I have 2 x 2TB drives. That's 1.82 TiB per drive. If I mirror them, I'd expect to get 1.82 TiB of usable capacity:

I understand that there's 2GB of swap space reserved, but at 1,000GB per TB, that's a negligible 0.002TB.
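Here's a rough sketch of that conversion, assuming the "2TB" on the drive label means 2 × 10^12 bytes (decimal) and that the swap partition is exactly the 2GB mentioned above. It shows where the 1.82 TiB figure comes from and how little the swap partition takes away:

```python
# Drive labels use decimal units; ZFS and TrueNAS report binary units (TiB).
TB = 10**12    # terabyte
GB = 10**9     # gigabyte
TIB = 2**40    # tebibyte


def bytes_to_tib(n_bytes: float) -> float:
    """Convert a byte count to tebibytes."""
    return n_bytes / TIB


drive_tib = bytes_to_tib(2 * TB)   # one "2TB" drive
swap_tib = bytes_to_tib(2 * GB)    # assumed 2GB swap partition per drive

# A two-way mirror keeps a full copy on each drive, so usable space is
# roughly one drive's worth, minus that drive's swap partition.
mirror_tib = drive_tib - swap_tib

print(f"drive:  {drive_tib:.3f} TiB")   # 1.819
print(f"swap:   {swap_tib:.4f} TiB")    # 0.0018
print(f"mirror: {mirror_tib:.3f} TiB")  # 1.817
```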

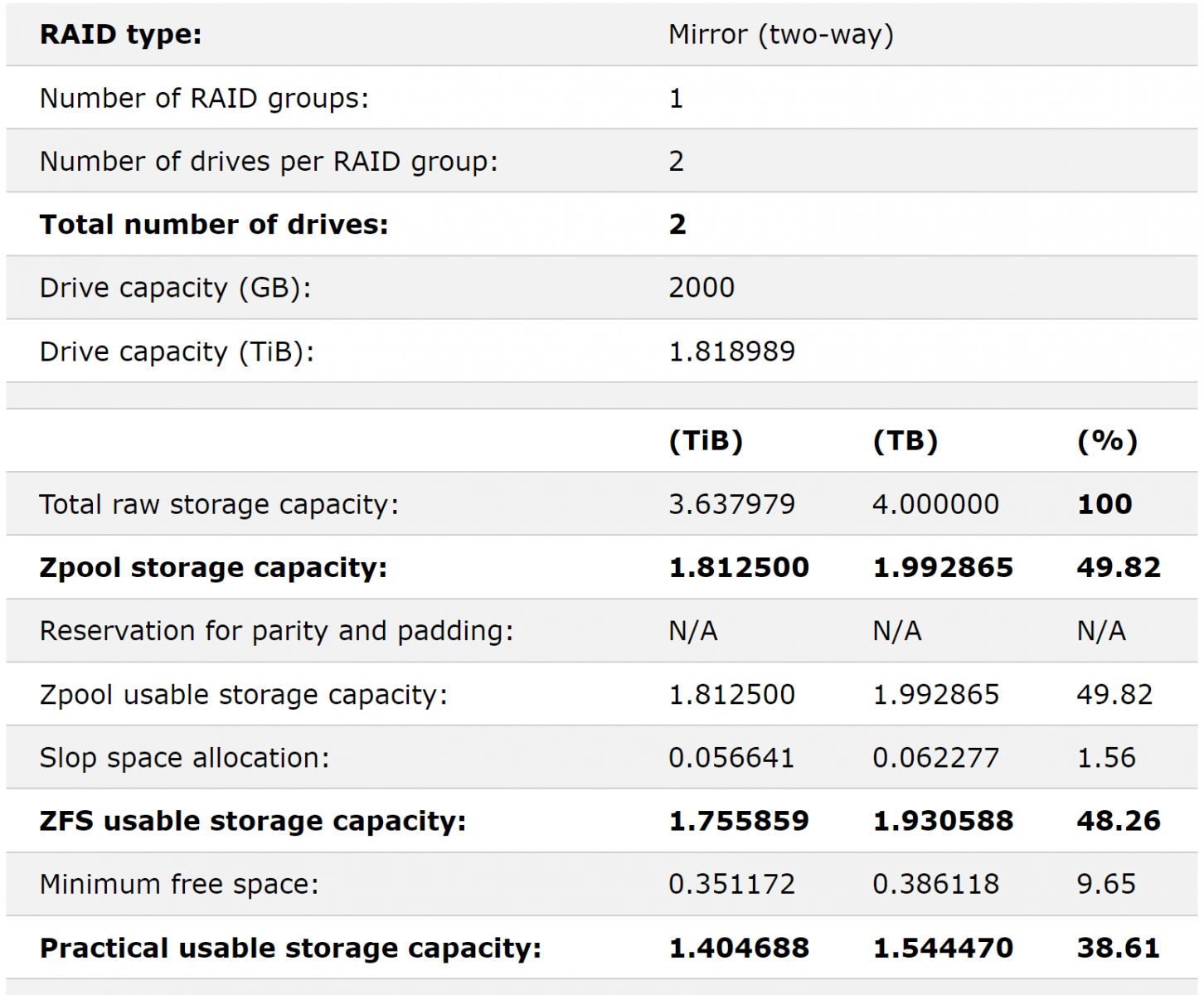

We can verify that with the OpenZFS calculator (https://www.truenas.com/community/resources/openzfs-capacity-calculator.185/):

It says that because of slop (whatever that is), I should only get 1.76 TiB of usable capacity, or about 1.4 TiB if I avoid going over 80% capacity.
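The calculator's numbers can be reproduced with a simple sketch, assuming slop is a flat 1/32 (3.125%) of the pool and nothing else is subtracted for a plain mirror. This is only an approximation of what the calculator does, not its actual code:

```python
DRIVE_TIB = 2 * 10**12 / 2**40   # one 2TB drive, about 1.819 TiB

SLOP_SHIFT = 5                   # assumption: slop = pool / 2**5 = 3.125%


def usable_after_slop(pool_tib: float, slop_shift: int = SLOP_SHIFT) -> float:
    """Capacity left after subtracting a flat 1/2**slop_shift of slop space."""
    slop = pool_tib / (1 << slop_shift)
    return pool_tib - slop


usable = usable_after_slop(DRIVE_TIB)
at_80_percent = usable * 0.80

print(f"after slop:  {usable:.2f} TiB")         # 1.76
print(f"80% of that: {at_80_percent:.2f} TiB")  # 1.41
```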

But what do I actually see?

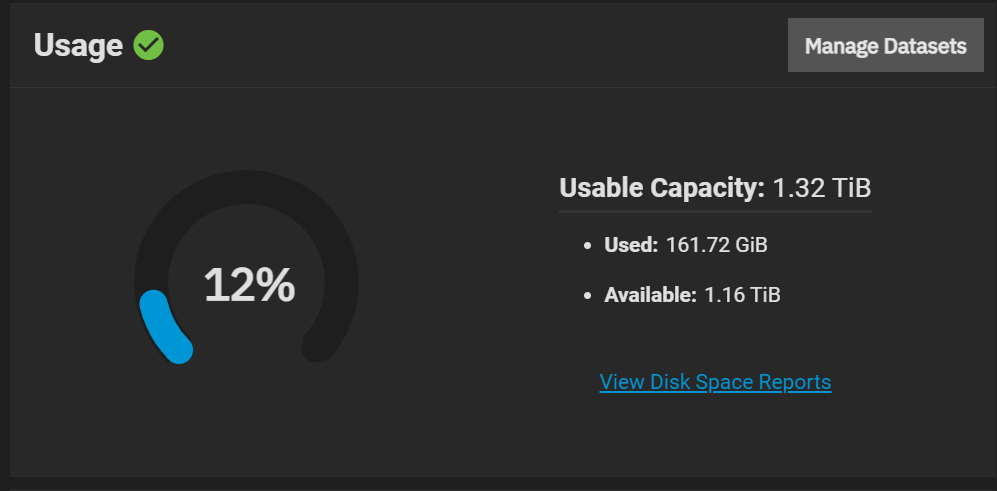

1.32 TiB of usable capacity. WHAT? That's 73% of the total capacity. What's going on here?

TrueNAS will start emailing me as I get close to 80%, but I'm already down 27%! That means by the time I get the 80% warning, I'll only be using 58% of the drive's capacity.
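The percentages above work out as follows, assuming the expected capacity is one full 2TB drive (about 1.819 TiB) and the 80% figure is the point where TrueNAS starts warning:

```python
EXPECTED_TIB = 2 * 10**12 / 2**40   # one 2TB drive, about 1.819 TiB
OBSERVED_TIB = 1.32                 # usable capacity TrueNAS shows for the mirror
ALERT_THRESHOLD = 0.80              # usage level where the warning emails start

fraction_usable = OBSERVED_TIB / EXPECTED_TIB
fraction_at_alert = fraction_usable * ALERT_THRESHOLD

print(f"usable:   {fraction_usable:.0%} of the drive")    # 73%
print(f"missing:  {1 - fraction_usable:.0%}")             # 27%
print(f"at alert: {fraction_at_alert:.0%} of the drive")  # 58%
```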

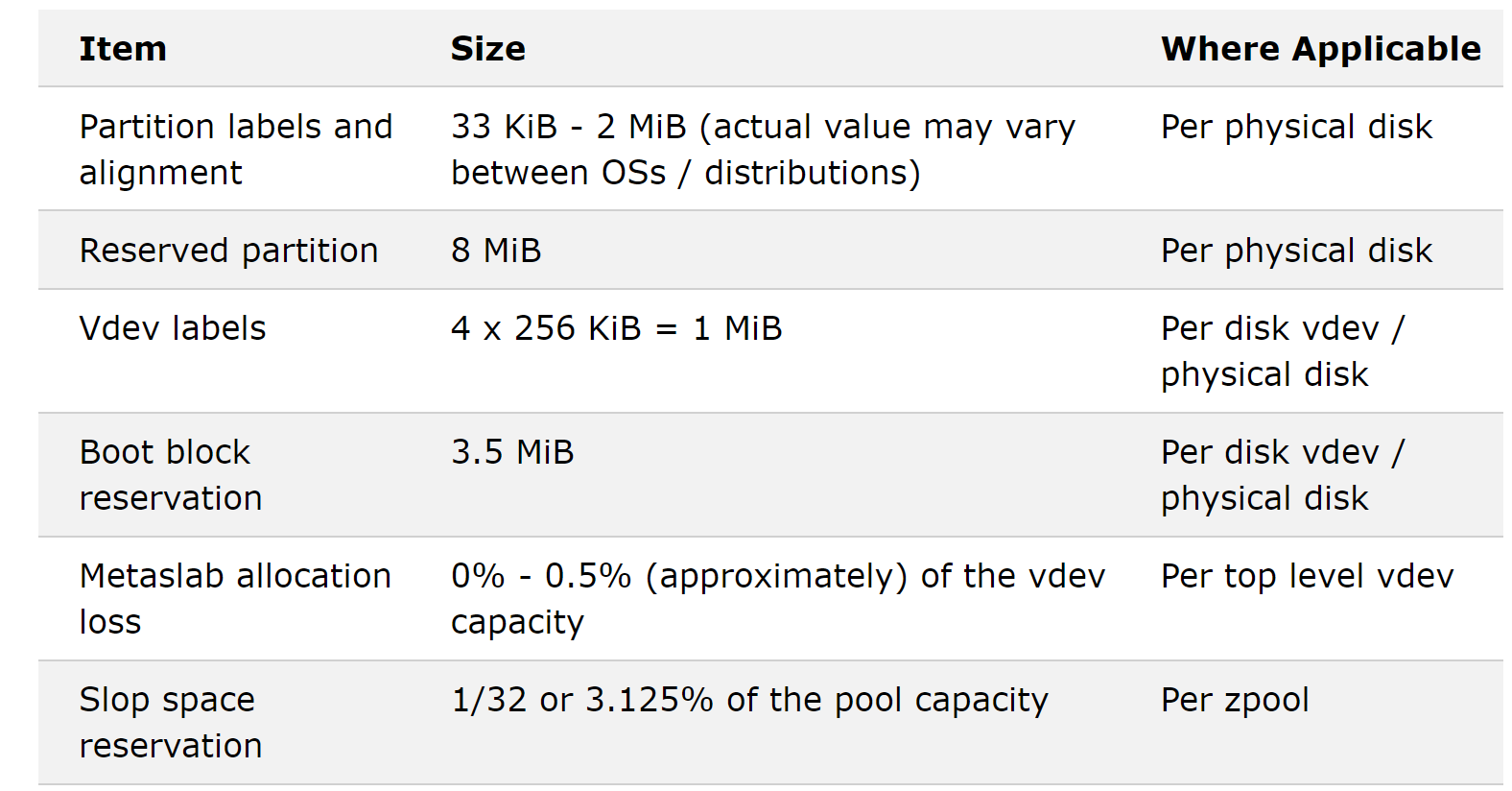

I saw an article about all the space a zpool sets aside, including slop space, which takes up 3.125%. It's by the same person who made that calculator:

Even with the right numbers, none of this adds up.

It's worse with my dRAID zpools. Even after applying the dRAID capacity calculation to my TiB figures, I'm losing ~10 TiB for every 100 TiB; that's nearly 10%!

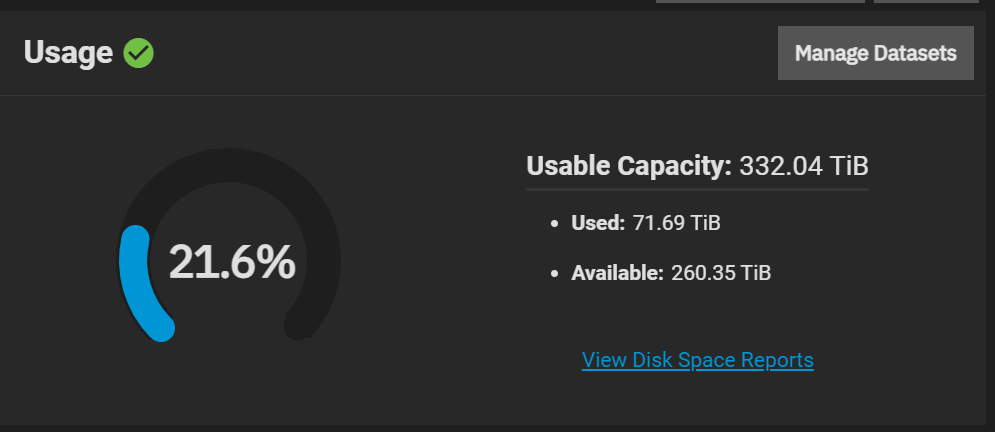

Even `zpool get size` doesn't show them the same:

```
# zpool get size
NAME          PROPERTY  VALUE  SOURCE
Bunnies       size      329T   -
TrueNAS-Apps  size      1.36T  -
Wolves        size      511T   -
boot-pool     size      55G    -
```

All these numbers are off. Wolves is made up of 60 x 9.1 TiB drives (546 TiB), but with this dRAID2:5d:15c:1s config, it should have 364 TiB of usable space. I can understand losing a few TiB to rounding errors, but the available space is only 332 TiB, a loss of 32 TiB!
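The 364 TiB expectation can be sketched like this. It assumes the 60 drives are split into four dRAID vdevs of 15 children each (which is what `15c` with 60 drives implies), that each vdev's one distributed spare removes one drive's worth of space, and that every redundancy group stores 5 data sectors for every 2 parity sectors:

```python
DRIVE_TIB = 9.1      # per-drive capacity as quoted above
TOTAL_DRIVES = 60

# dRAID2:5d:15c:1s = 2 parity, 5 data per group, 15 children, 1 distributed spare
PARITY, DATA, CHILDREN, SPARES = 2, 5, 15, 1

vdevs = TOTAL_DRIVES // CHILDREN                  # assumption: 4 vdevs of 15 drives
non_spare = vdevs * (CHILDREN - SPARES)           # drives' worth not held for spares
data_drives = non_spare * DATA / (DATA + PARITY)  # drives' worth holding data

raw_tib = TOTAL_DRIVES * DRIVE_TIB
expected_tib = data_drives * DRIVE_TIB
reported_tib = 332.0                              # available space shown for Wolves
lost_tib = expected_tib - reported_tib

print(f"raw:      {raw_tib:.0f} TiB")       # 546
print(f"expected: {expected_tib:.0f} TiB")  # 364
print(f"lost:     {lost_tib:.0f} TiB ({lost_tib / expected_tib:.1%})")  # 32 TiB (8.8%)
```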

TrueNAS will error at 80% usage, but I've already lost 9% before writing anything. So at 80% I'm left with only 266 TiB, where I should've had 291 TiB. That's still about a 25 TiB difference, not counting the ~10 TiB of slop.
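A quick check of the 80% figures, taking the 364 TiB expected and 332 TiB reported capacities above as given:

```python
EXPECTED_TIB = 364.0   # usable capacity expected from the dRAID layout
REPORTED_TIB = 332.0   # usable capacity actually reported for Wolves
THRESHOLD = 0.80       # usage level at which TrueNAS complains

lost_fraction = 1 - REPORTED_TIB / EXPECTED_TIB
expected_at_80 = EXPECTED_TIB * THRESHOLD
reported_at_80 = REPORTED_TIB * THRESHOLD

print(f"lost up front: {lost_fraction:.0%}")                        # 9%
print(f"80% expected:  {expected_at_80:.0f} TiB")                   # 291
print(f"80% reported:  {reported_at_80:.0f} TiB")                   # 266
print(f"difference:    {expected_at_80 - reported_at_80:.1f} TiB")  # 25.6
```

Unrounded, the gap at 80% is 25.6 TiB; subtracting the rounded figures (291 - 266) gives 25.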

What's going on here? Where'd all my drive capacity go?
