pillbox

Cadet

- Joined

- Aug 18, 2019

- Messages

- 1

I had been running a FreeNAS 11-STABLE system for a while. While trying to update my Plex Media Server jail, I discovered that the new package requires 11.2 or newer (something about libdl.so.1).

After going to the System -> Update and selected 11.2-STABLE, clicked the buttons to check for updates and then installed. The update took about an hour total after that.

When it came back up, I noticed that one of my pools was showing to be invalid. This particular pool is not important and it has a history of health issues. It's called "das-temp" and is composed of 10x 500G harddrives. I was using it to move around several hundred gigabytes of data; I don't think there's anything important on it.

I removed the pool (preserving the data and configuration) and tried to import it. Importing through the WebUI doesn't show it as an option; importing it via SSH CLI shows that it's missing 3 volumes. The disks in this pool are unreliable and after each reboot, I have to resliver back and forth between two or three different disks which means I expected to see only 1. There are a total of 12 500G drives in the system, so each time I reboot, the pool is important as 11 disks and I resliver onto one of the spare disks (yes, it's a mess but it was a stopgap solution)

What has me confused now is that in the WebUI, two of the drives that are listed as 500G show up as members of "Boot Pool". The system should be booting from a pair of 32G USB drives.

I really am not sure what's happening. It's almost as if the WebUI sees something different than camcontrol devlist does:

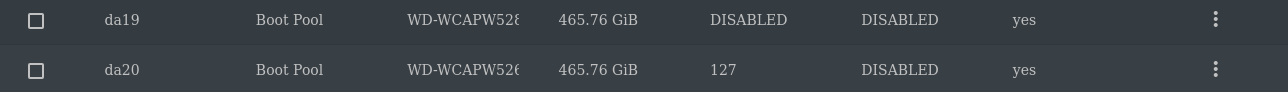

da19 and da20 showing as

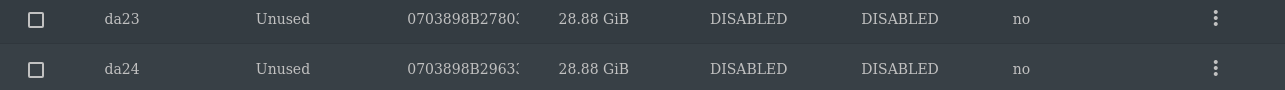

The WebUI shows the USB drives as da23 and da24 and unused:

So, my primary concern is that I don't want to be booting from these 500G drives because they are unreliable.

Secondarily, I would like to be able to import the "das-temp" pool again just to verify that I had finished copying data off of it (I haven't touched it in 2 months (more? Less? Who can remember that long ago) due to our second baby arriving at the beginning of July and the hectic lifestyle of having a 19 month old and a wife in her 3rd trimester prior to that)

Thanks in advance,

Tyler

I went to System -> Update, selected the 11.2-STABLE train, checked for updates, and installed them. The update took about an hour in total.

When it came back up, I noticed that one of my pools was showing as invalid. This particular pool is not important and it has a history of health issues. It's called "das-temp" and is composed of 10x 500G hard drives. I was using it to move around several hundred gigabytes of data; I don't think there's anything important on it.

I removed the pool (preserving the data and configuration) and tried to import it. The WebUI doesn't offer it as an option to import; importing it from the CLI over SSH shows that it's missing 3 devices. The disks in this pool are unreliable, and after each reboot I have to resilver back and forth between two or three different disks, so I expected to see only 1 missing. There are a total of 12 500G drives in the system, so each time I reboot, the pool is imported as 11 disks and I resilver onto one of the spare disks (yes, it's a mess, but it was a stopgap solution).

Code:

    [tmcgeorge@gnosis ~]$ sudo zpool import
       pool: das-temp
         id: 10051433617479478656
      state: UNAVAIL
     status: One or more devices are missing from the system.
     action: The pool cannot be imported. Attach the missing
             devices and try again.
        see: http://illumos.org/msg/ZFS-8000-3C
     config:

            das-temp                                        UNAVAIL  insufficient replicas
              raidz2-0                                      UNAVAIL  insufficient replicas
                gptid/136e4b45-2f7e-11e8-a614-a0369f039be1  ONLINE
                gptid/4121e1fb-39c9-11e8-87fb-a0369f039be1  ONLINE
                gptid/f694e434-3286-11e8-8700-a0369f039be1  ONLINE
                gptid/302efe83-3227-11e8-8700-a0369f039be1  ONLINE
                gptid/19493ef0-2f7e-11e8-a614-a0369f039be1  ONLINE
                12004337200713602199                        UNAVAIL  cannot open
                gptid/1c3769a2-2f7e-11e8-a614-a0369f039be1  ONLINE
                13048637244342766036                        UNAVAIL  cannot open
                7064651067791550041                         UNAVAIL  cannot open
                gptid/2063b361-2f7e-11e8-a614-a0369f039be1  ONLINE
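To make the "insufficient replicas" message concrete: a raidz2 vdev has two parity devices, so it can stay available with at most two members missing, and the listing above shows three. The rough Python sketch below just tallies the member states from the config lines. The helper name `summarize_vdev` and the constant `RAIDZ2_PARITY` are made up for illustration, and the parsing assumes members appear either as `gptid/...` labels or as bare numeric GUIDs, as they do in this output.

```python
def summarize_vdev(config_text):
    """Count vdev member devices by state from `zpool import` config lines."""
    counts = {}
    for line in config_text.splitlines():
        parts = line.split()
        if len(parts) < 2:
            continue
        name, state = parts[0], parts[1]
        # Members show up as gptid/... labels, or as a bare numeric GUID
        # when ZFS cannot open the device (assumption based on this output).
        if name.startswith("gptid/") or name.isdigit():
            counts[state] = counts.get(state, 0) + 1
    return counts

# raidz2 carries two parity devices, so two missing members is the limit.
RAIDZ2_PARITY = 2

config = """\
das-temp UNAVAIL insufficient replicas
raidz2-0 UNAVAIL insufficient replicas
gptid/136e4b45-2f7e-11e8-a614-a0369f039be1 ONLINE
gptid/4121e1fb-39c9-11e8-87fb-a0369f039be1 ONLINE
gptid/f694e434-3286-11e8-8700-a0369f039be1 ONLINE
gptid/302efe83-3227-11e8-8700-a0369f039be1 ONLINE
gptid/19493ef0-2f7e-11e8-a614-a0369f039be1 ONLINE
12004337200713602199 UNAVAIL cannot open
gptid/1c3769a2-2f7e-11e8-a614-a0369f039be1 ONLINE
13048637244342766036 UNAVAIL cannot open
7064651067791550041 UNAVAIL cannot open
gptid/2063b361-2f7e-11e8-a614-a0369f039be1 ONLINE
"""

counts = summarize_vdev(config)
missing = counts.get("UNAVAIL", 0)
importable = missing <= RAIDZ2_PARITY

print(counts)
print("missing:", missing, "importable:", importable)
```

With 7 members ONLINE and 3 UNAVAIL, the vdev is one device past what raidz2 can absorb, which matches the pool refusing to import until at least one of the missing disks is visible again.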

What has me confused now is that in the WebUI, two of the drives that are listed as 500G show up as members of "Boot Pool". The system should be booting from a pair of 32G USB drives.

Code:

    [tmcgeorge@gnosis ~]$ zpool list | grep freenas-boot
    freenas-boot 28.8G 2.44G 26.3G - - - 8% 1.00x ONLINE -
    [tmcgeorge@gnosis ~]$ zpool status freenas-boot
      pool: freenas-boot
     state: ONLINE
      scan: scrub repaired 0 in 0 days 00:05:04 with 0 errors on Tue Aug 13 03:50:06 2019
    config:

            NAME                                            STATE     READ WRITE CKSUM
            freenas-boot                                    ONLINE       0     0     0
              mirror-0                                      ONLINE       0     0     0
                gptid/18204fbd-e1f1-11e8-b4a8-a0369f039be1  ONLINE       0     0     0
                gptid/1849fc72-e1f1-11e8-b4a8-a0369f039be1  ONLINE       0     0     0

    errors: No known data errors

I really am not sure what's happening. It's almost as if the WebUI sees something different than camcontrol devlist does:

Code:

    [tmcgeorge@gnosis ~]$ sudo camcontrol devlist
    <ATA WDC WD80EMAZ-00W 0A83>   at scbus0 target 4 lun 0 (da0,pass0)
    <HP EF0300FATFD HPD7>         at scbus0 target 6 lun 0 (da1,pass1)
    <HP EF0300FATFD HPD7>         at scbus0 target 7 lun 0 (da2,pass2)
    <ATA Hitachi HUA72303 A5C0>   at scbus0 target 8 lun 0 (da3,pass3)
    <ATA Hitachi HUA72303 A5C0>   at scbus0 target 9 lun 0 (da4,pass4)
    <ATA Hitachi HUA72303 AA10>   at scbus0 target 10 lun 0 (da5,pass5)
    <ATA Hitachi HUS72403 A5F0>   at scbus0 target 11 lun 0 (da6,pass6)
    <ATA WDC WD80EMAZ-00W 0A83>   at scbus1 target 0 lun 0 (da7,pass7)
    <ATA WDC WD80EMAZ-00W 0A83>   at scbus1 target 1 lun 0 (da8,pass8)
    <ATA WDC WD80EMAZ-00W 0A83>   at scbus1 target 2 lun 0 (da9,pass9)
    <ATA WDC WD80EMAZ-00W 0A83>   at scbus1 target 3 lun 0 (da10,pass10)
    <ATA WDC WD5000ABYS-0 1C01>   at scbus1 target 4 lun 0 (da11,pass11)
    <ATA WDC WD5000ABYS-0 1C01>   at scbus1 target 5 lun 0 (da12,pass12)
    <ATA WDC WD5000ABYS-0 1C01>   at scbus1 target 6 lun 0 (da13,pass13)
    <ATA WDC WD5000ABYS-0 1C01>   at scbus1 target 8 lun 0 (da14,pass14)
    <ATA WDC WD5000ABYS-0 1C01>   at scbus1 target 9 lun 0 (da15,pass15)
    <ATA WDC WD5000ABYS-0 1C01>   at scbus1 target 17 lun 0 (da16,pass16)
    <ATA WDC WD5000ABYS-0 1C01>   at scbus1 target 18 lun 0 (da17,pass17)
    <ATA WDC WD5000ABYS-0 1C01>   at scbus1 target 19 lun 0 (da18,pass18)
    <HP HP SAS EXP Card 2.06>     at scbus1 target 20 lun 0 (ses0,pass19)
    < PMAP>                       at scbus3 target 0 lun 0 (da19,pass20)
    < PMAP>                       at scbus4 target 0 lun 0 (da20,pass21)

da19 and da20 showing as

"< PMAP>" is normal for USB drives (based on what I remember when setting this system up). But when I look in the WebUI, da19 and ds20 show up as "Boot Pool" but also show up as 500G:The WebUI shows the USB drives as da23 and da24 and unused:

So, my primary concern is that I don't want to be booting from these 500G drives because they are unreliable.
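One way to check which physical disks actually back the boot pool, independent of the WebUI, would be to match the `gptid/...` labels from `zpool status freenas-boot` against `glabel status`, which lists each label next to the partition it lives on. The Python sketch below only illustrates that matching. The `glabel status` text in it is a hypothetical sample (the `da19p2` and `da20p2` components are assumed, not taken from this system), and the helper names `parse_glabel_status` and `disk_of` are invented for the example.

```python
import re

def parse_glabel_status(text):
    """Map label name -> backing component from `glabel status` output."""
    mapping = {}
    for line in text.splitlines():
        parts = line.split()
        # Expect three columns (Name, Status, Components); skip the header row.
        if len(parts) != 3 or parts[0] == "Name":
            continue
        label, _status, component = parts
        mapping[label] = component
    return mapping

def disk_of(component):
    """Strip a trailing GPT partition suffix, e.g. 'da19p2' -> 'da19'."""
    m = re.match(r"^([a-z]+\d+)p\d+$", component)
    return m.group(1) if m else component

# HYPOTHETICAL sample: the component column here is assumed for illustration.
glabel_output = """\
                                      Name  Status  Components
gptid/18204fbd-e1f1-11e8-b4a8-a0369f039be1     N/A  da19p2
gptid/1849fc72-e1f1-11e8-b4a8-a0369f039be1     N/A  da20p2
"""

# Labels copied from the `zpool status freenas-boot` output above.
boot_labels = [
    "gptid/18204fbd-e1f1-11e8-b4a8-a0369f039be1",
    "gptid/1849fc72-e1f1-11e8-b4a8-a0369f039be1",
]

labels = parse_glabel_status(glabel_output)
boot_disks = sorted(disk_of(labels[l]) for l in boot_labels if l in labels)
print(boot_disks)
```

The resulting device names can then be compared against `camcontrol devlist` (model string) and `diskinfo -v` (reported size) to see whether the boot mirror really sits on the USB sticks or on the 500G drives.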

Secondarily, I would like to be able to import the "das-temp" pool again just to verify that I had finished copying data off of it. I haven't touched it in about 2 months (more? less? who can remember that long ago) because our second baby arrived at the beginning of July, and before that life was hectic with a 19-month-old and a wife in her third trimester.

Thanks in advance,

Tyler