pechkin000

Explorer

- Joined: Jan 24, 2014
- Messages: 59

Hi,


I would really appreciate any guidance on this.

I had a bad disk. I was unable to offline it before replacing it; I kept getting an error that the device was not available.

So I shut the server down and inserted a new disk. Here is what my `zpool status` looks like now:

```
replicator# zpool status
  pool: backups
 state: DEGRADED
status: One or more devices has been taken offline by the administrator.
        Sufficient replicas exist for the pool to continue functioning in a
        degraded state.
action: Online the device using 'zpool online' or replace the device with
        'zpool replace'.
  scan: scrub repaired 540K in 0 days 05:28:45 with 0 errors on Wed Mar 6 18:51:22 2019
config:

        NAME                                            STATE     READ WRITE CKSUM
        backups                                         DEGRADED     0     0     0
          raidz3-0                                      DEGRADED     0     0     0
            gptid/a38d9d54-2470-11e7-be70-ac220b8c944c  ONLINE       0     0     0
            gptid/6ba58636-4588-11e7-867f-ac220b8c944c  ONLINE       0     0     0
            gptid/e3acea0a-8574-11e4-9c86-ac220b8c944c  ONLINE       0     0     0
            gptid/18b749b3-c0b6-11e7-81f8-ac220b8c944c  ONLINE       0     0     0
            15678995806359064346                        OFFLINE      0     0     0  was /dev/gptid/8016f0cf-5557-11e4-a84e-ac220b8c944c
            gptid/a0fb3bb7-c685-11e4-acbc-ac220b8c944c  ONLINE       0     0     0
            gptid/80f31598-5557-11e4-a84e-ac220b8c944c  ONLINE       0     0     0
            gptid/8163ab25-5557-11e4-a84e-ac220b8c944c  ONLINE       0     0     0

errors: No known data errors

  pool: freenas-boot
 state: ONLINE
  scan: scrub repaired 0 in 0 days 00:01:56 with 0 errors on Sat Mar 2 03:46:56 2019
config:

        NAME          STATE     READ WRITE CKSUM
        freenas-boot  ONLINE       0     0     0
          ada0p2      ONLINE       0     0     0

errors: No known data errors
```
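For readers following along, a minimal Python sketch of what the status output above is saying: the missing member is no longer listed by its gptid label but by a numeric vdev GUID, with its former path after "was". The regex and the function name `missing_vdevs` are assumptions made for illustration (this is not a FreeNAS or ZFS tool), and the sample text is trimmed from the output above.

```python
import re
from typing import List, Tuple

# A leaf vdev that zpool status lists by numeric GUID because its device is gone,
# followed by STATE, READ/WRITE/CKSUM counters and "was <former path>".
MISSING_VDEV = re.compile(
    r"^\s*(?P<guid>\d+)\s+(?P<state>[A-Z]+)\s+\S+\s+\S+\s+\S+\s+was\s+(?P<path>\S+)"
)


def missing_vdevs(status_text: str) -> List[Tuple[str, str, str]]:
    """Return (guid, state, former_path) for every vdev shown with a 'was' path."""
    found = []
    for line in status_text.splitlines():
        match = MISSING_VDEV.match(line)
        if match:
            found.append((match.group("guid"), match.group("state"), match.group("path")))
    return found


# Trimmed excerpt of the zpool status output above.
STATUS = """\
          raidz3-0                                      DEGRADED     0     0     0
            gptid/18b749b3-c0b6-11e7-81f8-ac220b8c944c  ONLINE       0     0     0
            15678995806359064346                        OFFLINE      0     0     0  was /dev/gptid/8016f0cf-5557-11e4-a84e-ac220b8c944c
            gptid/a0fb3bb7-c685-11e4-acbc-ac220b8c944c  ONLINE       0     0     0
"""

for guid, state, path in missing_vdevs(STATUS):
    print(guid, state, path)
```

Lines naming a present device (gptid labels, `raidz3-0`) do not start with a bare number, so only the GUID entry is reported.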

If I try to online it in the GUI, I get this:

```
  File "./freenasUI/middleware/notifier.py", line 287, in zfs_online_disk
    c.call('pool.online', volume.id, {'label': label})
  File "/usr/local/lib/python3.7/site-packages/middlewared/client/client.py", line 453, in call
    raise ValidationErrors(c.extra)
middlewared.client.client.ValidationErrors: [EINVAL] options.label: Label /dev/gptid/8016f0cf-5557-11e4-a84e-ac220b8c944c not found on this pool.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/local/lib/python3.7/site-packages/tastypie/resources.py", line 219, in wrapper
    response = callback(request, *args, **kwargs)
  File "./freenasUI/api/resources.py", line 899, in online_disk
    notifier().zfs_online_disk(obj, deserialized.get('label'))
  File "./freenasUI/middleware/notifier.py", line 289, in zfs_online_disk
    raise MiddlewareError(f'Disk online failed: {str(e)}')
freenasUI.middleware.exceptions.MiddlewareError: [MiddlewareError: Disk online failed: [EINVAL] options.label: Label /dev/gptid/8016f0cf-5557-11e4-a84e-ac220b8c944c not found on this pool.]
```

If I do `zpool online backups /dev/gptid/8016f0cf-5557-11e4-a84e-ac220b8c944c`, then I get this:

```
replicator# zpool online backups /dev/gptid/806359064346
cannot online /dev/gptid/806359064346: no such device in pool
replicator# zpool online backups /dev/gptid/8016f0cf-5557-11e4-a84e-ac220b8c944c
warning: device '/dev/gptid/8016f0cf-5557-11e4-a84e-ac220b8c944c' onlined, but remains in faulted state
use 'zpool replace' to replace devices that are no longer present
replicator#
```
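The two `zpool online` attempts above pass different kinds of identifier. As a rough illustration only, here is a hypothetical helper (`classify_identifier` is not part of any ZFS or FreeNAS tool): a gptid label is a GPT partition UUID under `/dev/gptid/`, while `15678995806359064346` from `zpool status` is a 64-bit vdev GUID. The argument `/dev/gptid/806359064346` fits neither pattern, which is consistent with the "no such device in pool" reply.

```python
import re

# gptid labels are GPT partition UUIDs, e.g. 8016f0cf-5557-11e4-a84e-ac220b8c944c
UUID_RE = re.compile(
    r"[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}", re.IGNORECASE
)


def classify_identifier(identifier: str) -> str:
    """Roughly classify a device argument given to zpool (illustrative only)."""
    name = identifier.rsplit("/", 1)[-1]
    if identifier.startswith(("/dev/gptid/", "gptid/")):
        # Under /dev/gptid/ we expect a full UUID, not a fragment of something else.
        return "gptid label" if UUID_RE.fullmatch(name) else "malformed gptid label"
    if name.isdecimal() and int(name) < 2**64:
        # zpool status prints a missing member's vdev GUID as a plain decimal number.
        return "vdev GUID"
    return "other device path"


for arg in (
    "/dev/gptid/806359064346",
    "/dev/gptid/8016f0cf-5557-11e4-a84e-ac220b8c944c",
    "15678995806359064346",
):
    print(f"{arg}: {classify_identifier(arg)}")
```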

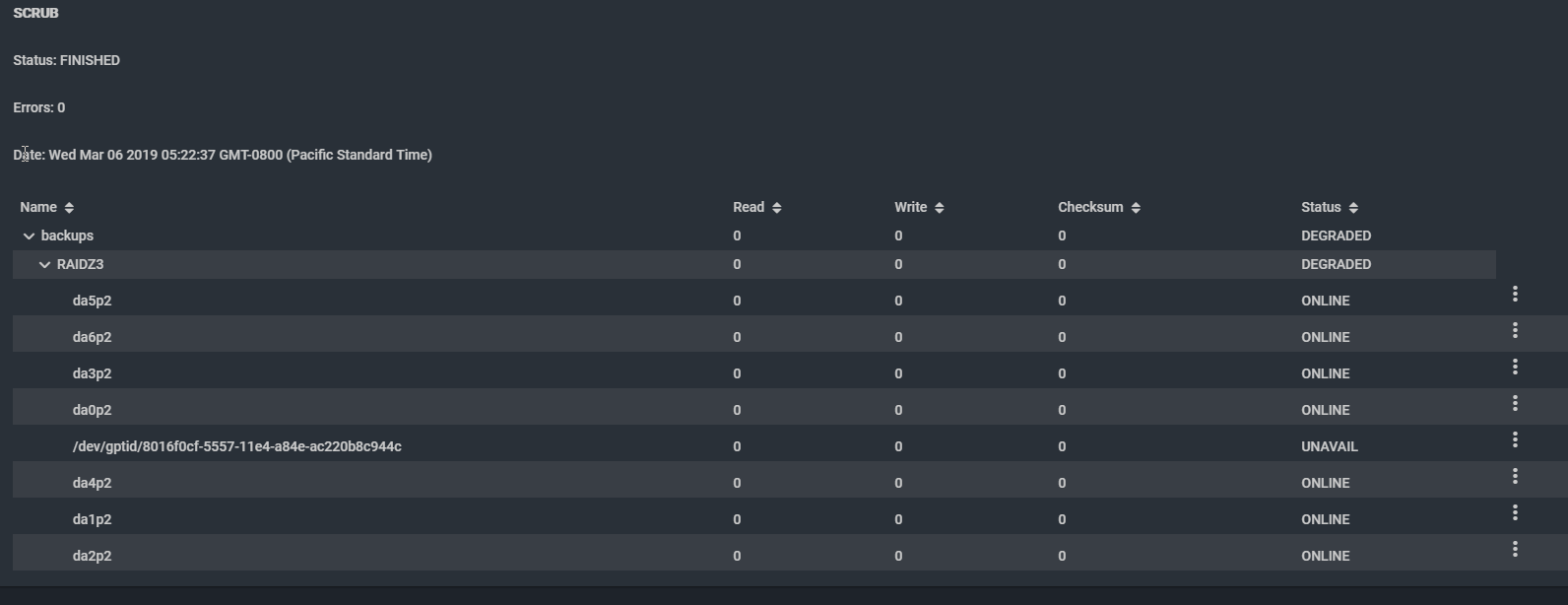

AFter I do this, im GUI it shows up as unavailable:

And if I try to do DIsk Replace in GUI it asks me if I want to replace Disk Member da7, I say yes (tired both forced and not forced option, i get something like this:

Code:

Mar 8 09:58:14 replicator uwsgi: [middleware.exceptions:36] [MiddlewareError: Disk online failed: [EINVAL] options.label: Label /dev/gptid/8016f0cf-5557-11e4-a84e-ac220b8c944c not found on this pool.] Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=13712933058586299289 Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=4742708301625801581 Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=10722137843091395938 Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=10312215785613305283 Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=15678995806359064346 Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=18414390688458562224 Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=7372792234020972046 Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=14887578890026946931
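A small Python sketch for summarizing log lines like these: it groups the `vdev_guid` values by `pool_guid`. The function name `vdevs_by_pool` is an assumption for illustration. It only counts which GUIDs produced a "vdev state changed" message; the log does not say which state each vdev moved to.

```python
import re
from collections import defaultdict
from typing import Dict, List

# Each ZFS "vdev state changed" syslog message names the pool and the vdev by GUID.
EVENT_RE = re.compile(r"pool_guid=(\d+) vdev_guid=(\d+)")


def vdevs_by_pool(log_text: str) -> Dict[str, List[str]]:
    """Group vdev GUIDs by pool GUID, in first-seen order, without repeats."""
    pools: Dict[str, List[str]] = defaultdict(list)
    for pool_guid, vdev_guid in EVENT_RE.findall(log_text):
        if vdev_guid not in pools[pool_guid]:
            pools[pool_guid].append(vdev_guid)
    return dict(pools)


# The 10:00:08 events from the log above, one per line.
LOG = """\
Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=13712933058586299289
Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=4742708301625801581
Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=10722137843091395938
Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=10312215785613305283
Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=15678995806359064346
Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=18414390688458562224
Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=7372792234020972046
Mar 8 10:00:08 replicator ZFS: vdev state changed, pool_guid=7276832981028910459 vdev_guid=14887578890026946931
"""

OFFLINE_GUID = "15678995806359064346"  # the member zpool status shows as OFFLINE

for pool_guid, vdevs in vdevs_by_pool(LOG).items():
    print(pool_guid, len(vdevs), OFFLINE_GUID in vdevs)
```

For this log it reports a single pool GUID with eight vdev GUIDs, one of them the OFFLINE member. That matches the eight disks under `raidz3-0`, so the pool is presumably `backups`. Because `findall` scans the whole string, the sketch also works on the log when all entries are pasted onto one line.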

I tried `zpool replace backups /dev/gptid/8016f0cf-5557-11e4-a84e-ac220b8c944c`, but all I get is:

```
replicator# zpool replace backups /dev/gptid/8016f0cf-5557-11e4-a84e-ac220b8c944c
cannot open '/dev/gptid/8016f0cf-5557-11e4-a84e-ac220b8c944c': No such file or directory
replicator#
```
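Some context on that error: the zpool(8) manual gives the syntax as `zpool replace [-f] pool device [new_device]` and states that when `new_device` is omitted it defaults to the old device. The one-argument command therefore asks ZFS to open `/dev/gptid/8016f0cf-...` as the new disk, and that label no longer exists. The sketch below only builds argument lists and runs nothing. `zpool_replace_argv` is a hypothetical helper, the use of the vdev GUID as the old device is an assumption, and `NEW_DEVICE` is a placeholder for the new disk's partition, whose real gptid is not shown in this post.

```python
from typing import List, Optional


def zpool_replace_argv(pool: str, old_device: str,
                       new_device: Optional[str] = None,
                       force: bool = False) -> List[str]:
    """Build argv for `zpool replace [-f] pool device [new_device]` (not executed).

    If new_device is omitted, zpool reuses old_device as the new device,
    so it tries to open the old path again.
    """
    argv = ["zpool", "replace"]
    if force:
        argv.append("-f")
    argv += [pool, old_device]
    if new_device is not None:
        argv.append(new_device)
    return argv


# What was tried above: one device argument, so the vanished gptid path is reopened.
TRIED = zpool_replace_argv("backups", "/dev/gptid/8016f0cf-5557-11e4-a84e-ac220b8c944c")

# Hypothetical two-argument form: old member named by its GUID from zpool status,
# new member given explicitly. NEW_DEVICE is a placeholder, not a real label.
NEW_DEVICE = "gptid/<new-partition-uuid>"
PROPOSED = zpool_replace_argv("backups", "15678995806359064346", NEW_DEVICE)

print(" ".join(TRIED))
print(" ".join(PROPOSED))
```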

I would really appreciate any help with this. Thank you guys in advance!!!
