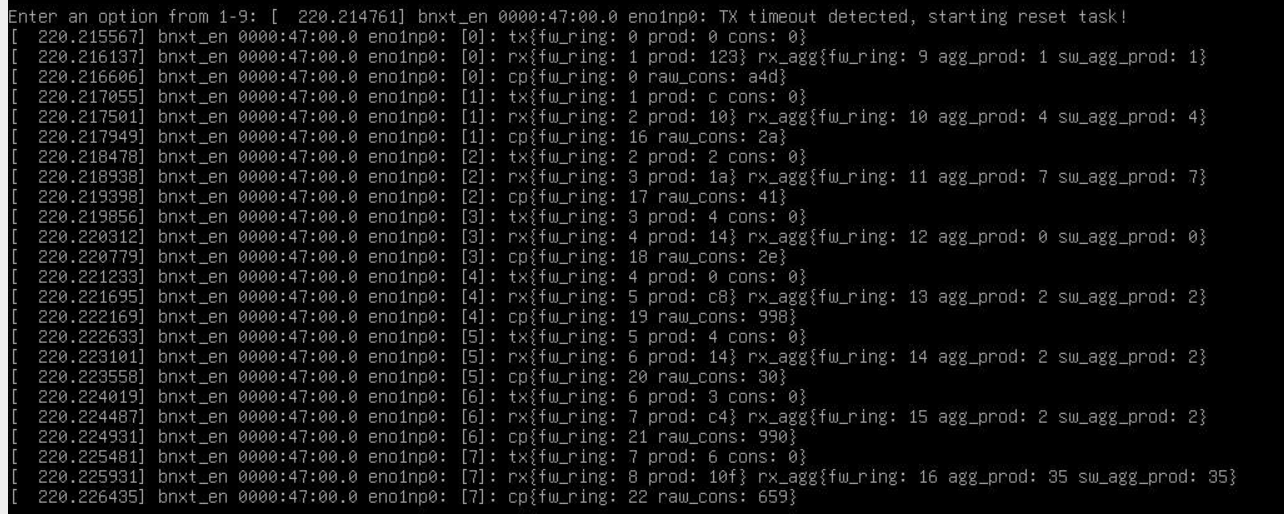

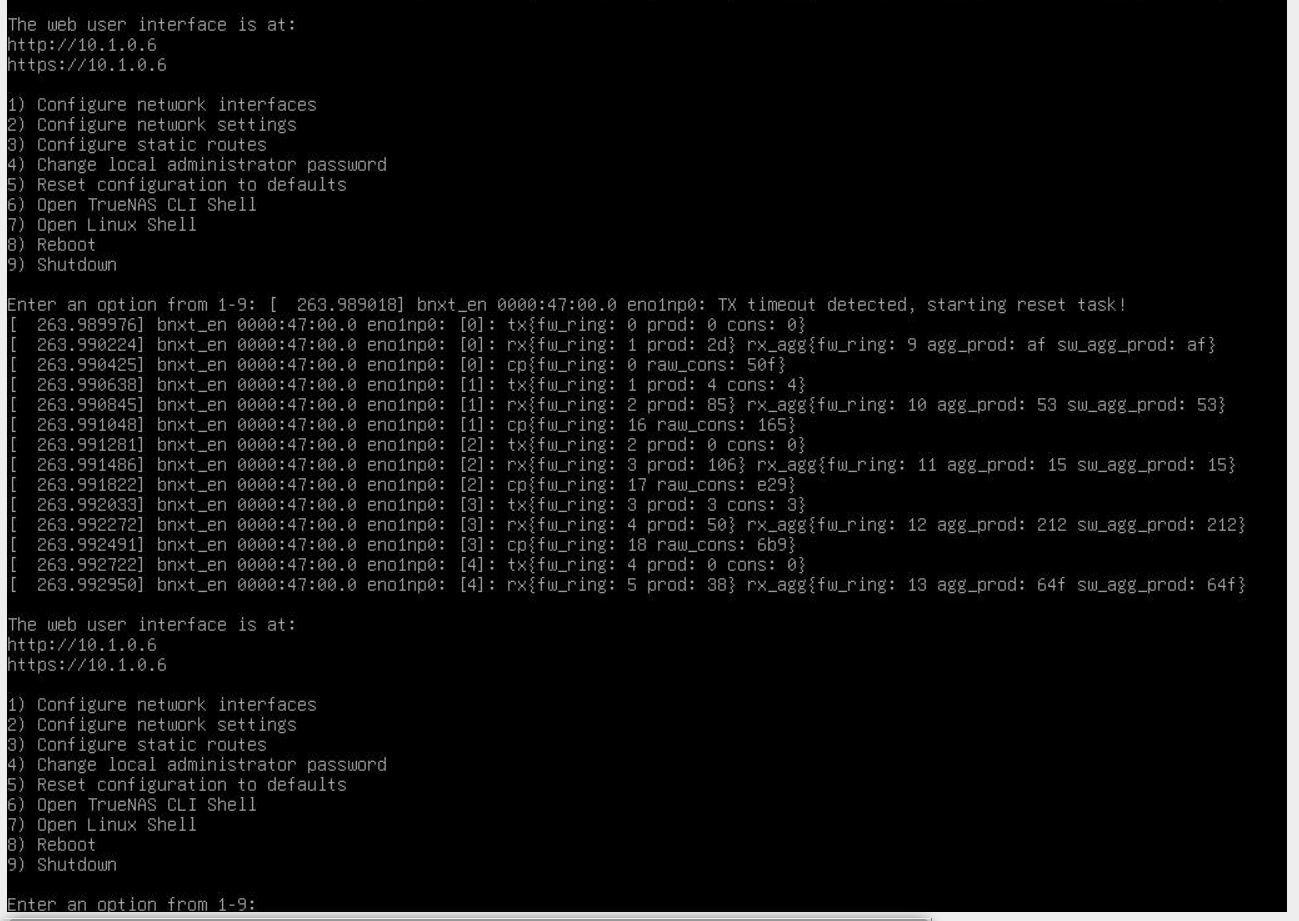

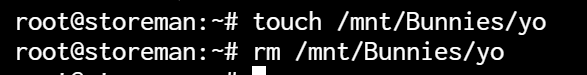

This was enough to cause it to reboot:

It rebooted a few seconds after I performed this task. Is there something telling it "after writing a file, go ahead and force-reboot the system"?

An Optane metadata vdev with an SSD pool? That's borderline crazy.

You have not fully described your system and how everything is wired and powered. From the symptoms, it could be that there's enough power for reading but not for writing.

Hardware Specs

- Chassis: 45Drives Storinator XL60.

- Motherboard: Supermicro H12SSL-NT.

- CPU: AMD EPYC 7313P (16-core).

- RAM: 256GB -> 8 sticks of 32GB DDR4 3200.

- PCIe1: ConnectX-6 -> PCIe 4.0 x4 -> 2-port 25Gb SFP28.

- PCIe2: LSI 9305 24i -> PCIe 3.0 x8 (all 6 ports plugged into direct-attach backplanes with 24 SSDs).

- PCIe3: LSI 9305 24i -> PCIe 3.0 x8 (all 6 ports plugged into direct-attach backplanes with 24 SSDs).

- PCIe4: LSI 9305 24i -> PCIe 3.0 x8 (all 6 ports plugged into direct-attach backplanes with 24 SSDs).

- PCIe5: LSI 9305 24i -> PCIe 3.0 x8 (all 6 ports plugged into direct-attach backplanes with 24 SSDs).

- PCIe6: LSI 9305 24i -> PCIe 3.0 x8 (all 6 ports plugged into direct-attach backplanes with 24 SSDs).

- PCIe7: LSI 9305 16e -> PCIe 3.0 x8 (3 ports plugged into SAS expanders in another Storinator XL60 chassis with 60 HDDs).

- NVMe1: 960GB Intel Optane 905p -> PCIe 3.0 x4

- NVMe2: 960GB Intel Optane 905p -> PCIe 3.0 x4

- SlimSAS1: SlimSAS to 2 x U.2 -> 2 x 960GB Intel Optane 905p -> PCIe 3.0 x4

- SlimSAS2: SlimSAS to 2 x miniSAS HD (only 6 SSDs connected, but both cables plugged in)

There are no free PCIe ports in this system; although, if I wanted to use bifurcation, there are an additional 20 lanes on the x16 PCIe ports.

zpool Specs

My main Bunnies zpool is gonna change to a multi-vdev dRAID configuration once I figure out what's wrong. When copying snapshots to my HDD pool, some were missed, so I need to copy those again.

- boot-pool -> 2 x 60GB Corsair Force SSDs

- TrueNAS-Apps -> 2 x 2TB Crucial MX500 SSDs

- Bunnies

-> 80 x 2TB and 4TB Crucial MX500 SSDs and 4 x 960GB Intel Optane 905p

-> This is where I store my files.

-> I back up this pool to Wolves and also to another zpool on an offsite NAS.

- Wolves

-> 60 x 10TB HGST Helium HDDs and 2 x 2TB Crucial MX500 SSDs as metadata.

-> All data in this pool is a backup of Bunnies and another pool on my offsite NAS.

Code:

    # zpool status -vL
      pool: Bunnies
     state: ONLINE
      scan: scrub repaired 0B in 08:58:36 with 0 errors on Wed Dec 6 14:05:11 2023
    remove: Removal of vdev 1 copied 1.79T in 1h37m, completed on Tue Oct 31 07:02:38 2023
            955M memory used for removed device mappings
    config:

    NAME                  STATE     READ WRITE CKSUM
    Bunnies               ONLINE       0     0     0
      mirror-0            ONLINE       0     0     0
        sdch2             ONLINE       0     0     0
        sdcp2             ONLINE       0     0     0
      mirror-2            ONLINE       0     0     0
        sdfc2             ONLINE       0     0     0
        sdw2              ONLINE       0     0     0
      mirror-3            ONLINE       0     0     0
        sdg2              ONLINE       0     0     0
        sdi2              ONLINE       0     0     0
      mirror-4            ONLINE       0     0     0
        sdfj2             ONLINE       0     0     0
        sde2              ONLINE       0     0     0
      mirror-5            ONLINE       0     0     0
        sdfo2             ONLINE       0     0     0
        sdaa2             ONLINE       0     0     0
      mirror-8            ONLINE       0     0     0
        sdae2             ONLINE       0     0     0
        sdff2             ONLINE       0     0     0
      mirror-11           ONLINE       0     0     0
        sdfi2             ONLINE       0     0     0
        sdz2              ONLINE       0     0     0
      mirror-15           ONLINE       0     0     0
        sdfp2             ONLINE       0     0     0
        sdfe2             ONLINE       0     0     0
      mirror-16           ONLINE       0     0     0
        sdh2              ONLINE       0     0     0
        sdfr2             ONLINE       0     0     0
      mirror-17           ONLINE       0     0     0
        sdv2              ONLINE       0     0     0
        sdfg2             ONLINE       0     0     0
      mirror-18           ONLINE       0     0     0
        sdfh2             ONLINE       0     0     0
        sdfd2             ONLINE       0     0     0
      mirror-20           ONLINE       0     0     0
        sdf2              ONLINE       0     0     0
        sdfq2             ONLINE       0     0     0
      mirror-24           ONLINE       0     0     0
        sdk2              ONLINE       0     0     0
        sdcq2             ONLINE       0     0     0
      mirror-26           ONLINE       0     0     0
        sdad2             ONLINE       0     0     0
        sdd2              ONLINE       0     0     0
      mirror-27           ONLINE       0     0     0
        sdco2             ONLINE       0     0     0
        sdca2             ONLINE       0     0     0
      mirror-28           ONLINE       0     0     0
        sdcn2             ONLINE       0     0     0
        sdal2             ONLINE       0     0     0
      mirror-29           ONLINE       0     0     0
        sdce2             ONLINE       0     0     0
        sdn2              ONLINE       0     0     0
      mirror-31           ONLINE       0     0     0
        sdeh2             ONLINE       0     0     0
        sdan2             ONLINE       0     0     0
      mirror-32           ONLINE       0     0     0
        sdcg2             ONLINE       0     0     0
        sda2              ONLINE       0     0     0
      mirror-33           ONLINE       0     0     0
        sdc2              ONLINE       0     0     0
        sdb2              ONLINE       0     0     0
      mirror-34           ONLINE       0     0     0
        sdcb2             ONLINE       0     0     0
        sdp2              ONLINE       0     0     0
      mirror-35           ONLINE       0     0     0
        sdcf2             ONLINE       0     0     0
        sdq2              ONLINE       0     0     0
      mirror-36           ONLINE       0     0     0
        sdfb2             ONLINE       0     0     0
        sdao2             ONLINE       0     0     0
      mirror-37           ONLINE       0     0     0
        sdai2             ONLINE       0     0     0
        sdcv2             ONLINE       0     0     0
      mirror-38           ONLINE       0     0     0
        sdcl2             ONLINE       0     0     0
        sdaq2             ONLINE       0     0     0
      mirror-39           ONLINE       0     0     0
        sdap2             ONLINE       0     0     0
        sdfk2             ONLINE       0     0     0
      mirror-40           ONLINE       0     0     0
        sdfn2             ONLINE       0     0     0
        sdfl2             ONLINE       0     0     0
      mirror-41           ONLINE       0     0     0
        sdfm2             ONLINE       0     0     0
        sdcj2             ONLINE       0     0     0
      mirror-42           ONLINE       0     0     0
        sdcm2             ONLINE       0     0     0
        sdck2             ONLINE       0     0     0
      mirror-43           ONLINE       0     0     0
        sdj2              ONLINE       0     0     0
        sdah2             ONLINE       0     0     0
      mirror-44           ONLINE       0     0     0
        sdde2             ONLINE       0     0     0
        sdcu2             ONLINE       0     0     0
      mirror-45           ONLINE       0     0     0
        sdcs2             ONLINE       0     0     0
        sdeo2             ONLINE       0     0     0
      mirror-46           ONLINE       0     0     0
        sdaj2             ONLINE       0     0     0
        sdu2              ONLINE       0     0     0
      mirror-47           ONLINE       0     0     0
        sdt2              ONLINE       0     0     0
        sdac2             ONLINE       0     0     0
      mirror-48           ONLINE       0     0     0
        sdaf2             ONLINE       0     0     0
        sdcd2             ONLINE       0     0     0
      mirror-49           ONLINE       0     0     0
        sdci2             ONLINE       0     0     0
        sdak2             ONLINE       0     0     0
      mirror-50           ONLINE       0     0     0
        sdam2             ONLINE       0     0     0
        sdcc2             ONLINE       0     0     0
      mirror-51           ONLINE       0     0     0
        sdo2              ONLINE       0     0     0
        sdep2             ONLINE       0     0     0
      mirror-52           ONLINE       0     0     0
        sdr2              ONLINE       0     0     0
        sdag2             ONLINE       0     0     0
      mirror-53           ONLINE       0     0     0
        sdl2              ONLINE       0     0     0
        sdm2              ONLINE       0     0     0
    special
      mirror-13           ONLINE       0     0     0
        nvme1n1p1         ONLINE       0     0     0
        nvme0n1p1         ONLINE       0     0     0
      mirror-14           ONLINE       0     0     0
        nvme3n1p1         ONLINE       0     0     0
        nvme2n1p1         ONLINE       0     0     0
    spares
      sds2                AVAIL

    errors: No known data errors

      pool: TrueNAS-Apps
     state: ONLINE
      scan: resilvered 232K in 00:00:00 with 0 errors on Wed Dec 6 03:07:23 2023
    config:

    NAME                  STATE     READ WRITE CKSUM
    TrueNAS-Apps          ONLINE       0     0     0
      mirror-0            ONLINE       0     0     0
        sdfw1             ONLINE       0     0     0
        sdfx2             ONLINE       0     0     0

    errors: No known data errors

      pool: Wolves
     state: ONLINE
      scan: scrub repaired 0B in 05:11:50 with 0 errors on Thu Nov 23 10:07:44 2023
    config:

    NAME                  STATE     READ WRITE CKSUM
    Wolves                ONLINE       0     0     0
      draid2:5d:15c:1s-0  ONLINE       0     0     0
        sdbt2             ONLINE       0     0     0
        sdbs2             ONLINE       0     0     0
        sdbu2             ONLINE       0     0     0
        sddv2             ONLINE       0     0     0
        sded2             ONLINE       0     0     0
        sdas2             ONLINE       0     0     0
        sddp2             ONLINE       0     0     0
        sdbg2             ONLINE       0     0     0
        sddn2             ONLINE       0     0     0
        sdbl2             ONLINE       0     0     0
        sdbm2             ONLINE       0     0     0
        sdbn2             ONLINE       0     0     0
        sdbi2             ONLINE       0     0     0
        sdbj2             ONLINE       0     0     0
        sdaw2             ONLINE       0     0     0
      draid2:5d:15c:1s-1  ONLINE       0     0     0
        sddx2             ONLINE       0     0     0
        sdax2             ONLINE       0     0     0
        sdda2             ONLINE       0     0     0
        sday2             ONLINE       0     0     0
        sdby2             ONLINE       0     0     0
        sdbv2             ONLINE       0     0     0
        sdbz2             ONLINE       0     0     0
        sdds2             ONLINE       0     0     0
        sdbw2             ONLINE       0     0     0
        sdbx2             ONLINE       0     0     0
        sddt2             ONLINE       0     0     0
        sddq2             ONLINE       0     0     0
        sdar2             ONLINE       0     0     0
        sdbc2             ONLINE       0     0     0
        sddo2             ONLINE       0     0     0
      draid2:5d:15c:1s-2  ONLINE       0     0     0
        sdaz2             ONLINE       0     0     0
        sdcx2             ONLINE       0     0     0
        sdba2             ONLINE       0     0     0
        sdbb2             ONLINE       0     0     0
        sddr2             ONLINE       0     0     0
        sdbd2             ONLINE       0     0     0
        sdbf2             ONLINE       0     0     0
        sdbe2             ONLINE       0     0     0
        sdcr2             ONLINE       0     0     0
        sdbk2             ONLINE       0     0     0
        sdbp2             ONLINE       0     0     0
        sdbh2             ONLINE       0     0     0
        sdea2             ONLINE       0     0     0
        sddz2             ONLINE       0     0     0
        sdee2             ONLINE       0     0     0
      draid2:5d:15c:1s-3  ONLINE       0     0     0
        sdeg2             ONLINE       0     0     0
        sdct2             ONLINE       0     0     0
        sdef2             ONLINE       0     0     0
        sdeq2             ONLINE       0     0     0
        sddu2             ONLINE       0     0     0
        sdei2             ONLINE       0     0     0
        sdat2             ONLINE       0     0     0
        sdec2             ONLINE       0     0     0
        sdau2             ONLINE       0     0     0
        sdeb2             ONLINE       0     0     0
        sdav2             ONLINE       0     0     0
        sddw2             ONLINE       0     0     0
        sdbo2             ONLINE       0     0     0
        sdbq2             ONLINE       0     0     0
        sdbr2             ONLINE       0     0     0
    special
      mirror-4            ONLINE       0     0     0
        sdab              ONLINE       0     0     0
        sdfs              ONLINE       0     0     0
    spares
      draid2-0-0          AVAIL
      draid2-1-0          AVAIL
      draid2-2-0          AVAIL
      draid2-3-0          AVAIL

    errors: No known data errors

      pool: boot-pool
     state: ONLINE
    status: Some supported and requested features are not enabled on the pool.
            The pool can still be used, but some features are unavailable.
    action: Enable all features using 'zpool upgrade'. Once this is done,
            the pool may no longer be accessible by software that does not support
            the features. See zpool-features(7) for details.
      scan: scrub repaired 0B in 00:00:15 with 0 errors on Sat Dec 2 03:45:17 2023
    config:

    NAME                  STATE     READ WRITE CKSUM
    boot-pool             ONLINE       0     0     0
      mirror-0            ONLINE       0     0     0
        sdft3             ONLINE       0     0     0
        sdfu3             ONLINE       0     0     0

    errors: No known data errors

when writing to it. This began happening 3, maybe 4 days ago now?

I thought it was a power issue

Two days before that, I had already removed 15 SSDs that I wasn't using at the time. After it started happening, I thought it was a power issue, so I removed 48 SSDs (of 85), but still had the problem.

I already planned to add 128 SSD bays in here for my 123 SSDs, so I completely redid the power wiring and removed every one of the 128 SSD slots from the +5V rail on the PSUs. I also swapped the PSUs in this server with the ones in the other Storinator XL60 chassis, again thinking power was the issue.

I thought it was a heat issue

At some point, I noticed heat issues because I had switched all the fans to Noctua NF-12s during this 128 SSD bay transition. I put them all back to stock, and the heat issues went away. Still, I removed the ConnectX-6 card, and the reboots became less frequent.

I only recently found out why:

The real problem occurs when writing to Bunnies.

Because my PCs back up their data every 12 hours and on idle, whenever I stepped away from one, it would start writing data to Bunnies, forcing a reboot. When I removed the ConnectX-6 and switched to the onboard NICs, the DNS hostname no longer matched, so I could only access my NAS by IP. Because of this, my Windows boxes stopped backing up, lengthening the time between reboots to whenever my snapshots ran.

It was the writes

The reboots then started occurring about every hour. Since I take hourly snapshots, it was pretty clear the snapshots were what forced them.

With all snapshot and backup tasks disabled, and Windows unable to reach the NAS, the server stayed up for over 10 hours while running a `zpool scrub`. I'm now 100% certain that writes are the issue, and only writes to the Bunnies pool.
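One way to test that conclusion in a controlled fashion, with the scheduled tasks still disabled, would be a small deliberate write against each pool in turn. The following is only a rough sketch: the `write_test` helper and the scratch dataset paths mentioned in the comments are hypothetical, and it assumes GNU `dd` as found on a Linux-based install. It writes incompressible data so ZFS compression cannot shrink the write away, forces it to disk, and reports how many bytes landed.

```shell
#!/bin/sh
# Write <size_mib> MiB of random data into <dir>, force it to disk,
# print the resulting file size in bytes, then remove the test file.
# Assumes GNU dd (bs=1M, iflag=fullblock, conv=fsync).
write_test() {
    dir="$1"
    size_mib="$2"
    file="$dir/write-test.$$"
    # /dev/urandom keeps the data incompressible.
    dd if=/dev/urandom of="$file" bs=1M count="$size_mib" \
        iflag=fullblock conv=fsync 2>/dev/null || return 1
    wc -c < "$file"
    rm -f "$file"
}

# Self-check against a scratch directory. On the NAS, pass a dataset
# mountpoint instead (for example a hypothetical /mnt/Wolves/scratch first,
# then /mnt/Bunnies/scratch) and note which pool, if any, triggers a reboot.
scratch=$(mktemp -d)
write_test "$scratch" 1
rmdir "$scratch"
```

If a write of a few MiB to Wolves survives and the same write to Bunnies does not, that would point at something specific to the Bunnies pool or its devices rather than at writes in general.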

I'm not yet certain if the issue is physical (NVMe or SATA SSDs) or TrueNAS.

How to figure out what's wrong?

Is there a way to check this with `zdb`? If it's just a corrupt pool, I already planned to nix it and convert it to dRAID, but I first need to move off some more recent snapshots. That requires manually running `zfs send` to Wolves.
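For the manual `zfs send` step, here is a minimal sketch of an incremental replication that would carry the missed snapshots over. The dataset and snapshot names (`Bunnies/data`, `Wolves/backup/data`, `auto-old`, `auto-new`) are placeholders, not the real layout, and the `build_send_cmd` helper is hypothetical. It only prints the pipeline so it can be reviewed before anything is run.

```shell
#!/bin/sh
# Print (do not run) an incremental send/receive pipeline for review.
# All dataset and snapshot names passed in are placeholders.
build_send_cmd() {
    src="$1"    # source dataset on Bunnies
    from="$2"   # newest snapshot already present on the target
    to="$3"     # newest snapshot to copy
    dst="$4"    # target dataset on Wolves
    # -I includes every intermediate snapshot between $from and $to;
    # -u on the receiving side leaves the received dataset unmounted.
    printf 'zfs send -I %s@%s %s@%s | zfs recv -u %s\n' \
        "$src" "$from" "$src" "$to" "$dst"
}

build_send_cmd Bunnies/data auto-old auto-new Wolves/backup/data
```

Adding `-n -v` to the `zfs send` side performs a dry run that prints a size estimate without sending anything, which may be worth doing first given how fragile the box currently is.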