Space (Cadet), joined Apr 27, 2017, 9 messages

Hello to all of the TrueNAS/FreeNAS community,

Long time FreeNAS user, long live the king!

If there are inconsistencies or formatting problems in this post, let me know and I will do my best to correct them.

Until now, I have been able to resolve all my system issues using the available documentation. This is really great for the community, and I hope it lives long.

History: returning from holidays, I found my NAS system in a boot loop...

I have two mirrored vdevs, 2x 3TB and 2x 4TB, and before you ask: all CMR.

After identifying the drive that caused the reboot loop, I was finally able to boot into a stable system.

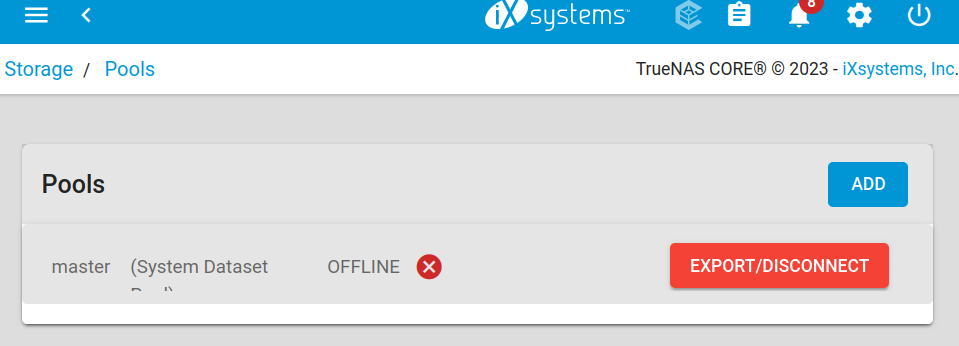

I found this status:

```
Total Disks: Unknown
Pool Status: OFFLINE
Used Space: Unknown
```

```
# zpool status -v
  pool: boot-pool
 state: ONLINE
  scan: scrub repaired 0B in 00:00:02 with 0 errors on Tue Dec 12 03:45:02 2023
config:

        NAME        STATE     READ WRITE CKSUM
        boot-pool   ONLINE       0     0     0
          ada0p2    ONLINE       0     0     0
```

```
# zpool status master
cannot open 'master': no such pool
```

```
# zpool import
   pool: master
     id: 13967929381008612991
  state: DEGRADED
 status: One or more devices are missing from the system.
 action: The pool can be imported despite missing or damaged devices.  The
        fault tolerance of the pool may be compromised if imported.
    see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-2Q
 config:

        master                                          DEGRADED
          mirror-0                                      DEGRADED
            gptid/28fbc45a-a51e-11eb-8d62-000c2998f3af  UNAVAIL  cannot open
            gptid/8508d98d-43da-11eb-bce6-000c2998f3af  ONLINE
          mirror-1                                      ONLINE
            gptid/189e1eda-ffa6-11e7-b58a-000c295d413f  ONLINE
            gptid/19db538b-ffa6-11e7-b58a-000c295d413f  ONLINE
```
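Reading the config: tree of zpool import is mostly a matter of indentation: the pool, then its top-level vdevs (mirror-0, mirror-1), then the leaf devices. As a minimal Python sketch, assuming the usual layout of two extra spaces per nesting level (the pasted output lost its indentation, so it is restored by hand here) and using a hypothetical helper `leaf_states`, the member that is not ONLINE can be picked out like this:

```python
from __future__ import annotations

# The "config:" tree from the zpool import output in this post. Indentation
# is restored by hand (assumed: two extra spaces per nesting level).
IMPORT_CONFIG = """\
        master                                          DEGRADED
          mirror-0                                      DEGRADED
            gptid/28fbc45a-a51e-11eb-8d62-000c2998f3af  UNAVAIL  cannot open
            gptid/8508d98d-43da-11eb-bce6-000c2998f3af  ONLINE
          mirror-1                                      ONLINE
            gptid/189e1eda-ffa6-11e7-b58a-000c295d413f  ONLINE
            gptid/19db538b-ffa6-11e7-b58a-000c295d413f  ONLINE
"""


def leaf_states(config: str) -> list[tuple[str | None, str, str]]:
    """Return (parent vdev, device, state) for every leaf of a zpool config tree.

    Nesting is inferred purely from indentation: a row whose successor is
    indented deeper has children (the pool or a vdev); every other row is a leaf.
    """
    rows = []
    for line in config.splitlines():
        line = line.expandtabs(8)  # zpool may indent with a tab
        if not line.strip():
            continue
        indent = len(line) - len(line.lstrip(" "))
        name, state = line.split()[:2]  # ignore trailing notes like "cannot open"
        rows.append((indent, name, state))

    leaves = []
    parents: list[tuple[int, str]] = []  # stack of enclosing (indent, name)
    for i, (indent, name, state) in enumerate(rows):
        # Drop enclosing entries that are not ancestors of this row.
        while parents and parents[-1][0] >= indent:
            parents.pop()
        next_indent = rows[i + 1][0] if i + 1 < len(rows) else -1
        if next_indent > indent:
            parents.append((indent, name))  # has children: pool or vdev
        else:
            leaves.append((parents[-1][1] if parents else None, name, state))
    return leaves


if __name__ == "__main__":
    for vdev, device, state in leaf_states(IMPORT_CONFIG):
        if state != "ONLINE":
            print(f"{vdev}: {device} is {state}")
```

On the pasted tree this prints only `mirror-0: gptid/28fbc45a-a51e-11eb-8d62-000c2998f3af is UNAVAIL`. The other side of that mirror, gptid/8508d98d-43da-11eb-bce6-000c2998f3af, is still ONLINE, which fits zpool import reporting that the pool can be imported in a DEGRADED state.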

I am unable to make any changes from the web GUI other than Export/Disconnect.

In the past I had a member drive fail and was able to replace it from the web UI. Not this time.

So, after two weeks of reading the forums and waiting for a replacement drive, here is a recap of what I did:

- Took out the faulty drive (UID 28fbc45a-a51e-11eb-8d62-000c2998f3af)

- Ran zpool import -F -n -R /mnt master (a recovery dry run): no errors

- Replaced the faulty drive with the new 4TB HDD

- Copied the partition table from the healthy 4TB drive with the UID 8508d98d-43da-11eb-bce6-000c2998f3af

- In Linux (the environment I am more used to):

```
sfdisk -d /dev/sdc > part_table-sdc-4tb
grep -v ^label-id part_table-sdc-4tb | sed -e 's/, *uuid=[0-9A-F-]*//' | sfdisk /dev/sdd
Checking that no-one is using this disk right now ... OK

Disk /dev/sdd: 3.64 TiB, 4000787030016 bytes, 7814037168 sectors
Disk model: ST4000VN006-3CW1
Units: sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 4096 bytes
I/O size (minimum/optimal): 4096 bytes / 4096 bytes

>>> Script header accepted.
>>> Script header accepted.
>>> Script header accepted.
>>> Script header accepted.
>>> Script header accepted.
>>> Script header accepted.
>>> Created a new GPT disklabel (GUID: 801DF633-1F79-734C-B19A-BFF618DF5616).
/dev/sdd1: Created a new partition 1 of type 'FreeBSD swap' and of size 2 GiB.
/dev/sdd2: Created a new partition 2 of type 'FreeBSD ZFS' and of size 3.6 TiB.
/dev/sdd3: Done.

New situation:
Disklabel type: gpt
Disk identifier: 801DF633-1F79-734C-B19A-BFF618DF5616

Device       Start        End    Sectors Size Type
/dev/sdd1      128    4194431    4194304   2G FreeBSD swap
/dev/sdd2  4194432 7814037127 7809842696 3.6T FreeBSD ZFS

The partition table has been altered.
Calling ioctl() to re-read partition table.
Syncing disks.
```

- Drive status:

```
gpart list
Geom name: ada0
modified: false
state: OK
fwheads: 16
fwsectors: 63
last: 67108823
first: 40
entries: 128
scheme: GPT
Providers:
1. Name: ada0p1
   Mediasize: 272629760 (260M)
   Sectorsize: 512
   Stripesize: 0
   Stripeoffset: 20480
   Mode: r0w0e0
   efimedia: HD(1,GPT,24647464-7aec-11ee-968e-5526027064af,0x28,0x82000)
   rawuuid: 24647464-7aec-11ee-968e-5526027064af
   rawtype: c12a7328-f81f-11d2-ba4b-00a0c93ec93b
   label: (null)
   length: 272629760
   offset: 20480
   type: efi
   index: 1
   end: 532519
   start: 40
2. Name: ada0p2
   Mediasize: 34074525696 (32G)
   Sectorsize: 512
   Stripesize: 0
   Stripeoffset: 272650240
   Mode: r1w1e1
   efimedia: HD(2,GPT,2466c8f3-7aec-11ee-968e-5526027064af,0x82028,0x3f78000)
   rawuuid: 2466c8f3-7aec-11ee-968e-5526027064af
   rawtype: 516e7cba-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 34074525696
   offset: 272650240
   type: freebsd-zfs
   index: 2
   end: 67084327
   start: 532520
Consumers:
1. Name: ada0
   Mediasize: 34359738368 (32G)
   Sectorsize: 512
   Mode: r1w1e2

Geom name: da0
modified: false
state: OK
fwheads: 255
fwsectors: 63
last: 5860533127
first: 40
entries: 128
scheme: GPT
Providers:
1. Name: da0p1
   Mediasize: 2147483648 (2.0G)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0
   efimedia: HD(1,GPT,1890ce7b-ffa6-11e7-b58a-000c295d413f,0x80,0x400000)
   rawuuid: 1890ce7b-ffa6-11e7-b58a-000c295d413f
   rawtype: 516e7cb5-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 2147483648
   offset: 65536
   type: freebsd-swap
   index: 1
   end: 4194431
   start: 128
2. Name: da0p2
   Mediasize: 2998445408256 (2.7T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0
   efimedia: HD(2,GPT,189e1eda-ffa6-11e7-b58a-000c295d413f,0x400080,0x15d10a300)
   rawuuid: 189e1eda-ffa6-11e7-b58a-000c295d413f
   rawtype: 516e7cba-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 2998445408256
   offset: 2147549184
   type: freebsd-zfs
   index: 2
   end: 5860533119
   start: 4194432
Consumers:
1. Name: da0
   Mediasize: 3000592982016 (2.7T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0

Geom name: da2
modified: false
state: OK
fwheads: 255
fwsectors: 63
last: 5860533127
first: 40
entries: 128
scheme: GPT
Providers:
1. Name: da2p1
   Mediasize: 2147483648 (2.0G)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0
   efimedia: HD(1,GPT,19ce5026-ffa6-11e7-b58a-000c295d413f,0x80,0x400000)
   rawuuid: 19ce5026-ffa6-11e7-b58a-000c295d413f
   rawtype: 516e7cb5-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 2147483648
   offset: 65536
   type: freebsd-swap
   index: 1
   end: 4194431
   start: 128
2. Name: da2p2
   Mediasize: 2998445408256 (2.7T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0
   efimedia: HD(2,GPT,19db538b-ffa6-11e7-b58a-000c295d413f,0x400080,0x15d10a300)
   rawuuid: 19db538b-ffa6-11e7-b58a-000c295d413f
   rawtype: 516e7cba-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 2998445408256
   offset: 2147549184
   type: freebsd-zfs
   index: 2
   end: 5860533119
   start: 4194432
Consumers:
1. Name: da2
   Mediasize: 3000592982016 (2.7T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0

Geom name: da3
modified: false
state: OK
fwheads: 255
fwsectors: 63
last: 7814037127
first: 40
entries: 128
scheme: GPT
Providers:
1. Name: da3p1
   Mediasize: 2147483648 (2.0G)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0
   efimedia: HD(1,GPT,db09d188-ca4b-e04e-a055-612289c0dd90,0x80,0x400000)
   rawuuid: db09d188-ca4b-e04e-a055-612289c0dd90
   rawtype: 516e7cb5-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 2147483648
   offset: 65536
   type: freebsd-swap
   index: 1
   end: 4194431
   start: 128
2. Name: da3p2
   Mediasize: 3998639460352 (3.6T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0
   efimedia: HD(2,GPT,6e82fe61-6a0a-4940-aef0-b961bc6a23ab,0x400080,0x1d180be08)
   rawuuid: 6e82fe61-6a0a-4940-aef0-b961bc6a23ab
   rawtype: 516e7cba-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 3998639460352
   offset: 2147549184
   type: freebsd-zfs
   index: 2
   end: 7814037127
   start: 4194432
Consumers:
1. Name: da3
   Mediasize: 4000787030016 (3.6T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0

Geom name: da1
modified: false
state: OK
fwheads: 255
fwsectors: 63
last: 7814037127
first: 40
entries: 128
scheme: GPT
Providers:
1. Name: da1p1
   Mediasize: 2147483648 (2.0G)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0
   efimedia: HD(1,GPT,84a9ecb6-43da-11eb-bce6-000c2998f3af,0x80,0x400000)
   rawuuid: 84a9ecb6-43da-11eb-bce6-000c2998f3af
   rawtype: 516e7cb5-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 2147483648
   offset: 65536
   type: freebsd-swap
   index: 1
   end: 4194431
   start: 128
2. Name: da1p2
   Mediasize: 3998639460352 (3.6T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0
   efimedia: HD(2,GPT,8508d98d-43da-11eb-bce6-000c2998f3af,0x400080,0x1d180be08)
   rawuuid: 8508d98d-43da-11eb-bce6-000c2998f3af
   rawtype: 516e7cba-6ecf-11d6-8ff8-00022d09712b
   label: (null)
   length: 3998639460352
   offset: 2147549184
   type: freebsd-zfs
   index: 2
   end: 7814037127
   start: 4194432
Consumers:
1. Name: da1
   Mediasize: 4000787030016 (3.6T)
   Sectorsize: 512
   Stripesize: 4096
   Stripeoffset: 0
   Mode: r0w0e0
```

- ZFS import:

```
# zpool import -F -n -R /mnt master
root@nas[~]# zpool import -F -R /mnt master
cannot import 'master': one or more devices is currently unavailable
```

- In Linux (the environment I am more used to):

```
zpool replace master /dev/gptid/28fbc45a-a51e-11eb-8d62-000c2998f3af /dev/gptid/6e82fe61-6a0a-4940-aef0-b961bc6a23ab
cannot open 'master': no such pool
```
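Before retrying the replace, the copied layout can be sanity-checked against the gpart list output. The sketch below is a minimal Python example, not a TrueNAS tool: `GPART_EXCERPT` is a trimmed copy of the da3 (new drive) and da1 (healthy 4TB partner) fields from that output, the helper names `parse_providers`, `compare_layout` and `size_is_consistent` are hypothetical, and 512-byte logical sectors are assumed, as gpart reports for these disks.

```python
from __future__ import annotations

import re

# Trimmed copy of the gpart list output in this post: only the fields compared
# below, for da3 (new 4TB drive) and da1 (its healthy mirror partner).
GPART_EXCERPT = """\
Geom name: da3
Providers:
1. Name: da3p1
   rawuuid: db09d188-ca4b-e04e-a055-612289c0dd90
   length: 2147483648
   type: freebsd-swap
   end: 4194431
   start: 128
2. Name: da3p2
   rawuuid: 6e82fe61-6a0a-4940-aef0-b961bc6a23ab
   length: 3998639460352
   type: freebsd-zfs
   end: 7814037127
   start: 4194432
Consumers:
1. Name: da3

Geom name: da1
Providers:
1. Name: da1p1
   rawuuid: 84a9ecb6-43da-11eb-bce6-000c2998f3af
   length: 2147483648
   type: freebsd-swap
   end: 4194431
   start: 128
2. Name: da1p2
   rawuuid: 8508d98d-43da-11eb-bce6-000c2998f3af
   length: 3998639460352
   type: freebsd-zfs
   end: 7814037127
   start: 4194432
Consumers:
1. Name: da1
"""

NAME_RE = re.compile(r"\d+\. Name: (\S+)$")


def parse_providers(text: str) -> dict[str, dict[str, str]]:
    """Map each provider (partition) name to its 'key: value' fields.

    Entries under 'Consumers:' describe whole disks and are skipped.
    """
    providers: dict[str, dict[str, str]] = {}
    current = None
    in_providers = False
    for raw in text.splitlines():
        line = raw.strip()
        if line == "Providers:":
            in_providers = True
        elif line == "Consumers:" or line.startswith("Geom name:"):
            in_providers, current = False, None
        elif in_providers:
            match = NAME_RE.match(line)
            if match:
                current = providers.setdefault(match.group(1), {})
            elif current is not None and ": " in line:
                key, value = line.split(": ", 1)
                current[key] = value
    return providers


def compare_layout(parts, new_disk, ref_disk, fields=("start", "end", "length", "type")):
    """List (suffix, field, new value, reference value) for every mismatch."""
    diffs = []
    for ref_name, ref in sorted(parts.items()):
        if not ref_name.startswith(ref_disk + "p"):
            continue
        suffix = ref_name[len(ref_disk):]  # e.g. "p2"
        new = parts.get(new_disk + suffix, {})
        for field in fields:
            if new.get(field) != ref.get(field):
                diffs.append((suffix, field, new.get(field), ref.get(field)))
    return diffs


def size_is_consistent(part, sector_size=512):
    """True if the byte length equals (end - start + 1) sectors of sector_size."""
    sectors = int(part["end"]) - int(part["start"]) + 1
    return int(part["length"]) == sectors * sector_size


if __name__ == "__main__":
    parts = parse_providers(GPART_EXCERPT)
    print("layout differences:", compare_layout(parts, "da3", "da1"))
    print("sizes consistent:", all(size_is_consistent(p) for p in parts.values()))
    new_zfs = parts["da3p2"]["rawuuid"]
    print("fresh GUID:", new_zfs != parts["da1p2"]["rawuuid"])
    print("replacement device: /dev/gptid/" + new_zfs)
```

With the pasted values, the two disks match partition for partition, each length equals (end - start + 1) × 512 bytes, and the new partitions carry fresh GUIDs (presumably because the sed step removed the uuid= fields, so sfdisk generated new ones). The printed /dev/gptid path is the one used as the new device in the zpool replace command, and the existing pool members follow the same naming: gptid/189e1eda-ffa6-11e7-b58a-000c295d413f is the rawuuid of da0p2.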

What do you suggest I do next?

Any help is really appreciated.

Tnx.
