is42theanswer (Cadet; joined Aug 20, 2023; 4 messages)

Hi,

I've spent a considerable amount of time reading and researching to get to the point where I have finally built my first ZFS system, although I have been building and working with computers and networks for a long time. I worked from the ground up to make sure the hardware was all up to snuff and that the LSI HBA card was flashed to IT mode, looked at the data block diagram on my motherboard to separate PCIe lane usage between my cards, have split my vdev equally between 2 backplanes, followed the 1GB of RAM per 1TB of storage space guidance, upgraded all the firmware I could (including the HDs), and used SeaChest to set 4k native sectors on all the hard drives. I believe I did a decent job creating a stable and solid system to run TrueNAS/ZFS on. But at the same time, I am new to the world of ZFS and its myriad of tunables, etc. so I could definitely use some help.

As the title states, I am having an issue where read performance falls to approximately 16Mb/s when doing a SFV (simple file validation) check on a file. The speed doesn't appreciably change whether I'm doing the SFV check over a 1gbit connection, or a 10gbit connection, and is consistently in the 16Mb/s range; not, 15, not 17, but always 16.something. I've just started filling this thing (12tb of data on there with a capacity of 200tb), and before I spend a couple weeks filling this thing up only to find out that I have to do something to fix this that requires destroying the pool and starting again, I wanted to ask the experts what could be going on. Checking a file with SFV is something I've been doing for loooooong time (not to date myself, but I just did), and is something I do on a regular basis (creating and checking). You could say it's part of my workflow; and to have it automatically take 3 to 4 times longer on ZFS is simply unacceptable.

Copying a file from the TrueNAS gives speeds that seem to be quite good (over 100mb/s+ to a computer with a 1gbit NIC, over 400mb/s+ to a computer with a 10gbit NIC) to the target computer which has a single SSD drive. I am specifically doing comparisons of SFV checks on largeish (4gb+) video files (not that it should matter). I am doing an apples to apples comparison of the same files between the TrueNAS, an old NAS I've had since 2017, a Windows server, and my desktop. The TrueNAS system, the Windows server, and my desktop all have Mellanox ConnectX-3 2 port NICs (all flashed with the same firmware). They also have 1gbit NICs as well. The old NAS's hardware specs are an Intel Atom C2338 CPU, 16gb RAM, 2x 1Gbit NICs, and is a linux mdadm RAID6 with 6HDs (10tb each), so as you can see, not exactly a powerhouse. The Windows server's hardware specs are 2x Intel Xeon X5675 @ 3Ghz, 256GB RAM, both 1Gbit and 10Gbit NICs, with a 4x10TB hardware RAID5 through a H700 RAID card. The TrueNAS's specs are:

TrueNAS-SCALE-22.12.2

Supermicro X9DRH-7F m/b

2x Intel Xeon E5-2690 v2 @ 3.00GHz

256GB ECC RAM

LSI SAS9207-8i SAS HBA (IT Mode)

Mellanox ConnectX-3 Dual Port 10Gb Ethernet

2 x 240Gb Enterprise Class Solid State Disks (Intel DC S3510) (boot mirror)

Pool: 16 disk RAIDZ3 (ST140000NM0001G 14TB) with SLOG (mirror) and special VDEV (mirror)

Yet, running a SFV check on a file on the old NAS from my desktop is three to four times faster than the TrueNAS. Running a SFV check on the Windows machine from my desktop is seven times faster than the TrueNAS. So far, this system is not in "use"; there's only one user on there (me), and nothing else is going on when I'm doing these tests.

I took a bunch of screenshots to illustrate my point. The first two are simply SFV checking the same file using the same program (ilSFV), from my old NAS and the TrueNAS:

View attachment 69462

View attachment 69463

View attachment 69466

I have used random files so that they're not cached on any of the systems; I know I could do some "underneath the hood" tuning and run specific programs to disable caching as much as possible, but I'm trying to get a sense of real-world performance. Another test, 3 different files from all 3 devices from the same target, TrueNAS in last place (no, the estimates of speed aren't perfect, but it's still last and by a significant margin):

View attachment 69467

I've used these particular SFV programs for years and they're both quite lightweight and do things the way I like. I've always noticed that doing a SFV check - even though it's just reading the file to calculate the checksum - will be slower than simply copying a file over, which is also a read operation. Here are copy operations from the TrueNAS through the desktop's 1gbit NIC and 10gbit NIC with

View attachment 69468

View attachment 69469

View attachment 69470

View attachment 69471

Everything looks good when simply copying. So why the great disparity when SFV checking??

So now, I did two SFV checks, one through the 1gbit NIC on the desktop, and the other through the 10gbit NIC, while running

View attachment 69472

View attachment 69474

View attachment 69475

A whopping .1 megabyte per second improvement through the 10gbit NIC lol.

I am including a second set of

View attachment 69477

Here's a screenshot of the dashboard while SFV checking:

View attachment 69478

Not exactly taxing this thing.

To conclude, all of these tests are performed from the same pool and dataset. Options used are ashift=12, recordsize=1MB, and LZ4 compression. Dedup is off. The only tunables I've set are "zfs_ddt_data_is_special" (to keep it off the special vdev) and "zfs_special_class_metadata_reserve_pct". All of these tests are through a SMB share (for all machines to keep things uniform). All machines are connected to the same switch, and all the 10gbit NICs have jumbo frames enabled (as does the switch). All the 10gbit NICs are on their own subnet except one of the 2 NICs on the TrueNAS (one is on the "regular" subnet).

Thank you if you've gotten to the bottom of this post, I know it's long but I wanted to be thorough so that I could prevent replying to a lot of sentence questions about what I did for this or that. The only thing I didn't talk about is creating a SFV file, and that's because the behaviour is exactly the same; about 16.something megs per second. So, I didn't bother to make this even longer by posting a bunch of screenshots showing that... I know some of you frown on such wide vdevs but I honestly don't believe that's the bottleneck here given regular read (copy) speeds are so disparate.

I've spent a considerable amount of time reading and researching to get to the point where I have finally built my first ZFS system, although I have been building and working with computers and networks for a long time. I worked from the ground up. I made sure the hardware was all up to snuff and that the LSI HBA card was flashed to IT mode. I studied the block diagram of my motherboard to separate PCIe lane usage between my cards, and I split my vdev equally between 2 backplanes. I also followed the 1GB of RAM per 1TB of storage guidance, upgraded all the firmware I could (including the HDDs), and used SeaChest to set 4K native sectors on all the hard drives. I believe I did a decent job of creating a stable and solid system to run TrueNAS/ZFS on. At the same time, I am new to the world of ZFS and its myriad tunables, so I could definitely use some help.

As the title states, read performance falls to approximately 16MB/s when I run an SFV (Simple File Verification) check on a file. The speed doesn't change appreciably whether I run the check over a 1gbit connection or a 10gbit connection. It is consistently in the 16MB/s range: not 15, not 17, but always 16.something. I've just started filling this thing (12TB of data on a pool with 200TB of capacity). I could spend a couple of weeks filling it up only to find that the fix requires destroying the pool and starting again, so I wanted to ask the experts what could be going on first. I've been checking files with SFV for a loooooong time (not to date myself, but I just did), and I do it on a regular basis (both creating and checking). You could say it's part of my workflow, and having it take 3 to 4 times longer on ZFS is simply unacceptable.
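For readers unfamiliar with the format: an SFV file lists one file name per line, each followed by that file's CRC32 checksum as 8 hex digits. Comment lines start with ';'. Creating or checking an entry therefore comes down to reading the entire file sequentially and feeding it through CRC32. The Python sketch below shows that under these assumptions. The function names and the 64 KiB read size are hypothetical and illustrative only. They are not taken from ilSFV or any other particular tool.

```python
import os
import zlib

CHUNK_SIZE = 64 * 1024  # hypothetical read size; real SFV tools may use a different one


def crc32_of_file(path: str, chunk_size: int = CHUNK_SIZE) -> int:
    """Read the file sequentially and return its CRC32 as an unsigned 32-bit int."""
    crc = 0
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:  # end of file
                break
            crc = zlib.crc32(chunk, crc)  # keep a running CRC across chunks
    return crc & 0xFFFFFFFF


def make_sfv_line(path: str) -> str:
    """Format one SFV entry: '<file name> <8 uppercase hex digits>'."""
    return f"{os.path.basename(path)} {crc32_of_file(path):08X}"


def check_sfv_line(line: str, directory: str) -> bool:
    """Recompute the CRC32 of the file named in an SFV line and compare it."""
    name, expected = line.rstrip().rsplit(" ", 1)  # file names may contain spaces
    return crc32_of_file(os.path.join(directory, name)) == int(expected, 16)
```

As a quick sanity check, a file containing only the ASCII bytes `123456789` should produce `CBF43926`, the standard CRC-32 check value.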

Copying a file from the TrueNAS gives speeds that seem quite good: 100+ MB/s to a computer with a 1gbit NIC and 400+ MB/s to a computer with a 10gbit NIC. The target computer has a single SSD. I am specifically comparing SFV checks on largeish (4GB+) video files (not that it should matter). The comparison is apples to apples, using the same files on the TrueNAS, an old NAS I've had since 2017, a Windows server, and my desktop. The TrueNAS system, the Windows server, and my desktop all have dual-port Mellanox ConnectX-3 NICs (all flashed with the same firmware), as well as 1gbit NICs. The old NAS has an Intel Atom C2338 CPU, 16GB RAM, and 2x 1Gbit NICs, and it runs a Linux mdadm RAID6 of 6 HDDs (10TB each). As you can see, it's not exactly a powerhouse. The Windows server has 2x Intel Xeon X5675 @ 3GHz, 256GB RAM, both 1Gbit and 10Gbit NICs, and a 4x10TB hardware RAID5 on an H700 RAID card. The TrueNAS's specs are:

TrueNAS-SCALE-22.12.2

Supermicro X9DRH-7F m/b

2x Intel Xeon E5-2690 v2 @ 3.00GHz

256GB ECC RAM

LSI SAS9207-8i SAS HBA (IT Mode)

Mellanox ConnectX-3 Dual Port 10Gb Ethernet

2 x 240GB Enterprise Class Solid State Disks (Intel DC S3510) (boot mirror)

Pool: 16-disk RAIDZ3 (ST140000NM0001G 14TB) with SLOG (mirror) and special VDEV (mirror)

Yet when I run an SFV check from my desktop, a file on the old NAS checks three to four times faster than one on the TrueNAS. A file on the Windows server checks seven times faster. So far, this system is not in "use". I'm the only user, and nothing else is going on while I run these tests.
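To put these ratios in perspective, here is the back-of-the-envelope arithmetic as a short Python sketch. It assumes decimal units (1 GB = 1000 MB), a 4 GB test file (the post only says 4GB+), and 3.5x as the midpoint of "three to four times faster". All of these are illustrative assumptions, not measurements.

```python
# Assumptions: decimal units (1 GB = 1000 MB) and a hypothetical 4 GB file;
# 3.5x is simply the midpoint of the "three to four times faster" estimate.
FILE_MB = 4 * 1000
TRUENAS_MB_PER_S = 16.0  # SFV check rate observed on the TrueNAS


def seconds_to_read(size_mb: float, rate_mb_per_s: float) -> float:
    """Time to read size_mb megabytes at a constant rate."""
    return size_mb / rate_mb_per_s


def link_ceiling_mb_per_s(gbit_per_s: float) -> float:
    """Raw line rate in MB/s; protocol overhead makes real throughput lower."""
    return gbit_per_s * 1000 / 8


rates = {
    "TrueNAS": TRUENAS_MB_PER_S,
    "old NAS (~3.5x)": TRUENAS_MB_PER_S * 3.5,
    "Windows server (7x)": TRUENAS_MB_PER_S * 7,
}
for name, rate in rates.items():
    print(f"{name:20s} {rate:6.1f} MB/s -> {seconds_to_read(FILE_MB, rate):6.1f} s")

for gbit in (1, 10):
    ceiling = link_ceiling_mb_per_s(gbit)
    print(f"{gbit:2d} GbE raw ceiling: {ceiling:.0f} MB/s; "
          f"16 MB/s is {TRUENAS_MB_PER_S / ceiling:.1%} of it")
```

With these assumptions, a 4 GB file takes 250 s to check at 16 MB/s versus about 36 s at seven times that rate. For comparison, 16 MB/s is roughly 13% of a 1GbE link's raw 125 MB/s.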

I took a bunch of screenshots to illustrate my point. The first two show the same program (ilSFV) checking the same file on my old NAS and on the TrueNAS:

View attachment 69462

View attachment 69463

View attachment 69466

I used random files so that they wouldn't be cached on any of the systems. I know I could do some "under the hood" tuning and run specific programs to disable caching as much as possible, but I'm trying to get a sense of real-world performance. Another test used 3 different files on all 3 devices, checked from the same target machine. TrueNAS again came in last place. No, the speed estimates aren't perfect, but it's still last, and by a significant margin:

View attachment 69467

I've used these particular SFV programs for years. They're both quite lightweight and do things the way I like. I've always noticed that an SFV check is slower than simply copying a file, even though both only read the file (the check just calculates the checksum as it goes). Here are copy operations from the TrueNAS through the desktop's 1gbit NIC and 10gbit NIC, with zpool iostat output:

View attachment 69468

View attachment 69469

View attachment 69470

View attachment 69471

Everything looks good when simply copying. So why the great disparity when SFV checking??

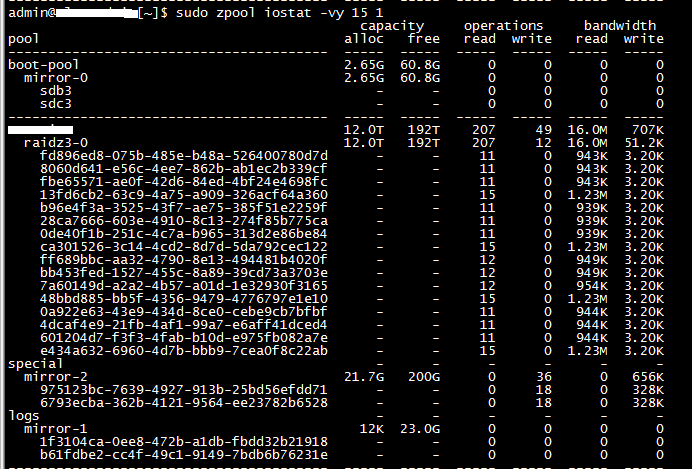

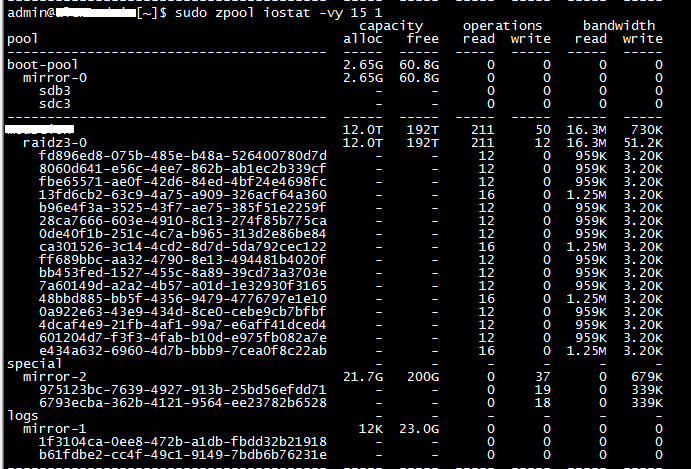
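One way to check whether the access pattern, rather than raw disk or network bandwidth, is what differs between copying and SFV checking is to time plain sequential reads of the same file with different read sizes from the client. The sketch below is a hypothetical diagnostic. It assumes the SMB share is reachable as an ordinary path (for example, a mapped drive letter). It also assumes the file is not already cached on either end, which is hard to guarantee on repeated runs. The function names are illustrative.

```python
import time
from typing import Dict, Iterable, Optional


def read_throughput(path: str, chunk_size: int,
                    limit_bytes: Optional[int] = None) -> float:
    """Read `path` sequentially in `chunk_size` pieces and return MB/s (10**6 bytes/s)."""
    total = 0
    start = time.perf_counter()
    # buffering=0: each f.read() is a single read() system call of up to chunk_size bytes
    with open(path, "rb", buffering=0) as f:
        while limit_bytes is None or total < limit_bytes:
            chunk = f.read(chunk_size)
            if not chunk:  # end of file
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    return total / 1e6 / elapsed if elapsed > 0 else float("inf")


def benchmark(path: str, sizes_kib: Iterable[int] = (4, 64, 1024, 8192),
              limit_bytes: Optional[int] = None) -> Dict[int, float]:
    """Measure read throughput for several read sizes (in KiB) on the same file."""
    return {kib: read_throughput(path, kib * 1024, limit_bytes) for kib in sizes_kib}
```

For example, you could call `benchmark(r"Z:\videos\sample.mkv", limit_bytes=500 * 10**6)`, where the path is hypothetical. Because re-reading the same file will hit caches, using a fresh, equally large file for each read size gives fairer numbers.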

Next, I ran two SFV checks, one through the desktop's 1gbit NIC and the other through its 10gbit NIC, while running zpool iostat. Notice how the output is practically identical:

View attachment 69472

View attachment 69474

View attachment 69475

A whopping .1 megabyte per second improvement through the 10gbit NIC lol.

I am including a second set of zpool iostat output. The top is through the 1gbit NIC and the bottom through the 10gbit NIC. Again, the readings are nearly identical (I actually did this over 20 times, but I'm not going to paste all of that):

View attachment 69477
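Comparing many zpool iostat samples by eye gets tedious. The sketch below assumes the human-readable bandwidth columns use 1024-based suffixes (K, M, G, T). The `-p` option of `zpool iostat`, per its man page, prints exact values instead, which avoids parsing altogether. The sketch averages samples copied from the read-bandwidth column. The helper names and the sample values in the usage note are hypothetical.

```python
from statistics import mean
from typing import List

# 1024-based multipliers, assumed to match ZFS's human-readable number format
_SUFFIX = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_zfs_number(text: str) -> float:
    """Convert a value such as '16.3M', '512K' or '0' to bytes."""
    text = text.strip()
    if text and text[-1].upper() in _SUFFIX:
        return float(text[:-1]) * _SUFFIX[text[-1].upper()]
    return float(text)


def mean_bandwidth_mib(samples: List[str]) -> float:
    """Average a list of read-bandwidth samples, in MiB/s."""
    return mean(parse_zfs_number(s) for s in samples) / 1024**2


def relative_difference(a: float, b: float) -> float:
    """|a - b| as a fraction of the larger value."""
    return abs(a - b) / max(a, b)
```

For example, `mean_bandwidth_mib(["16.3M", "16.4M"])` averages two made-up samples. Running `relative_difference` on the 1gbit and 10gbit averages puts a number on "nearly identical".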

Here's a screenshot of the dashboard while SFV checking:

View attachment 69478

Not exactly taxing this thing.

To conclude, all of these tests were performed on the same pool and dataset. The options used are ashift=12, recordsize=1MB, and LZ4 compression; dedup is off. The only tunables I've set are "zfs_ddt_data_is_special" (to keep the dedup table off the special vdev) and "zfs_special_class_metadata_reserve_pct". All of these tests go through an SMB share on every machine, to keep things uniform. All machines are connected to the same switch, and all the 10gbit NICs have jumbo frames enabled (as does the switch). All the 10gbit NICs are on their own subnet. The one exception is one of the 2 ports on the TrueNAS, which is on the "regular" subnet.
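Since the pool is a single 16-wide RAIDZ3 vdev with ashift=12 and recordsize=1M, it may help to see how one full record is laid out across the disks. The sketch below follows the RAIDZ allocation arithmetic in OpenZFS's `vdev_raidz_asize`, assuming it matches that function. First, round the record up to whole 4 KiB sectors. Next, add `nparity` sectors for every row of data sectors. Finally, pad the total to a multiple of `nparity + 1`. It also assumes the record is stored uncompressed (LZ4 does little for most video) and ignores gang blocks and metadata. The function name is hypothetical.

```python
def raidz_layout(psize: int, disks: int, nparity: int, ashift: int) -> dict:
    """Sketch of RAIDZ allocation for one block of `psize` bytes.

    Assumed to mirror the arithmetic of OpenZFS's vdev_raidz_asize.
    """
    sector = 1 << ashift
    ndata = disks - nparity
    data_sectors = (psize + sector - 1) // sector        # round up to whole sectors
    rows = (data_sectors + ndata - 1) // ndata            # stripes needed for the data
    parity_sectors = nparity * rows                       # nparity sectors per row
    total = data_sectors + parity_sectors
    total = -(-total // (nparity + 1)) * (nparity + 1)    # pad to a multiple of nparity + 1
    return {
        "data_sectors": data_sectors,
        "parity_sectors": parity_sectors,
        "padding_sectors": total - data_sectors - parity_sectors,
        "allocated_bytes": total * sector,
        "max_bytes_per_data_disk": rows * sector,
        "efficiency": data_sectors / total,
    }


layout = raidz_layout(psize=1 << 20, disks=16, nparity=3, ashift=12)
for key, value in layout.items():
    print(f"{key:24s} {value}")
```

For a 1 MiB record on this 16-wide RAIDZ3, the arithmetic gives 256 data sectors and 60 parity sectors with no padding, about 81% space efficiency. No single data disk is asked for more than 80 KiB of that record.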

Thank you if you've made it to the bottom of this post. I know it's long, but I wanted to be thorough so I wouldn't have to answer a lot of follow-up questions about what I did for this or that. The only thing I didn't cover is creating an SFV file, because the behaviour is exactly the same: about 16.something MB/s. So I didn't bother making this even longer by posting a bunch of screenshots showing that. I know some of you frown on such wide vdevs, but I honestly don't believe that's the bottleneck here, given how much faster regular reads (copies) are.