itsjustfrank · Dabbler · Joined Mar 25, 2023 · 10 messages

Hello everyone,

Firstly, this is my first post, so apologies in advance if anything here isn't up to spec!

I've been researching this issue for a few days now and, despite my best efforts, I'm at a loss as to the cause or a possible solution.

Specs:

TrueNAS-12.0-U8.1 Core

Motherboard make and model: Acer Aspire M3910

Intel H57 Chipset

CPU make and model: Intel i7 870 2.93GHz

RAM quantity: 12GB @ 1333MT/s 2x4GB 2x2GB

Hard drives, quantity, model numbers, and RAID configuration, including boot drives:

6 drives total

3x 6TB WD Red Plus 5640RPM 6Gb/s CMR 128MB Cache WD60EFZX

2x 6TB Toshiba N300 7200RPM 6Gb/s 256MB Cache HDWG160XZSTA

1x 128GB Silicon Power SSD Boot drive SU128GBSS3A58A25CA

RAIDZ-1 config

Network controller: Realtek RTL8111E

The Issue:

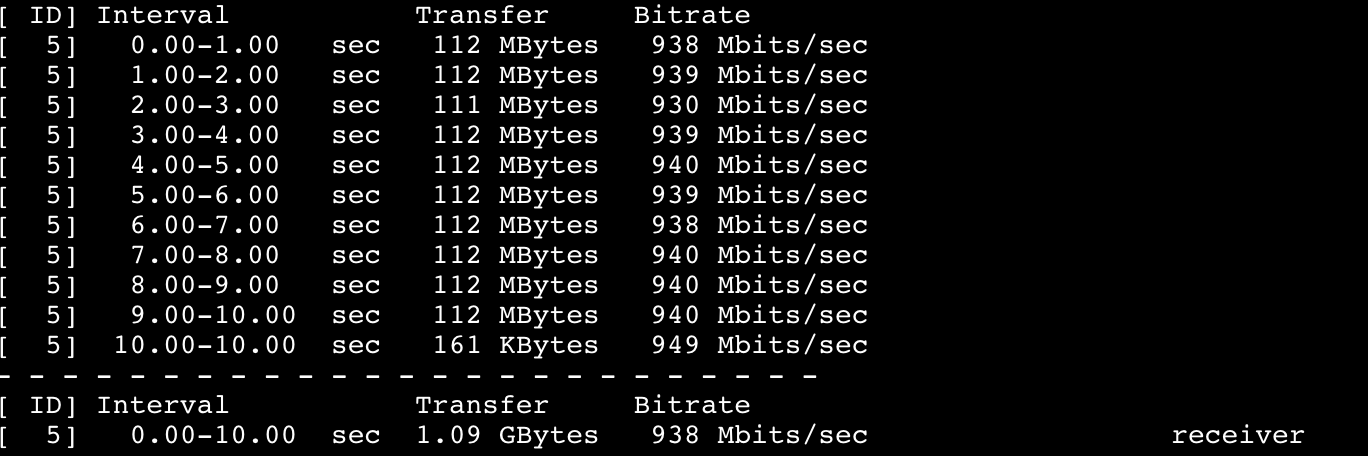

Over the past few days, I've been trying to offload files from an old iMac to my NAS via an SMB share. Whenever I reach a folder with many small files, transfer speeds drop below 1MB/s. By contrast, when I transfer folders of large files I get a consistent throughput of around 110MB/s. I also ran an iperf3 test (results below).

I understand that small files naturally take longer to transfer and I don't expect full gigabit speeds, but the current speeds, which range from a few KB/s to maybe 8MB/s at best, seem unusually low. I'm not sure whether this is normal behavior or whether there is something I can do to improve it. For comparison, copying the same folder to an external drive runs at around 20-50MB/s.
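To put rough numbers on why small files are so much slower, here is a minimal sketch of a latency-plus-bandwidth model. It assumes every file costs a fixed per-file overhead (create, metadata updates, close) on top of the ~110MB/s streaming rate mentioned above; the 10 ms overhead is a made-up placeholder, not a measurement of this system. Under those assumptions, 10KB files come out at roughly 1MB/s while large files approach the link speed, which is the same shape as the behavior described here.

```python
# Latency-plus-bandwidth model of copying many files of one size.
# ASSUMPTION: each file pays a fixed overhead (open/create, metadata, close
# round trips) in addition to size / link bandwidth. The default overhead is
# an illustrative guess, not a measured value for any particular NAS.


def effective_throughput_mb_s(file_size_mb: float,
                              link_mb_s: float = 110.0,
                              per_file_overhead_s: float = 0.01) -> float:
    """Return the average MB/s achieved when copying files of file_size_mb each."""
    time_per_file = per_file_overhead_s + file_size_mb / link_mb_s
    return file_size_mb / time_per_file


if __name__ == "__main__":
    # Sweep from ~10KB files up to ~1GB files.
    for size_mb in (0.01, 0.1, 1.0, 10.0, 1000.0):
        rate = effective_throughput_mb_s(size_mb)
        print(f"{size_mb:>8} MB files -> {rate:7.2f} MB/s")
```

The takeaway from the model is that once per-file overhead dominates, a faster link or NIC barely moves small-file throughput; shrinking the per-file cost is what matters.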

If anyone has tips on what might be causing this, please let me know. I recognize my hardware is less than ideal, especially the Realtek network controller. I'm willing to spend a small amount on minor hardware upgrades, such as a decent NIC or bumping the RAM to the 16GB maximum, but if I do, I'd like some confidence that it would actually improve the situation. I don't want to spend much on this machine, as I plan to build a proper 10-gigabit system later this year. I essentially just want these speeds to improve in the interim so writing data is less painful. Thank you all for your time and assistance!
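To check whether an upgrade actually helps, one option is a repeatable before/after benchmark. The sketch below is hypothetical (the script name `smallfile_bench.py` and the `/Volumes/YourShare` path are placeholders; macOS mounts SMB shares under `/Volumes` by default). It writes many small files and then a few large ones into a scratch folder on the share, reports MiB/s for each, and deletes the scratch folder afterwards. Random data is used so ZFS compression can't inflate the numbers, and each file is fsynced, which may make results lower than a normal Finder copy.

```python
import os
import shutil
import sys
import tempfile
import time


def write_files(target_dir: str, prefix: str, count: int, size_bytes: int) -> float:
    """Write `count` files of `size_bytes` bytes into target_dir and return MiB/s."""
    payload = os.urandom(size_bytes)  # incompressible data
    start = time.perf_counter()
    for i in range(count):
        path = os.path.join(target_dir, f"{prefix}_{i:06d}.bin")
        with open(path, "wb") as f:
            f.write(payload)
            f.flush()
            os.fsync(f.fileno())  # push each file out rather than leaving it cached
    elapsed = time.perf_counter() - start
    total_mib = count * size_bytes / (1024 * 1024)
    return total_mib / elapsed if elapsed > 0 else float("inf")


def run(target_root: str, small_count: int = 500, small_size: int = 16 * 1024,
        large_count: int = 4, large_size: int = 64 * 1024 * 1024) -> dict:
    """Compare many small-file writes against a few large-file writes under target_root."""
    # Work inside a fresh scratch directory so cleanup never touches existing data.
    scratch = tempfile.mkdtemp(prefix="smallfile_bench_", dir=target_root)
    try:
        results = {
            "small_mib_s": write_files(scratch, "small", small_count, small_size),
            "large_mib_s": write_files(scratch, "large", large_count, large_size),
        }
    finally:
        shutil.rmtree(scratch)
    return results


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: python smallfile_bench.py /Volumes/YourShare")
    else:
        for name, rate in run(sys.argv[1]).items():
            print(f"{name}: {rate:.2f} MiB/s")
```

Running it once against the NAS share and once against the external drive, before and after any change, gives directly comparable numbers instead of relying on Finder's progress display.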

Iperf3 results:
