Hello,

I have some simple questions:

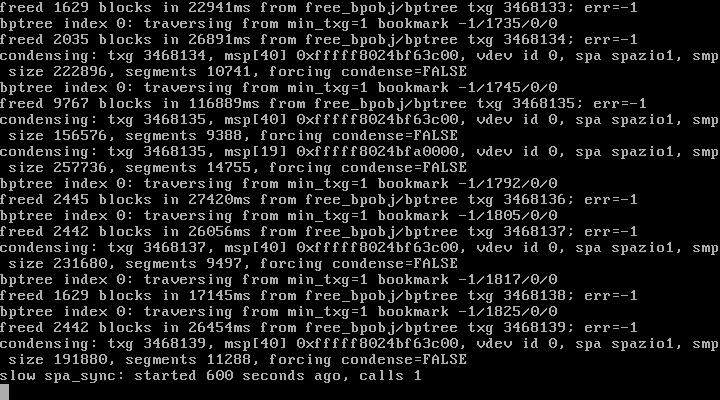

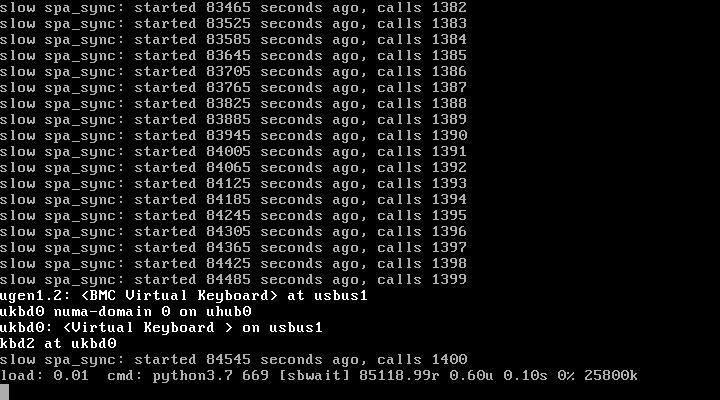

1) When the system reports "slow spa_sync: ...", is the server actually doing some work, or is it idle/waiting?

2) If I press Ctrl+T, I see a load of 0.00 and the state [sbwait], so it seems the server is doing nothing. Is there a way to see what is going on?

3) I deleted a 3 TB dataset that had deduplication enabled, and I know this could be the cause. But why, with 16 cores/32 threads and 128 GB of RAM, does the server have to be down for days for work (a scrub or a deletion) that could be done in the background?

FreeNAS: 11.3-U2

Machine: HP DL360e

CPU: 2x E5-2450L (8 cores/16 threads each)

RAM: 128 GB (64 GB per CPU), 1333 MHz

Storage:

1 pool (RAIDZ1) + 500 GB NVMe L2ARC

Boot status:

| Physical drive location | Status | Serial number | Model | Media type | Capacity | Firmware | Drive configuration | Encryption status |
|---|---|---|---|---|---|---|---|---|
| Port 1I Box 1 Bay 1 | OK | WFG0M610 | ST4000LM024-2AN1 | HDD | 4000 GB | 0001 | Unconfigured | Not Encrypted |
| Port 1I Box 1 Bay 2 | OK | WFG0X4CB | ST4000LM024-2AN1 | HDD | 4000 GB | 0001 | Unconfigured | Not Encrypted |
| Port 1I Box 1 Bay 3 | OK | WFF0V8VK | ST4000LM024-2AN1 | HDD | 4000 GB | 0001 | Unconfigured | Not Encrypted |
| Port 1I Box 1 Bay 4 | OK | WFG148M6 | ST4000LM024-2AN1 | HDD | 4000 GB | 0001 | Unconfigured | Not Encrypted |
