I had a single 4 TB drive that was just starting to give me read errors, so I got a new 5 TB drive for a pool that uses only one drive. (This is for Time Machine Mac backups, so I don't need more than one drive.)

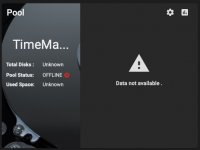

I used the replace drive option from the dashboard, and it took a while for TrueNAS to resilver the pool. After the process was done, I removed the old drive, but the pool is now offline and I cannot import it. I tried a few things I read here, but no luck. At this point I don't care about the data; all I would like to do is add the new 5 TB disk to the existing pool and continue making my Time Machine backups. I know I can just create a new pool, but the existing one already has the permissions and storage limits for the two Macs I back up.

Thanks in advance!
