ndboost (Explorer; joined Mar 17, 2013; 78 messages)

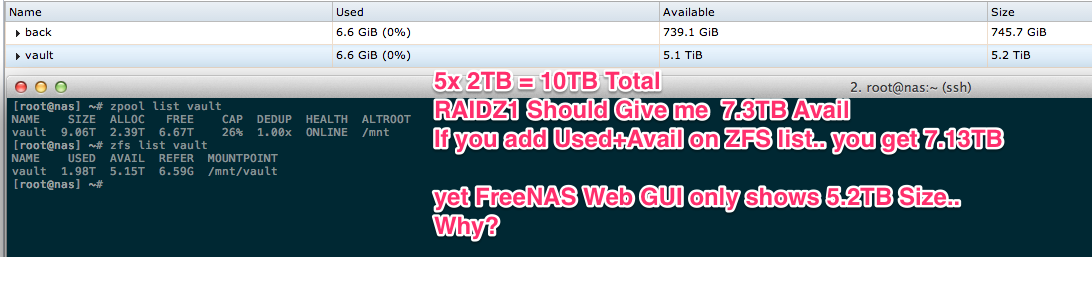

I am running FreeNAS 9.2.0-RELEASE.

I originally had a 4x 2TB Z1 configuration, which gave me ~5.5TB of usable space.

I initially tried to add a 5th disk, but it was added to the pool as a separate striped vdev. This is bad: a single-disk vdev has no redundancy, and losing it would take the whole pool down. So I took a recursive snapshot of the root volume, /vault.

I then temporarily sent that snapshot to another set of disks in the same machine (a completely separate pool). This pool is called "back"; you can see it in the top screenshot.

I then rebuilt the "Vault" pool as a 5x 2TB Z1 configuration. Once that was done, I restored the snapshot onto the new vault to get all my data and volumes back. That all worked fine and dandy.
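The snapshot, send and restore steps above can be sketched as follows. This is a minimal dry-run Python sketch, assuming the standard `zfs snapshot -r`, `zfs send -R` and `zfs receive` commands; the snapshot name `migrate`, the landing dataset `back/vault` and the function name `migration_commands` are hypothetical, and the code only builds the command strings rather than running them. It is not necessarily the exact sequence used in this post.

```python
# Dry-run sketch: builds (but never executes) the ZFS commands for a
# snapshot -> temporary copy -> rebuild -> restore migration.
# Pool names "vault" and "back" come from the post; the snapshot name and
# the "back/vault" landing dataset are hypothetical.


def migration_commands(src_pool: str, backup_pool: str, snap: str) -> list:
    """Return the shell commands for the migration, in order."""
    backup_ds = f"{backup_pool}/{src_pool}"  # hypothetical landing dataset
    return [
        # 1. Recursive snapshot of the pool's root dataset and all children.
        f"zfs snapshot -r {src_pool}@{snap}",
        # 2. A replication stream (-R) carries child datasets, zvols,
        #    snapshots and properties to the temporary pool.
        f"zfs send -R {src_pool}@{snap} | zfs receive {backup_ds}",
        # 3. (Destroy and recreate the source pool as a 5-disk RAID-Z1,
        #    e.g. through the FreeNAS GUI; intentionally not scripted here.)
        # 4. Restore. -F is needed because the new pool's root dataset
        #    already exists and must be overwritten by the stream.
        f"zfs send -R {backup_ds}@{snap} | zfs receive -F {src_pool}",
    ]


if __name__ == "__main__":
    for cmd in migration_commands("vault", "back", "migrate"):
        print(cmd)
```

Printing the commands first makes it easy to review them before running anything destructive by hand.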

I stumbled across a strange inconsistency, though. Doing the math, I would expect the following sizes:


- 4x 2TB Z1 = ~5.5TB usable

- 5x 2TB Z1 = ~7.3TB usable
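The expected figures follow from RAID-Z1 spending one disk's worth of space on parity, plus the difference between the decimal TB on the drive label and the binary TiB that ZFS reports. A rough Python sketch of that arithmetic, assuming 2TB means 2×10^12 bytes and ignoring metadata and RAID-Z allocation overhead (`raidz_usable_tib` is a hypothetical helper name):

```python
# Rough RAID-Z usable capacity: (disks - parity) disks' worth of data space,
# converted from the vendor's decimal TB to binary TiB (what the zfs tools
# show with a "T" suffix). Ignores metadata and RAID-Z allocation padding,
# so real pools report somewhat less.

TB = 10**12   # drive vendors' terabyte
TiB = 2**40   # binary tebibyte


def raidz_usable_tib(disks: int, disk_tb: float, parity: int = 1) -> float:
    """Approximate usable space of one RAID-Z vdev, in TiB."""
    if disks <= parity:
        raise ValueError("need more disks than parity devices")
    return (disks - parity) * disk_tb * TB / TiB


for n in (4, 5):
    print(f"{n}x 2TB RAID-Z1 ~ {raidz_usable_tib(n, 2):.2f} TiB")
# 4x 2TB RAID-Z1 ~ 5.46 TiB
# 5x 2TB RAID-Z1 ~ 7.28 TiB
```

Treat these as upper bounds when comparing against what the pool actually reports.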

Having noticed this, I have three questions:

- Why is this happening?

- How can I fix it?

- Why doesn't the FreeNAS web GUI show Space Used as the sum of all of its sub-volumes?
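For the third question, one way to check the raw numbers from the command line is to compare a dataset's `used` with the sum of its direct children. The sketch below is a hedged Python example, assuming the output of `zfs get -Hp -r -t filesystem,volume -o name,value used vault` has been saved to a text file; the helper names and the file name `used.txt` are hypothetical. In ZFS, a dataset's `used` already includes all of its descendants, so adding the children on top of the parent would double count.

```python
# Sketch: compare a dataset's own "used" with the sum of its direct children.
# Input is the saved output of (run on the FreeNAS box):
#     zfs get -Hp -r -t filesystem,volume -o name,value used vault > used.txt
# -H gives tab-separated lines without a header; -p gives exact byte counts.
# A parent's "used" includes its descendants plus its own data, snapshots
# and refreservation, so it is normally at least the children's sum.
import sys


def used_by_dataset(lines):
    """Map dataset name -> used bytes, skipping blank lines and snapshots."""
    used = {}
    for line in lines:
        line = line.rstrip("\n")
        if not line:
            continue
        name, value = line.split("\t")
        if "@" in name:  # defensive: skip snapshots if any are listed
            continue
        used[name] = int(value)
    return used


def children_sum(used, parent):
    """Sum 'used' over direct children of parent only (no double counting)."""
    depth = parent.count("/") + 1
    return sum(v for name, v in used.items()
               if name.startswith(parent + "/") and name.count("/") == depth)


def report(lines, root):
    used = used_by_dataset(lines)
    print(f"{root}: used {used[root]} bytes, "
          f"direct children sum {children_sum(used, root)} bytes")


if __name__ == "__main__" and len(sys.argv) > 1:
    # usage (hypothetical): python3 this_script.py used.txt [root]
    root = sys.argv[2] if len(sys.argv) > 2 else "vault"
    with open(sys.argv[1]) as f:
        report(f, root)
```

Counting only direct children matters because a grandchild's space is already included in its parent's `used`.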