briandm81 (Dabbler) · Joined: Jun 8, 2016 · Messages: 33

So I've set up a couple of NFS shares to test with on my ESXi sandbox. I've configured a pair of datasets to share. One dataset is on a striped set of mirrors with eight 2TB 7200RPM drives. The other is a dataset on a P3605 1.6TB NVMe SSD. I have the ESXi box connected to the FreeNAS box with a DAC and an Intel X520 10Gb adapter. I added a new VMDK to one of my VMs and ran a few baselines to see how things looked. Here are the results:

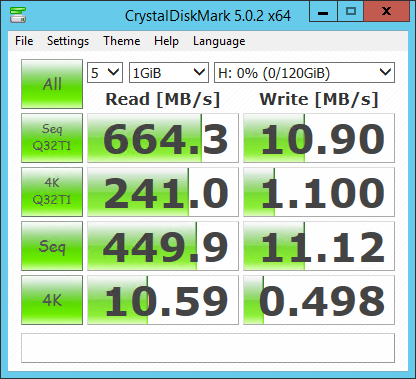

The hard drive config:

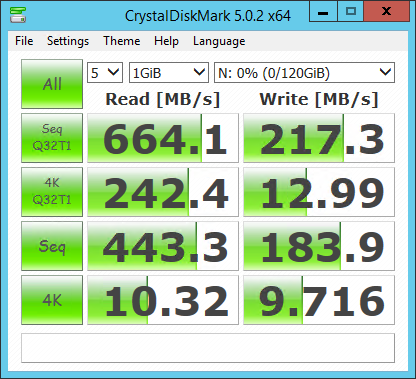

The NVMe:

The hard drive configuration looks good on the reads. Honestly, better than I expected. The writes, however, look glacial. I'm not sure if this is a sync issue or, more likely, a Brian (me) doesn't know what he's doing issue. I'll be adding a SLOG and L2ARC to this setup as well, but I wanted a baseline before I started to get too fancy. ;)

The NVMe configuration looked disappointing. I would have expected much faster reads. I don't know that I expected it to saturate the 10Gb link, but the drive surely has the capability of doing so. The writes are much better than the hard drive configuration, but still very slow for what the drive is. I'm starting to research settings and tweaking, but I was hoping for some feedback before I dig too deep in the wrong places. Thanks in advance!
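For reference, here is a quick back-of-the-envelope sketch of what "saturating the 10Gb link" would mean in the MB/s units most benchmark tools report. The function name is made up for illustration, and the 0.94 efficiency factor is only my assumption for protocol overhead, not something I measured.

```python
def link_ceiling_mb_per_s(link_gbit: float, efficiency: float = 1.0) -> float:
    """Convert a link speed in gigabits/s to megabytes/s (decimal units).

    efficiency is a hypothetical fudge factor for protocol overhead
    (Ethernet/IP/TCP/NFS framing); 1.0 means raw line rate.
    """
    return link_gbit * 1000 / 8 * efficiency


# Raw line rate of a 10Gb link: 1250 MB/s
raw = link_ceiling_mb_per_s(10)

# Assumed usable ceiling after overhead (0.94 is a guess, not a measurement)
usable = link_ceiling_mb_per_s(10, efficiency=0.94)

print(f"raw ceiling:    {raw:.0f} MB/s")
print(f"usable ceiling: {usable:.0f} MB/s (assumed efficiency)")
```

Anything well below that ceiling on the NVMe dataset points at something other than the wire itself.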

Oh, and here are my specs:

Processor(s): (2) Intel Xeon E5-2670 @ 2.6 GHz

Motherboard: Supermicro X9DR7-LNF4-JBOD

Memory: 256 GB - (16) Samsung 16 GB ECC Registered DDR3 @ 1600 MHz

Chassis: Supermicro CSE-846TQ-R900B

HBA: (2) Supermicro AOC-2308-l8e

NVMe: Intel P3600 1.6TB NVMe SSD

Solid State Storage: (2) Intel S3700 200GB SSD (Not in use yet)

Hard Drive Storage: (9) HGST Ultrastar 7K3000 2TB Hard Drives

Network Adapter: (2) Intel X520-DA2 Dual Port 10 Gbps Network Adapters

And here's the overall lab environment. I'm working with HyperionFN (the FreeNAS box) and HyperionESXi2 (the sandbox running ESXi 6.0).
