briandm81 (Dabbler, joined Jun 8, 2016, 33 messages)

I am benchmarking a database on several different storage mechanisms. One of them is iSCSI, which I am providing from my FreeNAS server. I am running a fresh installation of 11.0 on the following hardware:

Processor(s) (2) Intel Xeon E5-2670 @ 2.6 GHz

Motherboard Supermicro X9DR7-LNF4-JBOD

Memory 256 GB - (16) Samsung 16 GB ECC Registered DDR3 @ 1600 MHz

Chassis Supermicro CSE-846TQ-R900B

Chassis Supermicro CSE-847E16-RJBOD1

HBA (1) Supermicro AOC-2308-l8e

HBA (1) LSI 9200-8e

NVMe Intel P3600 1.6TB NVMe SSD

Solid State Storage (2) Intel S3700 200GB SSD

Hard Drive Storage (9) HGST Ultrastar 7K3000 2TB Hard Drives

Hard Drive Storage (17) HGST 3TB 7K4000 Hard Drives

Network Adapter (2) Intel X520-DA2 Dual Port 10 Gbps Network Adapters

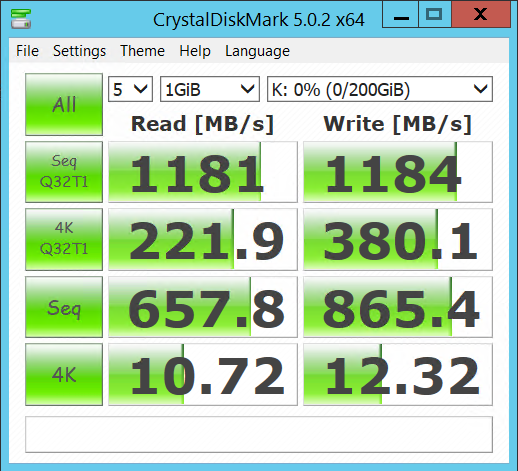

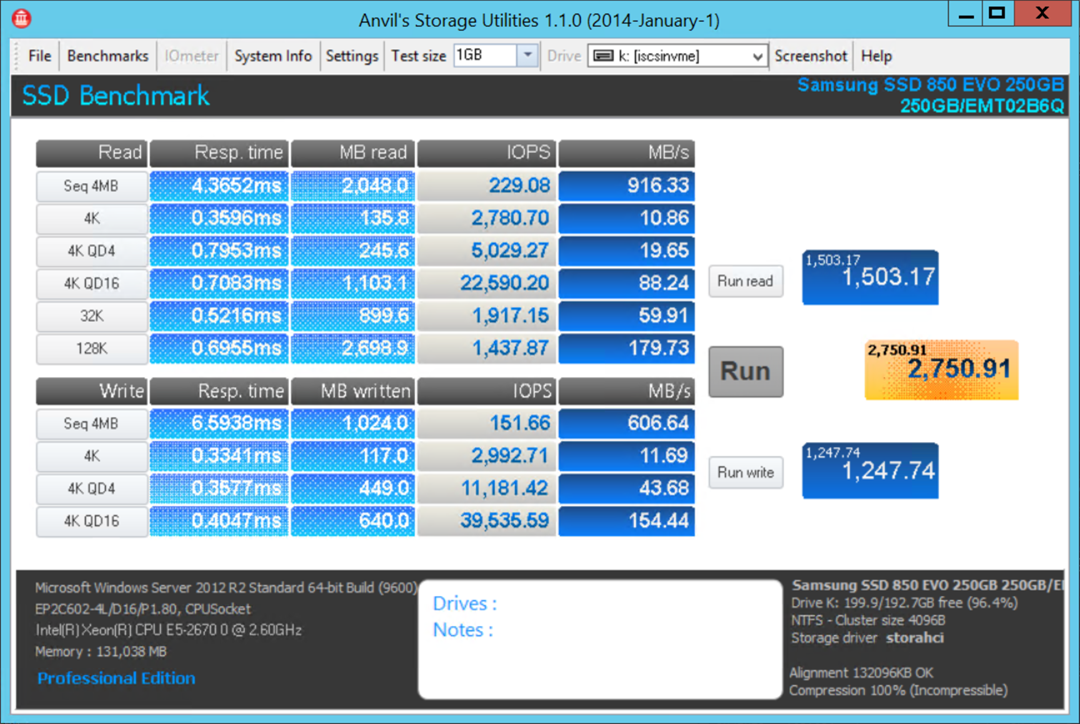

I have created a zvol directly on my NVMe drive and connected this server to my physical Windows server via a 3-meter copper DAC cable. I have tried with and without jumbo frames, but the performance doesn't seem to improve. 4K performance is just bad:

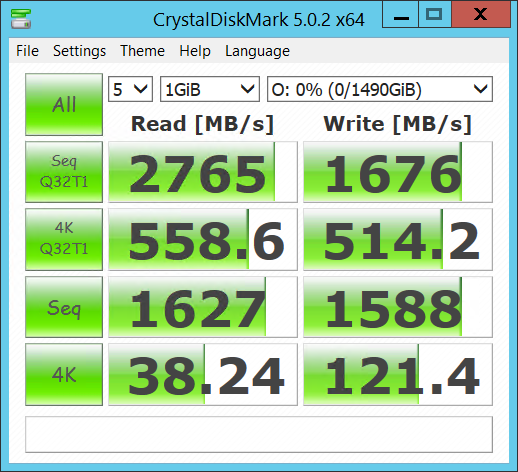

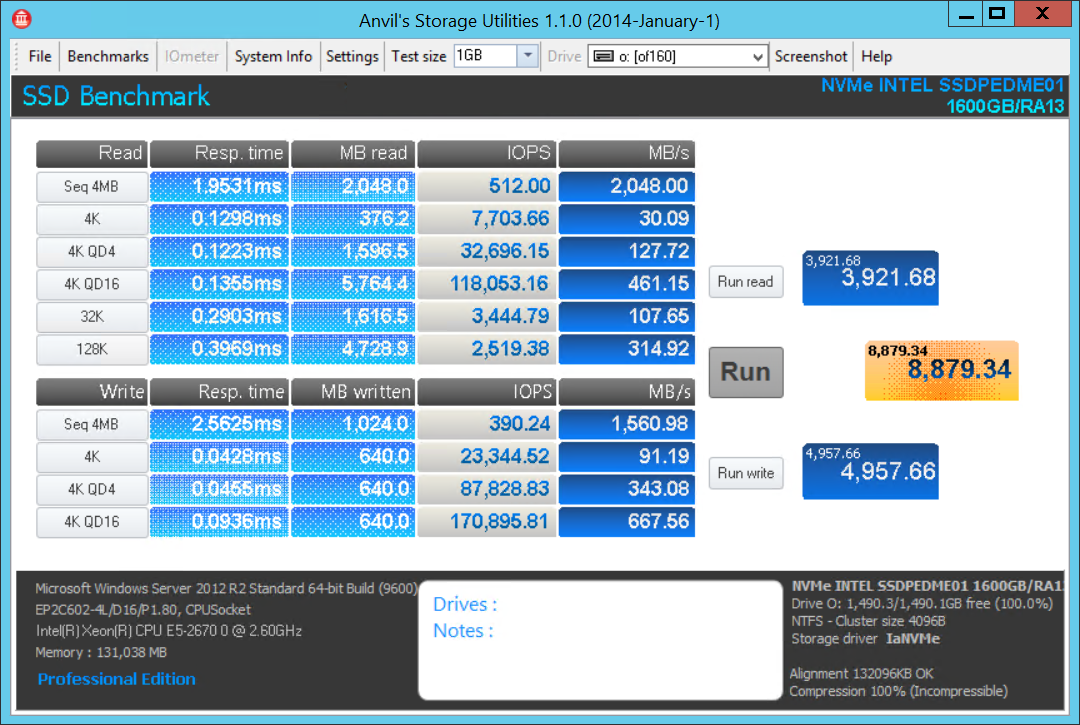

Here is the same type of drive in the same physical box that connects to FreeNAS:

Am I missing something super simple? It seems like I should have plenty of horsepower to make this lightning fast. I really need QD1 to be way faster to get decent performance on my DB.
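For context on why QD1 matters so much here: with only one I/O in flight, throughput is bounded by the per-request round-trip latency rather than by link bandwidth. The rough sketch below shows that relationship for 4K blocks. The function name and the latency values are hypothetical placeholders for illustration, not measurements from this setup.

```python
def qd1_throughput_mb_s(latency_us: float, block_bytes: int = 4096) -> float:
    """Upper bound on throughput (MB/s) with exactly one I/O in flight."""
    # At queue depth 1, each request must complete before the next starts,
    # so IOPS is simply the inverse of the per-request latency.
    iops = 1_000_000 / latency_us
    return iops * block_bytes / 1_000_000


# Illustrative latencies only (assumed values, not benchmark results).
for latency_us in (25, 100, 250, 500):
    print(f"{latency_us:>4} us per I/O -> {qd1_throughput_mb_s(latency_us):6.2f} MB/s")
```

Under this simple model, every microsecond added per request by the network and iSCSI stack lowers 4K QD1 throughput directly, regardless of how fast the 10 Gbps link or the NVMe drive is.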

Thanks in advance!
