Squigley (Dabbler, joined May 13, 2020, 19 messages)

I have just done a fresh install of TrueNAS 12.0, using the ISO I downloaded an hour ago, in a VM running under KVM on a Dell R710 (very similar software and hardware config to another R710 I have, which runs FreeNAS with no issues). The VM is configured with 16 CPUs (copy host CPU configuration; the CPUs are E5520s) and 10GB RAM.

The "disks" I have attached are 3 partitions on an SSD on the SATA controller. TrueNAS was installed on the first "disk".

Host OS is Ubuntu 20.04 server.

The VM has 3 PCI passthrough devices attached: 2 NICs (the onboard ones) and an LSI RAID controller with a 2008 chip crossflashed into HBA mode. IOMMU and all that is configured correctly, as far as I can tell, based on having done it on the other R710, and on seeing that the vfio module is bound to the devices, the DMAR output, etc.

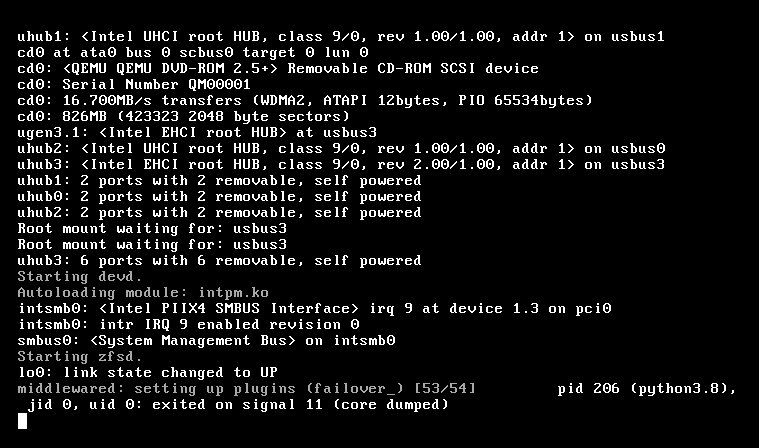
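For anyone wanting to repeat the vfio binding check on the host, here is a minimal sketch. It assumes a Linux host where sysfs exposes each PCI device's bound driver as a `driver` symlink; the function name `bound_driver` and the PCI addresses in the example are hypothetical placeholders, not the addresses from my system.

```python
import os


def bound_driver(pci_addr, sysfs_root="/sys/bus/pci/devices"):
    """Return the name of the kernel driver bound to a PCI device, or None.

    Reads the `driver` symlink that Linux sysfs creates for a bound device.
    """
    link = os.path.join(sysfs_root, pci_addr, "driver")
    if not os.path.islink(link):
        # Device missing, or no driver currently bound to it.
        return None
    return os.path.basename(os.readlink(link))


if __name__ == "__main__":
    # Hypothetical addresses: substitute the ones reported by lspci on the host.
    for addr in ("0000:01:00.0", "0000:01:00.1", "0000:03:00.0"):
        print(addr, bound_driver(addr))
```

A device that is ready for passthrough would be expected to report `vfio-pci` here; `None` means nothing is bound (or the address is wrong).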

On the first (and every) boot, it gets to the point where it is loading the plugins, and plugin 53/54 fails, saying "setting up plugins (failover_) pid XXX (python 3.8), jid 0, uid 0: exited on signal 11 (core dumped)".

The pid changes slightly on each boot, but is always around 200 (202, 206, 210, 211, etc.).

Screenshot:

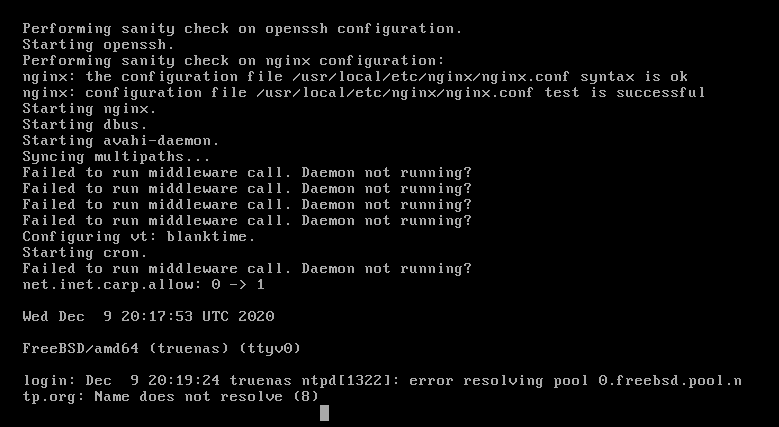

It then hangs here for a minute or two before continuing, and I see it output:

##############################################################

MIDDLEWARED FAILED TO START, SYSTEM WILL NOT BEHAVE CORRECTLY!

##############################################################

as I have seen in other people's posts (when searching for this issue).

It then keeps whipping along outputting stuff, followed by some more "failed to run middleware call. Daemon not running?" errors.

I log in as root, and get a Python stack trace:

It appears that it's not able to connect to itself?
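To tell "nothing is listening" apart from other connection failures, a small probe like the following could be run from the shell. This is only a sketch: `can_connect` is a hypothetical helper, and `127.0.0.1:6000` is my assumption for where the middleware listens locally, so check it against the address shown in the actual stack trace.

```python
import socket


def can_connect(host, port, timeout=2.0):
    """Try a plain TCP connection and report (success, reason)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True, "connected"
    except ConnectionRefusedError:
        # Nothing is listening on that port, e.g. the daemon never started.
        return False, "refused"
    except OSError as exc:
        # Covers timeouts and unreachable-host errors as well.
        return False, f"failed: {exc}"


if __name__ == "__main__":
    # Assumed address of the local middleware listener; adjust as needed.
    print(can_connect("127.0.0.1", 6000))
```

A "refused" result would be consistent with the daemon simply not running, rather than a networking problem inside the VM.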

I tried disconnecting the RAID controller PCI device (as it was also causing a "gptzfsboot: error 128 lba <some block #>" error), in case it was related, but that made no difference to either problem.

I also tried connecting a bridged ethernet adapter, as well as the 2 real PCI NICs, but that made no difference.

I also tried with only the bridged ethernet adapter attached and no PCI devices (neither NICs nor RAID controller), but it made no difference, other than that once the failed start timed out, the system got an IP via DHCP, generated an SSH host key, etc. (The PCI NICs are not yet patched, so DHCP would have failed with them, but I don't think that is related, since the middlewared failure occurs before DHCP is attempted.)

The DNS error occurred after leaving the system at the login prompt for a little while. This is strange, since it should have valid DNS: I saw it get a valid IP via DHCP earlier in the boot process.

Logging in as root gives the identical Python stack trace as before, including the "self.sock.connect(self.bind_addr)" error.

I'm at a loss. Is there something dumb I have misconfigured, or something necessary I have not configured at all?
