Hi all.

Please help me solve a problem with low sequential read speed: it is about half the write speed.

Installed TrueNAS Scale:

OS Version:TrueNAS-SCALE-22.02.4

Product:ProLiant XL420 Gen9 (HP Apollo 4200 Gen9)

Model:Intel(R) Xeon(R) CPU E5-2643 v3 @ 3.40GHz

Memory: 1 TiB

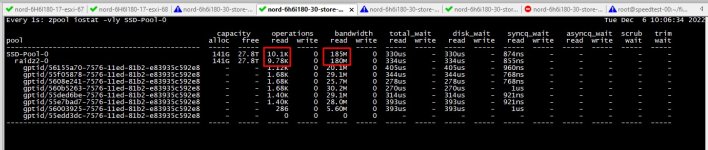

Disks:

pool 1: 2x7.68TB PCIe NVMe Intel, mirror

pool 2: 16x3.84TB SATA Intel, Toshiba, raid-z2

pool 3: 16x3.84TB SATA Intel, Toshiba, raid-z2

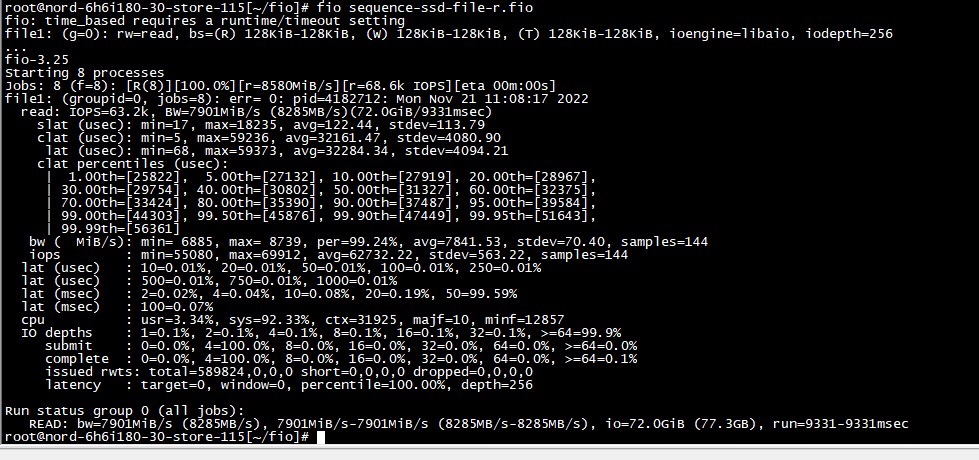

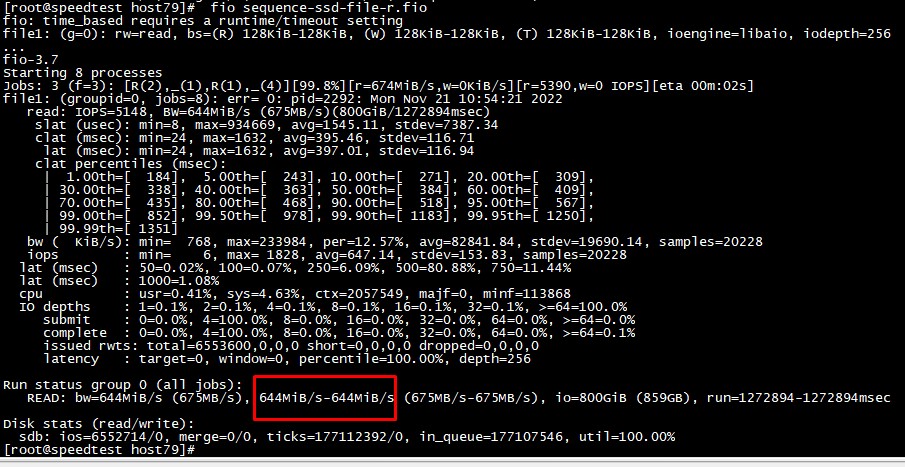

fio test results on the server itself:

Read:

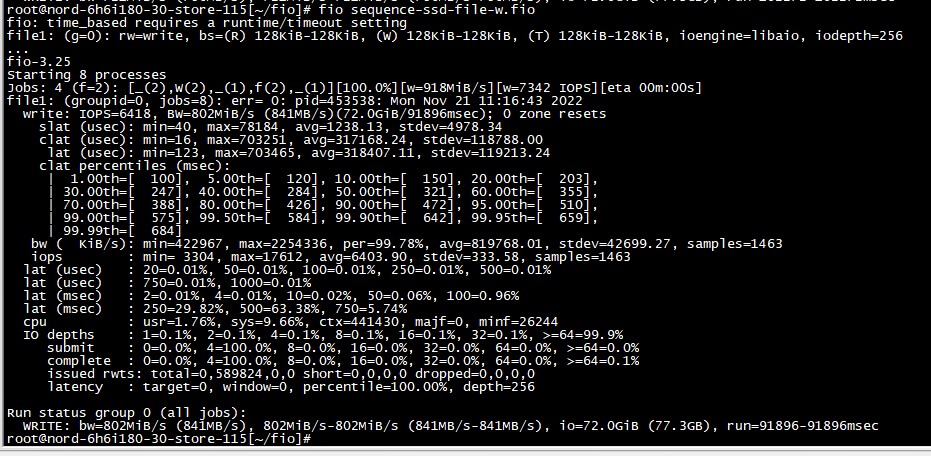

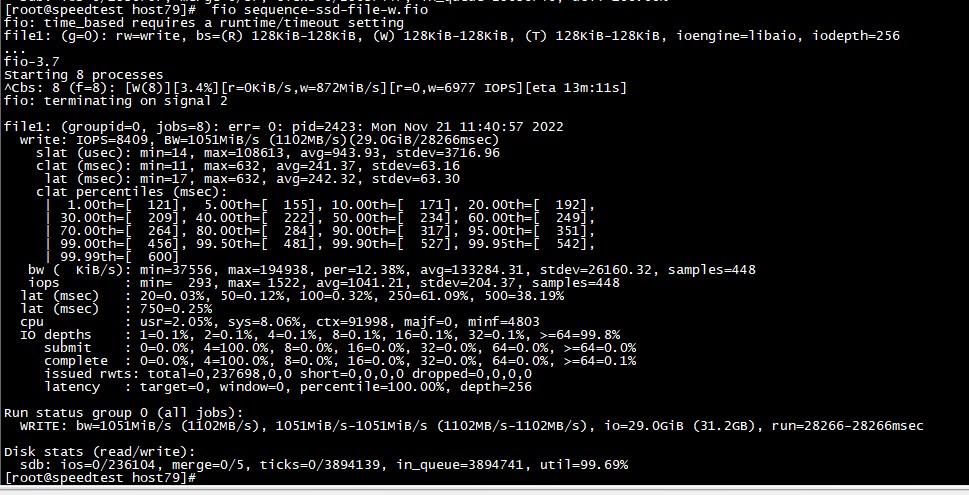

Write:

The write speed is low because the pool is 99% busy.

The write result can be ignored for now.

ESXi and TrueNAS Connection Diagram

TrueNAS (4x10Gb, LACP, 4 IP addresses) <=> Cisco Nexus 5672UP <=> ESXi 6.7 (2x10Gb, no LACP, 2 IP addresses)

LACP: IP+MAC

MTU: 9000

The switch does not register any packet loss.

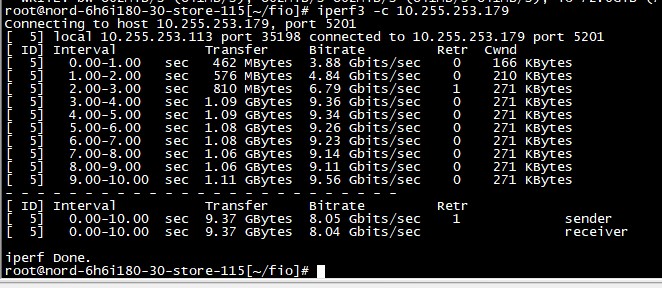

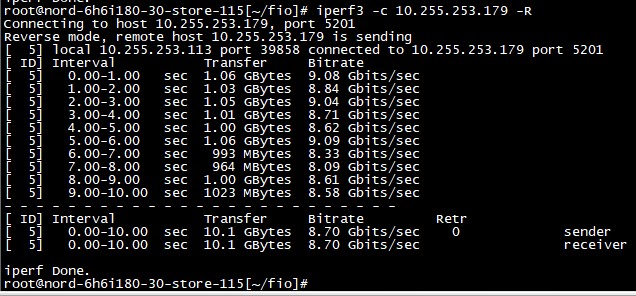

Link speed is 10Gbit, tested with iperf3:

TrueNAS => ESXi

ESXi => TrueNAS

Testing the disk speed from a Linux CentOS 7 virtual machine gives the following results:

Only this virtual machine runs on ESXi. No Storage policies are used.

iSCSI: No multipath

Read:

Write:

Here you can see that the read speed is almost 2 times lower than the write speed.

If I use multiple threads (4 IPs * 2 IPs = 8 threads), the read speed increases to 1600-1700Mb/s and the write speed to 2200Mb/s, while the read speed of each stream is no more than 200-220Mb/s.
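As a rough sanity check on these figures, here is a small Python sketch of the arithmetic. The variable and function names are only illustrative, and the unit is simply whatever "Mb/s" means in the numbers above. It assumes that throughput adds up linearly across streams, which is an assumption, not something I have measured separately.

```python
# Figures quoted above; names are illustrative, not taken from any tool.
TRUENAS_IPS = 4                      # IP addresses on the TrueNAS side
ESXI_IPS = 2                         # IP addresses on the ESXi side
PER_STREAM_READ = (200, 220)         # observed per-stream read range
OBSERVED_TOTAL_READ = (1600, 1700)   # observed aggregate read range


def aggregate(per_stream, streams):
    """Scale a (low, high) per-stream range by the number of streams,
    assuming throughput adds up linearly across streams."""
    return tuple(rate * streams for rate in per_stream)


# One stream per (TrueNAS IP, ESXi IP) pair.
streams = TRUENAS_IPS * ESXI_IPS
expected = aggregate(PER_STREAM_READ, streams)

print("streams:", streams)
print("expected aggregate read:", expected)
print("observed aggregate read:", OBSERVED_TOTAL_READ)
```

The expected range comes out close to the observed 1600-1700, so the total seems to scale with the number of streams. That suggests the limit is per stream rather than in the pool itself.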

The switch does not register any interface congestion.

I suspect that iSCSI-scst + ZFS needs to be tuned.

Please help me figure it out.
