My FreeNAS 11.3U5 system with a 5-drive RAIDZ2 has changed to a DEGRADED state, but no drives are marked as failed. However, one drive did fail sometime in the last few months (yes, I should pay more attention to it). It failed by powering itself off and refusing to power back on, so it is simply not there at all. I think it was the hot spare (yes, I have since read the posts suggesting a hot spare is not a good idea), not a data drive, but I am not sure. Here is the zpool status output:

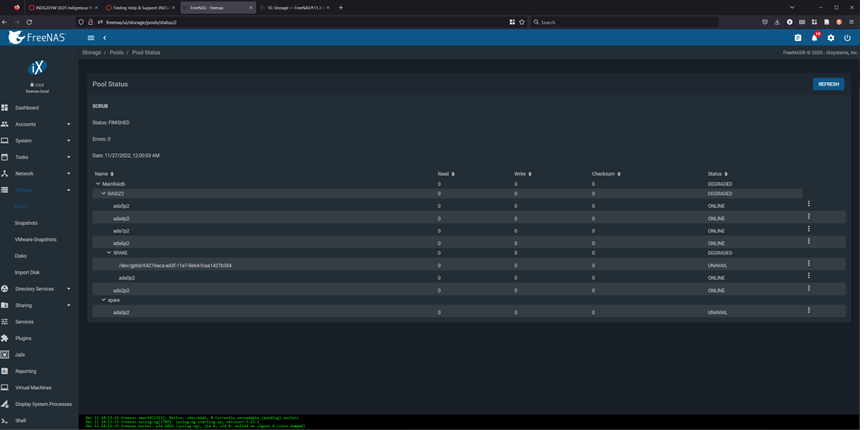


Code:

```
zpool status -x
  pool: MainRaid6
 state: DEGRADED
status: One or more devices could not be opened. Sufficient replicas exist for
        the pool to continue functioning in a degraded state.
action: Attach the missing device and online it using 'zpool online'.
   see: http://illumos.org/msg/ZFS-8000-2Q
  scan: scrub repaired 256K in 0 days 10:48:53 with 0 errors on Sun Nov 27 10:48:56 2022
config:

        NAME                                              STATE     READ WRITE CKSUM
        MainRaid6                                         DEGRADED     0     0     0
          raidz2-0                                        DEGRADED     0     0     0
            gptid/613abf2f-ad3f-11e7-8e64-fcaa1427b384    ONLINE       0     0     0
            gptid/61e338a2-ad3f-11e7-8e64-fcaa1427b384    ONLINE       0     0     0
            gptid/62a5a502-ad3f-11e7-8e64-fcaa1427b384    ONLINE       0     0     0
            gptid/63652114-ad3f-11e7-8e64-fcaa1427b384    ONLINE       0     0     0
            spare-4                                       DEGRADED     0     0     0
              3550455103967566402                         UNAVAIL      0     0     0  was /dev/gptid/64276aca-ad3f-11e7-8e64-fcaa1427b384
              gptid/70ea7543-eece-11ea-9987-fcaa1427b384  ONLINE       0     0     0
            gptid/64e4d84c-ad3f-11e7-8e64-fcaa1427b384    ONLINE       0     0     0
        spares
          16295930666946285623                            INUSE     was /dev/gptid/70ea7543-eece-11ea-9987-fcaa1427b384

errors: No known data errors
```

and the GUI shows:

I have since unplugged the failed drive and put in a new one, which I erased. I suspect all I need to do now is a replace, but the GUI listing ada0p2 twice confuses me, as does the "INUSE" entry in the zpool status output. The action suggested by zpool status is impossible, since the missing device is completely dead. Thank you very much for any assistance.
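For reference, a command-line version of the replace being considered might look roughly like the sketch below. It is only a hypothetical sketch: `POOL` and `OLD_GUID` are copied from the zpool status output above (the UNAVAIL device under spare-4), while `gptid/NEW-PARTITION-GPTID` is a placeholder for the new disk's data partition, and `DRY_RUN`, `run`, `replace_missing` and `check_pool` are illustrative names, not anything FreeNAS provides. By default it only prints the commands.

```shell
#!/bin/sh
# Hypothetical sketch of a CLI "replace" for the UNAVAIL member of MainRaid6.
# POOL and OLD_GUID come from the zpool status output in the post;
# the device name passed to replace_missing is a placeholder (assumption).
# DRY_RUN=1 (the default here) only prints the commands; DRY_RUN=0 runs them.

POOL="MainRaid6"
OLD_GUID="3550455103967566402"   # the UNAVAIL device listed under spare-4

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Replace the missing device (referenced by its GUID) with the new partition.
replace_missing() {
    new_dev="$1"
    run zpool replace "$POOL" "$OLD_GUID" "$new_dev"
}

# Show pool health, including resilver progress once a replace is running.
check_pool() {
    run zpool status -v "$POOL"
}

# Example (dry run): prints the commands without touching the pool.
replace_missing "gptid/NEW-PARTITION-GPTID"
check_pool
```

On FreeNAS the GUI Replace action on the UNAVAIL member is generally the safer route, because it partitions the new disk itself (swap plus a data partition) before calling zpool replace; a bare CLI replace skips that step. Once the resilver finishes, ZFS should detach the hot spare from spare-4 and list it as AVAIL again.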