Hi Everyone,

I am doing the typical noob NAS load balancing setup, since my NAS currently only has 1 Gbps NICs. I have gotten the load balancing to work, but there is one hassle: the traffic is balanced between the NICs, yet the iSCSI target only reaches a maximum of about 110 MB/s (roughly 1 Gbps), with both NICs pushing about 500 Mbps each. This makes no sense to me. I suspected a duplex mismatch at first, but if I disconnect one of the Ethernet cables while the environment is live, all the data is routed through the remaining NIC at full speed.

Has anyone else here experienced this, or is this typical behavior for a single host associated with an iSCSI LUN? I have been reading through the forums, and people mention either that they achieve the full load-balanced throughput or that they aggregate on a single interface and only increase total capacity. Or am I missing something stupid in my configuration? This is my first FreeNAS setup and my first use of FreeBSD, so my understanding of how everything works is zilch.
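As a rough sanity check of these numbers, here is a minimal Python sketch of the link math. The 90% efficiency factor is an assumption standing in for protocol overhead, not a measured value, and the function name is only illustrative.

```python
# Back-of-the-envelope link math for the figures quoted above.
# ASSUMED_EFFICIENCY is a guess at protocol overhead, not a measurement.
LINK_GBPS = 1.0
ASSUMED_EFFICIENCY = 0.9


def gbps_to_mbytes_per_s(gbps, efficiency=1.0):
    """Convert a line rate in Gbit/s to decimal megabytes per second."""
    return gbps * 1000 / 8 * efficiency


# Raw and assumed-usable throughput of one 1 Gbps link.
single_link_raw = gbps_to_mbytes_per_s(LINK_GBPS)
single_link_usable = gbps_to_mbytes_per_s(LINK_GBPS, ASSUMED_EFFICIENCY)

# What was observed: two NICs at about 500 Mbps each.
observed_per_nic_mbps = 500
observed_total_mbytes = 2 * observed_per_nic_mbps / 8

# What two fully used links would give under the same assumption.
expected_two_links = 2 * single_link_usable

print(f"one link, raw:        {single_link_raw:.1f} MB/s")
print(f"one link, usable:     {single_link_usable:.1f} MB/s")
print(f"observed, both NICs:  {observed_total_mbytes:.1f} MB/s")
print(f"two links, expected:  {expected_two_links:.1f} MB/s")
```

Under these assumptions, the observed total across both NICs is about what a single 1 Gbps link delivers, which matches the symptom described: the load is split, but the aggregate does not grow.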

Here are my Reports:

Now to explain my network configuration: there is no middleman L2 switch. The FreeNAS box is directly attached to my server via two patch cables.

MTU Sizes: 9000

NIC Negotiation hard-set: 1000/FULL

iSCSI IOPS Set to: 1 (For testing purposes)
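To illustrate what an IOPS setting of 1 means for path selection, here is a small simulation in Python. It assumes the setting refers to a round-robin multipath policy that switches paths after a fixed number of I/Os. The path names and the function are hypothetical, and this is a toy model, not ESXi's actual implementation.

```python
from collections import Counter
from itertools import cycle


def simulate_round_robin(paths, total_ios, iops_limit):
    """Assign I/Os to paths, moving to the next path every iops_limit I/Os."""
    counts = Counter({path: 0 for path in paths})
    path_iter = cycle(paths)
    current = next(path_iter)
    sent_on_current = 0
    for _ in range(total_ios):
        if sent_on_current == iops_limit:
            # Limit reached on this path: rotate to the next one.
            current = next(path_iter)
            sent_on_current = 0
        counts[current] += 1
        sent_on_current += 1
    return dict(counts)


# Hypothetical path names for the two direct links.
paths = ["path-A", "path-B"]
print(simulate_round_robin(paths, 10_000, iops_limit=1))
print(simulate_round_robin(paths, 10_000, iops_limit=1000))
```

In this model both settings end up with an even split of I/Os across the two paths. An even split per NIC only shows how the I/Os are distributed; it does not by itself show that the two links are being driven in parallel.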

FreeNAS:

NIC0: 172.16.10.3/29

NIC1: 172.16.10.11/29

ESXi Host:

NIC2: 172.16.10.2/29

NIC3: 172.16.10.10/29
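As a quick check of the addressing above, this sketch uses Python's standard `ipaddress` module to group the four interfaces by subnet. The dictionary labels are just the NIC names from this post.

```python
import ipaddress

# Interface addresses as listed above.
interfaces = {
    "FreeNAS NIC0": "172.16.10.3/29",
    "FreeNAS NIC1": "172.16.10.11/29",
    "ESXi NIC2": "172.16.10.2/29",
    "ESXi NIC3": "172.16.10.10/29",
}


def group_by_subnet(ifaces):
    """Map each network (as a string) to the interfaces that sit inside it."""
    groups = {}
    for name, cidr in ifaces.items():
        network = ipaddress.ip_interface(cidr).network
        groups.setdefault(str(network), []).append(name)
    return groups


for subnet, members in group_by_subnet(interfaces).items():
    print(subnet, members)
```

With a /29 mask, each direct link lands in its own subnet: NIC0 and NIC2 share 172.16.10.0/29, while NIC1 and NIC3 share 172.16.10.8/29.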

On the ESXi host, vSwitch1 handles both NICs. The default NIC teaming is overridden so that each active VMkernel port (VMK) is applied to a single interface, which is needed to meet ESXi's iSCSI compliance.

My netstat output shows the expected results on the FreeBSD side, and so does my ESXi host:

tcp4 0 0 172.16.10.11.3260 172.16.10.10.53636 ESTABLISHED

tcp4 0 0 172.16.10.3.3260 172.16.10.2.48036 ESTABLISHED
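For anyone wanting to check the same thing on their own box, here is a minimal Python sketch that pulls the established iSCSI sessions out of netstat output like the lines above. It assumes the six-column FreeBSD format shown here, where the port is appended to the address after a final dot. The function names are hypothetical.

```python
NETSTAT_OUTPUT = """\
tcp4 0 0 172.16.10.11.3260 172.16.10.10.53636 ESTABLISHED
tcp4 0 0 172.16.10.3.3260 172.16.10.2.48036 ESTABLISHED
"""


def split_endpoint(endpoint):
    """Split a FreeBSD-style 'a.b.c.d.port' endpoint into (address, port)."""
    address, _, port = endpoint.rpartition(".")
    return address, int(port)


def iscsi_sessions(netstat_text, iscsi_port=3260):
    """Return (target_address, initiator_address) for established sessions."""
    sessions = []
    for line in netstat_text.splitlines():
        fields = line.split()
        # Expect: proto, recv-q, send-q, local, foreign, state.
        if len(fields) != 6 or fields[5] != "ESTABLISHED":
            continue
        local_addr, local_port = split_endpoint(fields[3])
        remote_addr, _ = split_endpoint(fields[4])
        if local_port == iscsi_port:
            sessions.append((local_addr, remote_addr))
    return sessions


for target, initiator in iscsi_sessions(NETSTAT_OUTPUT):
    print(f"target {target} <- initiator {initiator}")
```

For the output above, this gives one session per link: 172.16.10.11 with 172.16.10.10, and 172.16.10.3 with 172.16.10.2.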

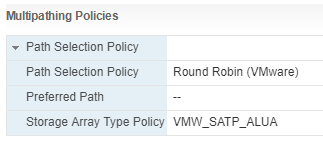

I am thinking it might have something to do with the Storage Array Type Policy for the P420i controller, as I know Dell uses a specific policy for its controllers to support MPIO.

System Breakdown:

FreeNAS Host:

Build: FreeNAS-11.0-U3 (c5dcf4416)

Platform: Intel(R) Pentium(R) CPU G3258 @ 3.20GHz

Motherboard: ASUS H97M-E

Memory: 16GB DDR3 1600MHz Patriot non-ECC (please mercy)

Boot Drive: 16GB Flash Drive

HDD 0: 4TB WD RED

HDD 1: 8TB Seagate

NIC 0: On-board Realtek 8111GR

NIC 1: PCI Expansion card with a Realtek 8168 Chipset

ESXi Host:

ESXi 6.5 Update 1 (Build 5969303)

Server: HP ProLiant DL360P

2x CPUs: Intel Xeon E5-2643

RAM: 64GB Samsung DDR3 1600MHz ECC RDIMMs

RAID Controller: Smart Array P420i

LUN0: 4x 900GB 10k Official HP SAS Drives

LUN1: 1x 250GB Samsung Evo 850 SSD

4x NICs: Broadcom chipsets using ntg3 drivers.
