Hi,

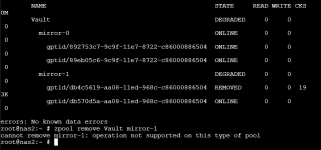

My NAS has a single pool, originally made up of one mirror set (2 drives). Several months ago I added a second mirror set (another 2 drives), and now one drive in the second set has failed. Is there a way to check whether there is data on the second mirror set, so I know if I can remove it? (Possibly I don't grasp how data is distributed across mirror sets in a single pool.)

My hopes on next steps:

- Possibly remove the (newer) second mirror set altogether, hence my question about checking whether there is data on it. (Financial limitations; I'll add another set later.)

- Purchase a new replacement drive. I assume it should be the same size? So if I wanted to upgrade, I would have to buy two hard drives?
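To check my own understanding of the sizing question, here is a rough Python sketch of the capacity arithmetic. It assumes the usual ZFS mirror behaviour as I understand it: each mirror's usable space is limited by its smallest disk, and the pool's usable space is the sum of its mirrors (ignoring metadata and reserved space). The class and function names are made up, and so are the disk sizes, which are not my actual drives.

```python
from dataclasses import dataclass


@dataclass
class Mirror:
    """One mirror set; disk sizes in TB (hypothetical example values)."""
    disks: list[float]

    def usable(self) -> float:
        # Every disk in a mirror holds a full copy of the data,
        # so the mirror can only hold as much as its smallest disk.
        return min(self.disks)


def pool_usable(mirrors: list[Mirror]) -> float:
    # Assumption: the pool spreads data across its mirror sets,
    # so their capacities simply add up (overhead ignored).
    return sum(m.usable() for m in mirrors)


# Two mirrors of 4 TB disks.
pool = [Mirror([4.0, 4.0]), Mirror([4.0, 4.0])]
print(pool_usable(pool))  # 8.0

# Replace the failed disk in the second mirror with an 8 TB one:
# no extra space, because the remaining 4 TB disk is the limit.
pool[1] = Mirror([4.0, 8.0])
print(pool_usable(pool))  # 8.0

# Only once both disks in that mirror are 8 TB does the pool grow.
pool[1] = Mirror([8.0, 8.0])
print(pool_usable(pool))  # 12.0
```

If this is right, a replacement only needs to be at least as large as the failed drive, and a bigger drive would only pay off once its partner in the same mirror is upgraded too (and, I believe, with the pool's `autoexpand` property enabled).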