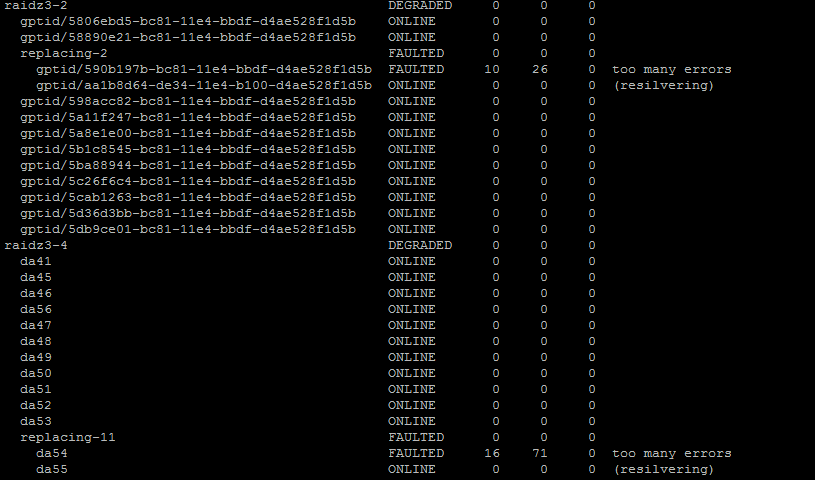

This might be a GEOM-related issue. I had a zpool with three 12-disk raidz3 vdevs (all 4TB disks), and I went to add a fourth 12-disk raidz3 vdev (this one with 6TB disks) from the command line: `zpool add storage01 da51 da52 da53` etc.

It added them without issue, although the new disks show up as plain `daXX` devices instead of `gptid` labels.

Anyway, I went to replace a failed 6TB disk from the GUI and it failed with this error:

`Apr 8 15:32:18 fs05 manage.py: [middleware.exceptions:38] [MiddlewareError: Disk replacement failed: "cannot replace da54 with gptid/1c18ceeb-de3f-11e4-b100-d4ae528f1d5b: device is too small, "]`

So I SSH'd in, ran `zpool replace /dev/da54 /dev/da55`, and off it went (see screenshot).
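
The "device is too small" message is ZFS refusing to replace a device with a smaller one. One plausible explanation, assumed here rather than confirmed: da54 went into the pool as a whole raw disk, while the GUI tried to use a `gptid` partition on the replacement disk, and that partition is smaller than the raw disk because FreeNAS reserves part of each disk for a swap partition by default. A whole-disk replace with da55 would not have that shortfall. The sketch below models only the simplified rule "new size >= old size"; the byte counts and the 2 GiB swap figure are hypothetical, and real ZFS applies its own alignment rounding before comparing.

```python
# Simplified model of the size check behind "device is too small".
# Assumption: a plain "new >= old" comparison; real ZFS rounds sizes
# to internal alignment boundaries before comparing.
GIB = 1024 ** 3


def can_replace(old_bytes: int, new_bytes: int) -> bool:
    """Return True if a device of new_bytes may replace one of old_bytes."""
    return new_bytes >= old_bytes


# Hypothetical sizes: a whole "6TB" disk vs. the data partition on an
# identical disk after a swap partition has been carved out of it.
whole_disk = 6_001_175_126_016   # assumed raw size of a 6TB drive
swap_reserved = 2 * GIB          # hypothetical swap partition size
partition = whole_disk - swap_reserved

print(can_replace(whole_disk, partition))   # partition is smaller -> False
print(can_replace(whole_disk, whole_disk))  # same-size whole disk -> True
```

Under these assumptions the partition comes up 2 GiB short of the raw disk it is replacing, which is the same kind of mismatch the GUI error reports.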
