ThEnGI

If you run the script using -dump email, it will email me a copy of your drive SMART data and I can look into it.

Done.

As for your original problem, have you looked into your SWAP file? Is any being used? If yes, then where is your SWAP file located?

When I run htop, no swap is used.
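For anyone who wants to double-check swap without htop, here is a minimal sketch that reads the kernel's own counters. It assumes a Linux system exposing /proc/meminfo with SwapTotal and SwapFree lines; the function name `swap_used_kib` is made up for this example.

```python
from pathlib import Path


def swap_used_kib(meminfo_text: str) -> int:
    """Return swap currently in use, in KiB, from the text of /proc/meminfo."""
    fields = {}
    for line in meminfo_text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            # The first token after the colon is the numeric value.
            fields[key.strip()] = int(parts[0])
    # Missing fields are treated as 0, i.e. no swap configured.
    return fields.get("SwapTotal", 0) - fields.get("SwapFree", 0)


if __name__ == "__main__":
    meminfo = Path("/proc/meminfo")
    if meminfo.exists():  # only present on Linux
        print(f"swap in use: {swap_used_kib(meminfo.read_text())} KiB")
```

A result of 0 KiB would match what htop shows here, which points away from swap as the source of the writes.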

And then 1GB/h of log

so let's write 1TB/h (perhaps a typo?)

Regarding the TB/h, I was replying to Whattteva, who simplified it by saying that it was enough to use an enterprise-grade SSD with a high TBW rating.

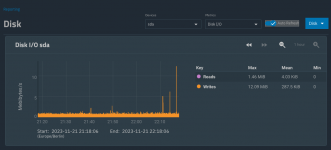

So, the problem is you have 1GB/h being written to the boot pool, is that correct? That's your sda graph, sda is your boot pool? And it's the wear level report. I got all that but started reading other posts and got mixed up. What drive is your application pool on?

sda is the 128GB boot SSD, with constant writing of 256 kB/s, or about 1GB/h. Apps are on "FAST" (2TB NVMe).
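To put the 256 kB/s figure in perspective, here is a rough back-of-the-envelope sketch. It assumes decimal units (1 kB = 1000 bytes) and a perfectly constant write rate. The 70 TB endurance figure is purely a hypothetical placeholder, not the rating of this drive, and write amplification inside the SSD is ignored.

```python
KB = 1000        # bytes per kB (decimal units assumed)
GB = 1000 ** 3   # bytes per GB
TB = 1000 ** 4   # bytes per TB


def rate_to_gb_per_hour(kb_per_s: float) -> float:
    """Convert a sustained write rate in kB/s to GB per hour."""
    return kb_per_s * KB * 3600 / GB


def days_to_write(tb: float, kb_per_s: float) -> float:
    """Days needed to write `tb` terabytes at a constant rate in kB/s."""
    bytes_per_day = kb_per_s * KB * 86400
    return tb * TB / bytes_per_day


if __name__ == "__main__":
    rate = 256.0  # kB/s, as seen on the sda graph
    print(f"{rate_to_gb_per_hour(rate):.2f} GB/h")
    print(f"{rate_to_gb_per_hour(rate) * 24 * 365 / 1000:.1f} TB/year")
    # Hypothetical endurance rating, for illustration only.
    print(f"{days_to_write(70, rate) / 365:.1f} years to write 70 TB")
```

So "about 1GB/h" is a fair rounding of 256 kB/s, and it adds up to several TB per year on a small boot SSD.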

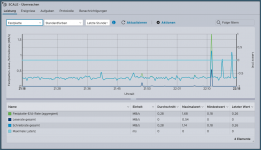

If you have 1GB/h being written, can you not determine which files, as a hint, with some monitoring? All logging goes to the /var/log directory, so I would think the file(s) are there. That might help the ticket. And that was before you had Kubernetes active, if I understand correctly from post 28. That's a lot of data and cannot possibly be expected.

The problem occurred with or without Kubernetes, if that was the question.
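On the suggestion of finding which files are being written: one simple approach is to snapshot file sizes under a directory, wait, and compare. The sketch below assumes the culprit is a growing file under /var/log. It will miss files that are rewritten in place or rotated during the interval, and anything written elsewhere on the boot pool. The function names are made up for this example.

```python
import os
import time


def snapshot_sizes(root):
    """Map every file path under `root` to its current size in bytes."""
    sizes = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            try:
                sizes[path] = os.lstat(path).st_size
            except OSError:
                continue  # file vanished or is unreadable; skip it
    return sizes


def growth(before, after):
    """Return (bytes_grown, path) pairs for files that grew, largest first."""
    deltas = ((after[p] - before.get(p, 0), p) for p in after)
    return sorted((d for d in deltas if d[0] > 0), reverse=True)


def watch(root, seconds):
    """Print the ten fastest-growing files under `root` over `seconds`."""
    before = snapshot_sizes(root)
    time.sleep(seconds)
    for grown, path in growth(before, snapshot_sizes(root))[:10]:
        print(f"{grown:>12} bytes  {path}")
```

Running `watch("/var/log", 60)` as root for a minute should show whether any log file accounts for roughly 15 MB of growth, which is what 256 kB/s would produce in that time.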

To answer the other question, which I don't see answered: there isn't really a need per se to mirror the boot pool. You can for uptime, which I do as I don't want downtime. As long as you download the config every so often, you just reinstall SCALE on a new boot drive, restore the config file, and you are back the same as before.