TrueNAS & ZFS do not support hardware RAID controllers:

1) An HBA is a Host Bus Adapter. This is a controller that allows SAS and SATA devices to be attached to, and communicate directly with, a server. RAID controllers typically aggregate several disks into a Virtual Disk abstraction of some sort...

www.truenas.com

Most of the time when such a controller is replaced with a plain HBA, the problems go away.

You are mistaken. My HBA card is in IT mode, so it is not a RAID card.

I have worked with HBA cards before and upgraded their firmware with sasXflash or ircu.

My firmware is old, I accept that, but I have used this version before without any problems.

As I said before, I don't have any problems in these scenarios:

1- I can create any pool without problems via the TrueNAS SCALE dashboard.

2- I wrote 10 TB with 10 parallel fio workers without problems; I did not get any task abort or timeout messages from mpt3sas.

3- When I reboot the server, the problem starts!

- After the Linux kernel boot sequence, the TrueNAS services start.

- First, TrueNAS tries to mount the md swap partition, and it succeeds. No problem so far.

- TrueNAS then imports the ZFS pool (I'm not yet sure whether this happens before or after the swap mount).

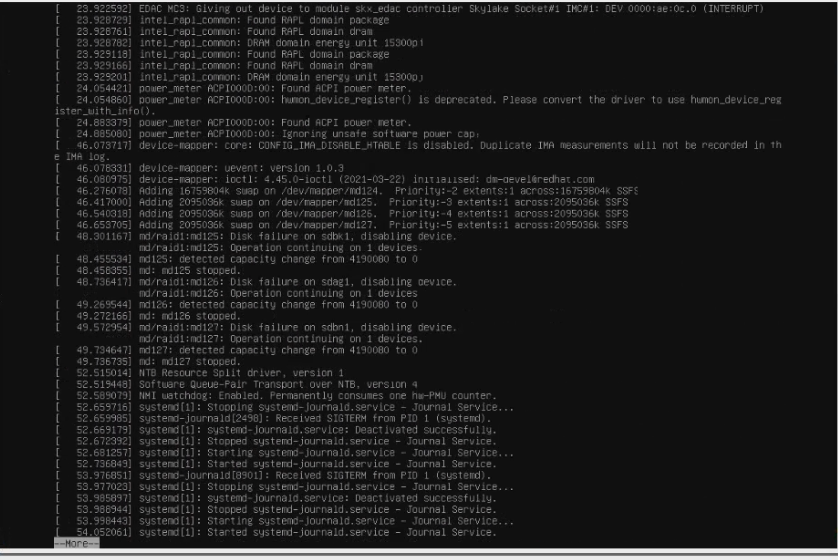

- A few seconds later, the md swap mount fails and TrueNAS (or mdadm) starts a check, so the drives are being used by mdadm. I think this is the cause of the timeouts, because this is exactly when I start to see mpt3sas task abort logs.

- After too many mpt3sas task aborts caused by the drive timeouts, the LSI firmware decides to reset the HBA card, and we lose all of the drives for 5-10 seconds.

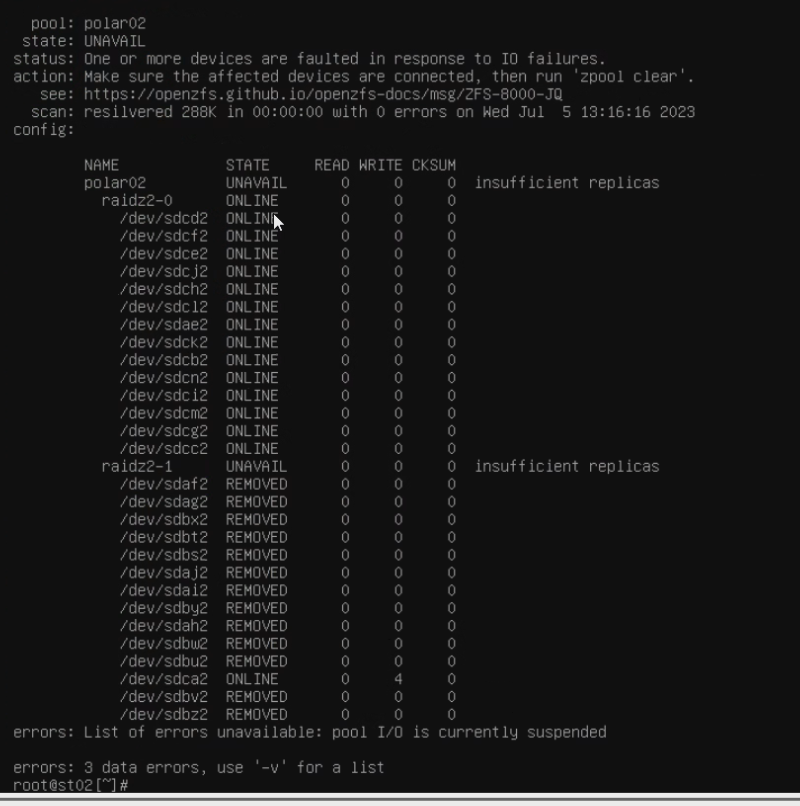

- ZFS suspends the pool as expected and does not resume automatically. Many devices become unavailable to the pool even though they still exist in the kernel.

- After the HBA reset, when I run zpool clear, I get all the drives back, but the task aborts start again and a few seconds later the HBA resets again. This turns into an endless loop: I cannot clear, destroy, or export the pool.

- A few minutes later, the Linux kernel seems to crash and new processes hang, but we can still use the shell via KVM. (I'm not sure it is actually a crash; I have to check the dmesg output one more time.)

At this point, I reset the server via IPMI and chose the second option in the TrueNAS boot menu (the initial install, if I remember correctly):

1- The kernel starts without any issues: no task aborts, no problems at all.

2- No mdadm service and no automatic md mount on the initial-install image.

3- No automatic pool import.

4- When I run zpool import $poolname, the import succeeds and I don't see any task aborts or timeouts.

5- The pool is healthy, the test data is 100% correct, and I can write data and saturate the IOPS without any issues.

So I'm 100% sure the root cause is something in the TrueNAS boot sequence.

I haven't checked the sequence yet because I was busy. Tomorrow I'm going to find it and cut its head off.

I really wanted to use TrueNAS CORE for better stability, but there was no driver for my network card.

So now I'm wondering which choice would be better for me in this case:

1- Fix the SCALE HBA reset issue.

2- Don't trust SCALE if it has these kinds of simple, illogical problems; use TrueNAS CORE instead and import the network driver.