mkarwin

Dear team and community,

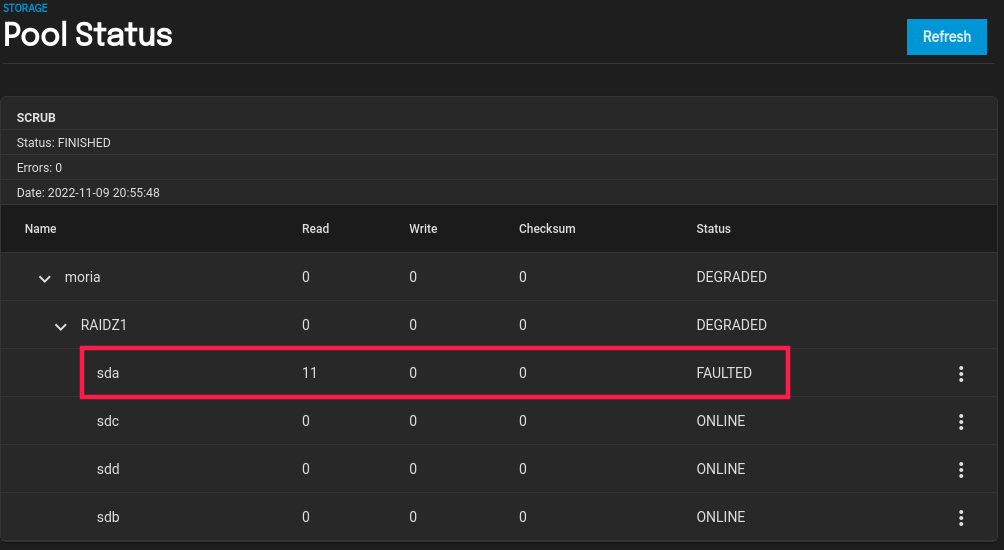

The dreaded moment in every data hoarder's life has finally arrived for me on TrueNAS 22.02.4. In my HPE Microserver G10+, one of the drives kept failing the read phase of SMART tests (failure at 90% remaining, 8 unreadable sectors). As a result, TrueNAS marked the device as FAULTED and the pool as DEGRADED:

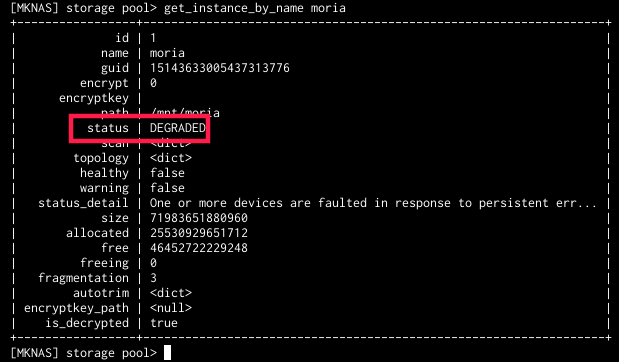

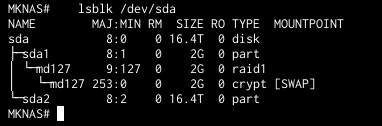

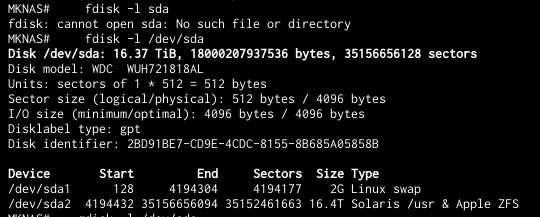

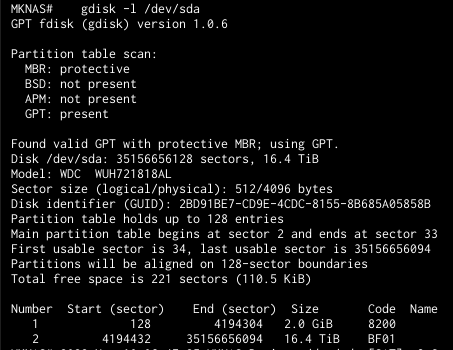

Same is seen in CLI:

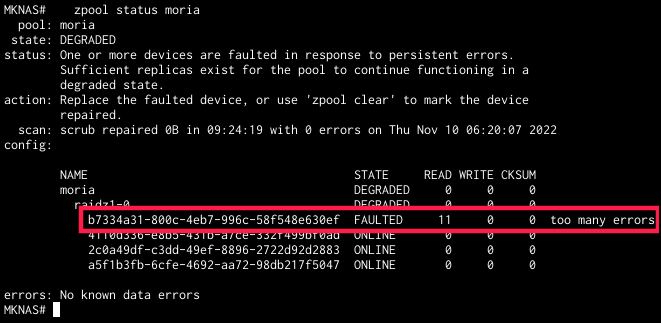

and directly in zpool query:

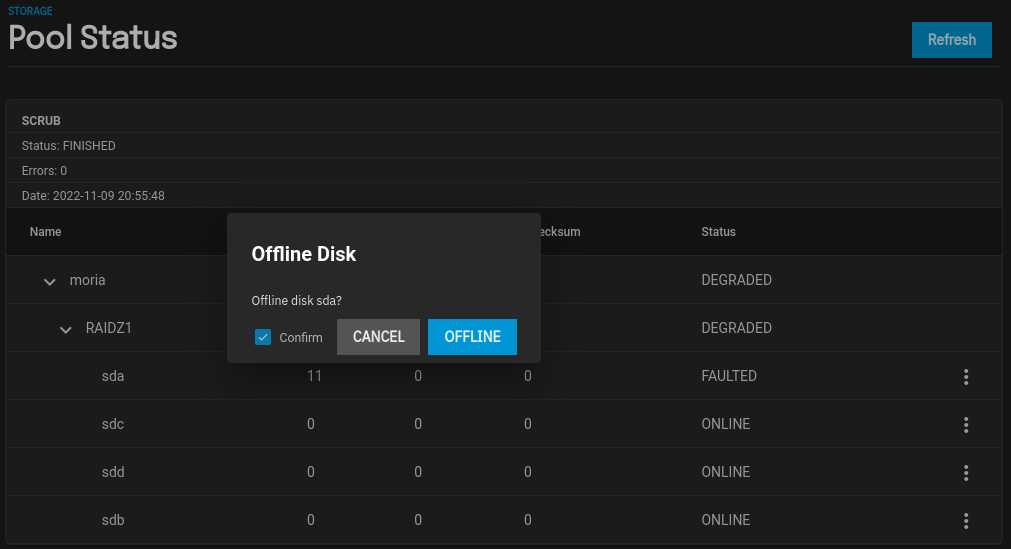
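For anyone who wants to check the same thing from a shell rather than from screenshots, here is a minimal Python sketch that lists every entry in the `zpool status` config table whose state is not ONLINE. The sample text and the `disk-a` to `disk-d` names are made up for illustration and are not my actual output. The `live_status` helper assumes the `zpool` binary is on PATH and that the script runs with sufficient privileges.

```python
import subprocess


def non_online_devices(status_text):
    """Return (name, state) pairs for config entries whose state is not ONLINE."""
    results = []
    in_config = False
    for line in status_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("config:"):
            in_config = True
            continue
        if stripped.startswith("errors:"):
            in_config = False
        if not in_config or not stripped:
            continue
        fields = stripped.split()
        # Skip the column header row and single-word section labels.
        if fields[0] == "NAME" or len(fields) < 2:
            continue
        name, state = fields[0], fields[1]
        if state != "ONLINE":
            results.append((name, state))
    return results


def live_status(pool):
    # Assumption: zpool is installed and callable by the current user.
    completed = subprocess.run(
        ["zpool", "status", pool],
        capture_output=True, text=True, check=True,
    )
    return completed.stdout


# Illustrative sample only; device names are hypothetical.
SAMPLE = """\
  pool: moria
 state: DEGRADED
config:

\tNAME        STATE     READ WRITE CKSUM
\tmoria       DEGRADED     0     0     0
\t  raidz1-0  DEGRADED     0     0     0
\t    disk-a  FAULTED      8     0     0  too many errors
\t    disk-b  ONLINE       0     0     0
\t    disk-c  ONLINE       0     0     0
\t    disk-d  ONLINE       0     0     0

errors: No known data errors
"""

if __name__ == "__main__":
    print(non_online_devices(SAMPLE))
```

On a live system, `non_online_devices(live_status("moria"))` would be the equivalent call, assuming the pool is named moria as in my case.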

However, since I already have a replacement drive, I followed the Docs Hub instructions for drive replacement (https://www.truenas.com/docs/scale/scaletutorials/storage/disks/replacingdisks/) and took the usual/expected route: the drive's vertical ellipsis menu -> Offline:

So far so good, but this does not seem to do anything. A pop-up with a spinner and "Please wait..." shows for a moment (~2 secs), then disappears without any message in the web UI and without the drive actually being set to OFFLINE. The Docs Hub entry suggests running a scrub in case offlining fails with an error (I haven't seen one yet), so I did, as shown in the web UI pool pane, but the offline action still has no effect.

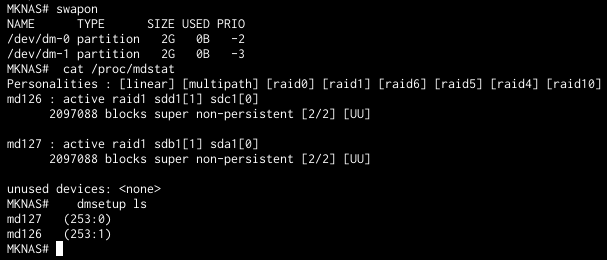

What's more, during OS installation TrueNAS automatically created a 2GB SWAP partition on this drive (actually on every drive in the storage pool used for system_dataset; I think this might have something to do with the "system" option during installation, where one could pick either boot-pool or one of the storage pools):

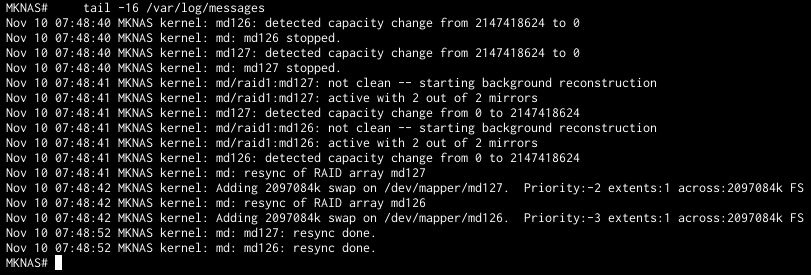

I don't think this could be impacting the disk offlining in the moria pool directly, since that is the sda[1] partition, whereas sda[0] is used for the SWAP set up by TrueNAS, but it might still be problematic for the offlining process as a whole. Or at least it seems so, because when the offline command is issued, the logs show that MDADM, which manages the SWAP created by TrueNAS at installation, seems to block/revert the action by reinstating the SWAP MD devices:

Please bear in mind that at installation SWAP was supposed to be placed on the boot-pool flash device, and TrueNAS even created a 16GB partition there, so I'm not sure why the SWAP devices on the storage drives are used... Again, I can only guess it's related to that system_dataset config. Still, they're there, and I'm not swapping in or out most of the time, so most of the time no harm is done. Until now, it seems...
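To make the SWAP/MD relationship easier to inspect, here is a minimal Python sketch that parses `/proc/mdstat` and reports which MD arrays contain partitions of a given disk. The sample text, the array names and the member partitions are illustrative assumptions, not copied from my system, and `arrays_using_disk` is just a name I picked for the helper.

```python
import re


def arrays_using_disk(mdstat_text, disk):
    """Map md array name -> member partitions that live on `disk`."""
    found = {}
    for line in mdstat_text.splitlines():
        # Array lines look like: "md127 : active raid1 sdb1[1] sda1[0]"
        match = re.match(r"^(md\S+)\s*:\s*(.*)$", line)
        if not match:
            continue
        array, rest = match.groups()
        # Members are written as <partition>[<role number>].
        members = re.findall(r"(\S+?)\[\d+\]", rest)
        pattern = re.escape(disk) + r"p?\d+"
        on_disk = [m for m in members if re.fullmatch(pattern, m)]
        if on_disk:
            found[array] = on_disk
    return found


# Illustrative sample only; array and partition names are hypothetical.
SAMPLE = """\
Personalities : [raid1]
md127 : active raid1 sdb1[1] sda1[0]
      2095040 blocks super 1.2 [2/2] [UU]

md126 : active raid1 sdd1[1] sdc1[0]
      2095040 blocks super 1.2 [2/2] [UU]

unused devices: <none>
"""

if __name__ == "__main__":
    print(arrays_using_disk(SAMPLE, "sda"))
    # On a live Linux system the real file could be read instead:
    # with open("/proc/mdstat") as handle:
    #     print(arrays_using_disk(handle.read(), "sda"))
```

This only reads state and changes nothing. It is meant for confirming which MD device would be affected by pulling sda, not as a fix.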

Regardless, the Docs Hub approach fails for me, as I currently cannot offline the FAULTED drive using the UI.

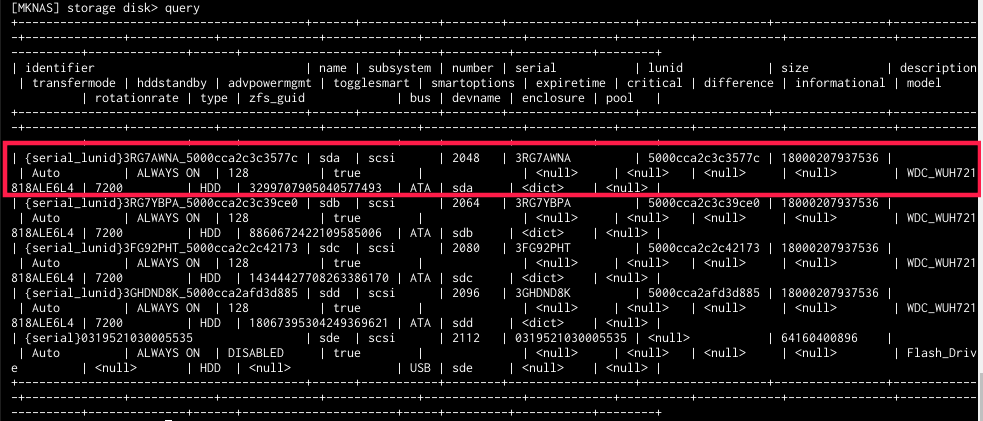

So how do I proceed? How do I replace the "faulted" disk (both in the pool and in SWAP), meaning the whole sda (3RG7AWNA), with a new one?

Am I supposed to power down the server, swap out the old "faulted" drive, swap in the new one, and expect TrueNAS to pick up the new drive, apply its partitioning scheme, fix the memberships and resilver the pool automatically? My guess is not, so how should I proceed?

Please bear in mind this is a 4-slot device and all 4 slots are currently occupied by members of the Z1 storage pool (and the aforementioned SWAP), so I cannot add the new disk alongside the others and do the replacement through the UI (and even if I could, I would still be expected to offline the faulty drive first, which currently does not seem to work).

As such, how do I replace the drive?

Is there a UI option (web UI or TrueNAS CLI) I'm missing that needs to be set/reset? Or are there shell commands I need to run to get it working?

I really need your help with this.
