RchGrav

Dabbler

- Joined: Feb 21, 2014
- Messages: 36

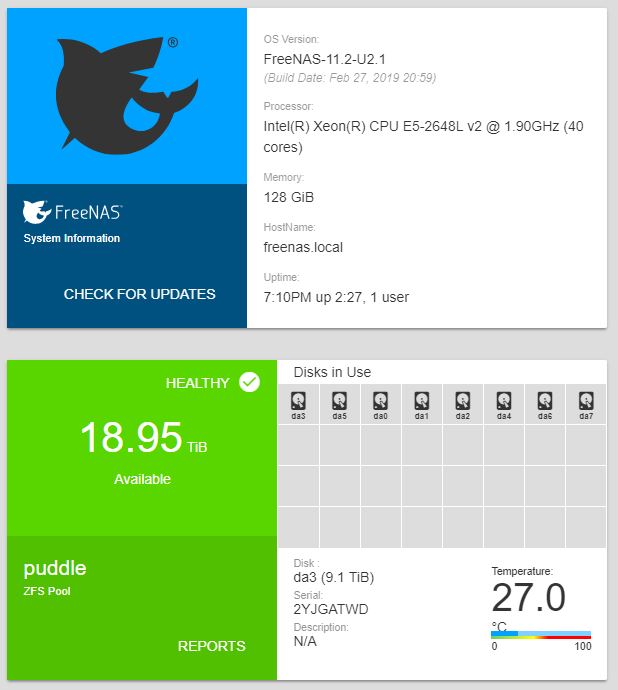

Version: FreeNAS-11.2-U2.1

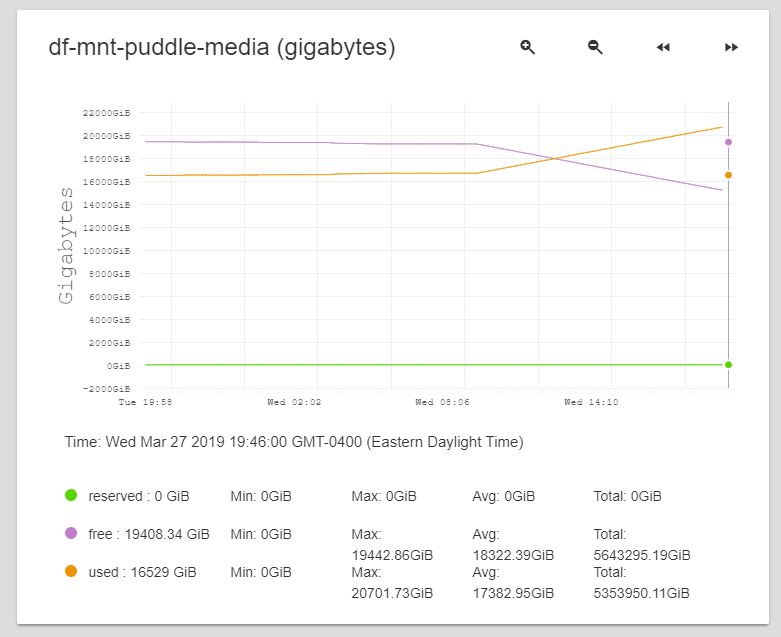

I'll let the pictures speak for themselves, but ZFS shows 4TB being eaten away in a very short amount of time. Over this period I was generating Plex thumbnails for existing movies. However, the dataset holding these thumbnails does not show any anomalies. Well, aside from the fact that when I get the properties in Windows there is a HUGE disparity between Size and Size on disk, but this is logical to me since Size matches the ZFS statistics. I ran a few commands at the console to rule out a GUI issue. The reports in the legacy and new GUI show the same progressive loss of space over the past 12 hours.

Aside from the thumbnail generation, there is also an rsync task configured to replicate approx. 7TB of data, but it has completed. I haven't shut it off yet, but it has been done for a day or so. There was some file renaming done on the receiving side for organization purposes in my Plex server.

Following a reboot, the available space does seem to correct itself. So my question is this: what exactly about ZFS causes this MASSIVE drift in the reported available space? Are there some kind of temp files for rsync, or some hidden metadata gradually taking 4TB of temporary space, or what? Is there some command capable of uncovering where this space is going, or what is consuming it? Can this space be reclaimed via a command, without needing to reboot the system? Would this space return on its own if ZFS was just left to its own devices? There MUST be some command or something to uncover what is going on here.

I've searched the posts here and see other people posting similar threads about a space disparity being resolved by a reboot, but there isn't really a clear answer. I'm interested in getting to the bottom of this. 4TB of disparity is big. There has to be some command capable of showing what is temporarily using this space, and of reclaiming it without rebooting.

I was about to disable the thumbnail process and the rsync task to stop this from happening, but that MAY not be the best approach here. I'll leave everything configured as it is for the moment so I can test any suggestions if anyone has any.

#1 Command or method to show what is temporarily using the space and how much?

#2 Command or method to reclaim free space without rebooting.

At the rate my free space was being consumed, I would have been out of free space in a very short time.

I haven't set up snapshots yet. My space went from 19.5TB to 15.5TB overnight without adding any data.

Space being eaten overnight then returning after a reboot....
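To put a rough number on "a very short time", here is a minimal Python sketch of the extrapolation. It uses the 19.5TB and 15.5TB figures from this post and assumes the loss happened over the 12 hours mentioned earlier and would continue at a constant rate, which may not hold. The function name `hours_until_full` is made up for illustration.

```python
# Figures from the post; the 12-hour window and a constant rate are assumptions.
start_free_tb = 19.5
end_free_tb = 15.5
window_hours = 12.0


def hours_until_full(free_tb, consumed_tb, hours):
    """Linear extrapolation: hours left if consumption continues at the same rate."""
    rate_tb_per_hour = consumed_tb / hours
    return free_tb / rate_tb_per_hour


consumed_tb = start_free_tb - end_free_tb
remaining = hours_until_full(end_free_tb, consumed_tb, window_hours)
print(f"{consumed_tb:.1f} TB gone in {window_hours:.0f} h; "
      f"about {remaining:.1f} h until the pool is full")
```

Under those assumptions the pool would fill in roughly two days.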

Code:

    NAME                                                     AVAIL  USED   USEDSNAP  USEDDS  USEDREFRESERV  USEDCHILD
    puddle                                                   14.9T  20.2T  0         88K     0              20.2T
    puddle/.system                                           14.9T  46.7M  0         96K     0              46.6M
    puddle/.system/configs-02e83c25ec534b63a4d545000fc9a19d  14.9T  3.40M  0         3.40M   0              0
    puddle/.system/cores                                     14.9T  5.26M  0         5.26M   0              0
    puddle/.system/rrd-02e83c25ec534b63a4d545000fc9a19d      14.9T  36.9M  0         36.9M   0              0
    puddle/.system/samba4                                    14.9T  276K   0         276K    0              0
    puddle/.system/syslog-02e83c25ec534b63a4d545000fc9a19d   14.9T  724K   0         724K    0              0
    puddle/.system/webui                                     14.9T  88K    0         88K     0              0
    puddle/apps                                              14.9T  20.0G  0         20.0G   0              0
    puddle/home                                              14.9T  88K    0         88K     0              0
    puddle/iocage                                            14.9T  2.81G  0         3.70M   0              2.81G
    puddle/iocage/download                                   14.9T  272M   0         88K     0              272M
    puddle/iocage/download/11.2-RELEASE                      14.9T  272M   0         272M    0              0
    puddle/iocage/images                                     14.9T  88K    0         88K     0              0
    puddle/iocage/jails                                      14.9T  1.54G  0         96K     0              1.54G
    puddle/iocage/jails/plex                                 14.9T  296M   0         96K     0              295M
    puddle/iocage/jails/plex/root                            14.9T  295M   0         295M    0              0
    puddle/iocage/jails/radarr                               14.9T  484M   0         96K     0              484M
    puddle/iocage/jails/radarr/root                          14.9T  484M   0         484M    0              0
    puddle/iocage/jails/sabnzbd                              14.9T  245M   0         96K     0              245M
    puddle/iocage/jails/sabnzbd/root                         14.9T  245M   0         245M    0              0
    puddle/iocage/jails/sonarr                               14.9T  458M   0         96K     0              458M
    puddle/iocage/jails/sonarr/root                          14.9T  458M   0         458M    0              0
    puddle/iocage/jails/syncovery                            14.9T  96.4M  0         92K     0              96.3M
    puddle/iocage/jails/syncovery/root                       14.9T  96.3M  0         96.3M   0              0
    puddle/iocage/log                                        14.9T  116K   0         116K    0              0
    puddle/iocage/releases                                   14.9T  1.00G  0         88K     0              1.00G
    puddle/iocage/releases/11.2-RELEASE                      14.9T  1.00G  0         88K     0              1.00G
    puddle/iocage/releases/11.2-RELEASE/root                 14.9T  1.00G  1.08M     1024M   0              0
    puddle/iocage/templates                                  14.9T  88K    0         88K     0              0
    puddle/media                                             14.9T  20.2T  0         20.2T   0              0
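
As a cross-check on the listing above, here is a minimal Python sketch (not part of my console session) that parses a few of those rows and reports which component makes up most of USED. It relies on ZFS accounting USED as the sum of USEDSNAP, USEDDS, USEDREFRESERV and USEDCHILD. The helper names `to_bytes` and `breakdown` are made up for illustration, and the inputs are the rounded values zfs printed, so the shares are only approximate.

```python
# Binary multipliers for the suffixes zfs prints in human-readable mode.
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def to_bytes(text):
    """Convert a zfs-style size such as '20.2T', '88K' or '0' to bytes."""
    if text[-1] in UNITS:
        return float(text[:-1]) * UNITS[text[-1]]
    return float(text)


def breakdown(row):
    """Split one row of the space listing into a name and its byte counts."""
    name, _avail, used, snap, ds, refreserv, child = row.split()
    return name, {
        "used": to_bytes(used),
        "snapshots": to_bytes(snap),
        "dataset": to_bytes(ds),
        "refreservation": to_bytes(refreserv),
        "children": to_bytes(child),
    }


# A few rows copied from the listing above.
rows = [
    "puddle 14.9T 20.2T 0 88K 0 20.2T",
    "puddle/iocage 14.9T 2.81G 0 3.70M 0 2.81G",
    "puddle/media 14.9T 20.2T 0 20.2T 0 0",
]

components = ("snapshots", "dataset", "refreservation", "children")
for row in rows:
    name, parts = breakdown(row)
    largest = max(components, key=parts.get)
    share = parts[largest] / parts["used"]
    print(f"{name}: {share:.0%} of USED is in '{largest}'")
```

For these rows, everything at the pool level is in children, and `puddle/media` holds its 20.2T directly as dataset data, with nothing attributed to snapshots or reservations.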

No Snapshots to speak of

Code:

    NAME                                                USED   AVAIL  REFER  MOUNTPOINT
    freenas-boot/ROOT/11.2-U2.1@2005-01-01-01:49:47     2.19M  -      755M   -
    freenas-boot/ROOT/11.2-U2.1@2019-03-25-22:36:53     2.29M  -      755M   -
    puddle/iocage/releases/11.2-RELEASE/root@plex       240K   -      1024M  -
    puddle/iocage/releases/11.2-RELEASE/root@sonarr     240K   -      1024M  -
    puddle/iocage/releases/11.2-RELEASE/root@radarr     240K   -      1024M  -
    puddle/iocage/releases/11.2-RELEASE/root@syncovery  240K   -      1024M  -
    puddle/iocage/releases/11.2-RELEASE/root@sabnzbd    144K   -      1024M  -
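
To back up "no snapshots to speak of", here is a small Python sketch that adds up the USED column for the snapshots on `puddle` from the listing above. The helper `to_bytes` is a made-up name for illustration. In general, a snapshot's USED counts only the blocks unique to that snapshot, so a sum like this can understate the space held by snapshots.

```python
# Binary multipliers for the suffixes zfs prints in human-readable mode.
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def to_bytes(text):
    """Convert a zfs-style size such as '240K' or '2.19M' to bytes."""
    if text[-1] in UNITS:
        return float(text[:-1]) * UNITS[text[-1]]
    return float(text)


# Snapshot name -> USED, copied from the listing above.
snapshots = {
    "freenas-boot/ROOT/11.2-U2.1@2005-01-01-01:49:47": "2.19M",
    "freenas-boot/ROOT/11.2-U2.1@2019-03-25-22:36:53": "2.29M",
    "puddle/iocage/releases/11.2-RELEASE/root@plex": "240K",
    "puddle/iocage/releases/11.2-RELEASE/root@sonarr": "240K",
    "puddle/iocage/releases/11.2-RELEASE/root@radarr": "240K",
    "puddle/iocage/releases/11.2-RELEASE/root@syncovery": "240K",
    "puddle/iocage/releases/11.2-RELEASE/root@sabnzbd": "144K",
}

# Only the data pool matters here; freenas-boot is a separate pool.
pool_total = sum(
    to_bytes(used) for name, used in snapshots.items() if name.startswith("puddle/")
)
print(f"snapshots on puddle: {pool_total / 1024**2:.2f}M")
```

The total comes to about 1.08M, which is in line with the USEDSNAP value shown for `puddle/iocage/releases/11.2-RELEASE/root` in the first listing and nowhere near 4TB.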
