I have an encrypted pool to which I attached a SLOG. I have since physically removed the SLOG (it is no longer in my hands), and zpool remove doesn't work. FreeNAS won't auto-import the pool because of the missing device. Details below.

I tried a zpool replace, but I get an error message about the replacement device being too small. The removed SLOG was 800 GB (I didn't partition off a smaller chunk because I was in a hurry and figured it didn't matter for a test), and the replacement SLOG I have available is 280 GB. All 12 x 3.5" bays are full, but I suppose I could temporarily attach a 3.5" drive via SATA and pass it through via RDM, so that I can replace with a large enough device. That is, if I'm interpreting the "too small" message correctly.

The hardware listed in my signature is correct (apart from an unused Optane 900p, which I want to pass through to FreeNAS for use as a SLOG).

Please help. I don't want to have to blow up my pool, copy 30 TB, blah blah (end game = replicate to server B daily, but I'm still working on getting server A provisioned / stable).

An attempt to unlock the pool via the GUI results in this:

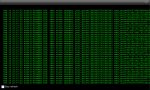

Code:

    node-A-FreeNAS# zpool status
      pool: freenas-boot
     state: ONLINE
      scan: scrub repaired 0 in 0 days 00:00:01 with 0 errors on Tue Sep 18 03:45:01 2018
    config:

            NAME          STATE     READ WRITE CKSUM
            freenas-boot  ONLINE       0     0     0
              da0p2       ONLINE       0     0     0

    errors: No known data errors

OK, so no pool imported ... Let's try ...

Code:

node-A-FreeNAS# zpool import -m Tank1

Code:

    node-A-FreeNAS# zpool status
      pool: Tank1
     state: DEGRADED
    status: One or more devices could not be opened. Sufficient replicas exist for
            the pool to continue functioning in a degraded state.
    action: Attach the missing device and online it using 'zpool online'.
       see: http://illumos.org/msg/ZFS-8000-2Q
      scan: resilvered 96K in 0 days 00:00:01 with 0 errors on Tue Sep 18 15:12:36 2018
    config:

            NAME                                                STATE     READ WRITE CKSUM
            Tank1                                               DEGRADED     0     0     0
              raidz2-0                                          ONLINE       0     0     0
                gptid/b2a4631f-9431-11e8-974d-000c296707ef.eli  ONLINE       0     0     0
                gptid/b32f3d84-9431-11e8-974d-000c296707ef.eli  ONLINE       0     0     0
                gptid/b3ac7c81-9431-11e8-974d-000c296707ef.eli  ONLINE       0     0     0
                gptid/b42f2d96-9431-11e8-974d-000c296707ef.eli  ONLINE       0     0     0
                gptid/b4afbc60-9431-11e8-974d-000c296707ef.eli  ONLINE       0     0     0
                gptid/b52dfe57-9431-11e8-974d-000c296707ef.eli  ONLINE       0     0     0
              raidz2-1                                          ONLINE       0     0     0
                gptid/b63c8107-9431-11e8-974d-000c296707ef.eli  ONLINE       0     0     0
                gptid/b6cf4e29-9431-11e8-974d-000c296707ef.eli  ONLINE       0     0     0
                gptid/b760d773-9431-11e8-974d-000c296707ef.eli  ONLINE       0     0     0
                gptid/b7f232c5-9431-11e8-974d-000c296707ef.eli  ONLINE       0     0     0
                gptid/b8782967-9431-11e8-974d-000c296707ef.eli  ONLINE       0     0     0
                gptid/caef1f42-a56d-11e8-a14d-000c29cd5f5a.eli  ONLINE       0     0     0
            logs
              2240279982886824239                               UNAVAIL      0     0     0  was /dev/gptid/92a829ca-bab2-11e8-8ab9-000c29d08afe.eli

    errors: No known data errors

      pool: freenas-boot
     state: ONLINE
      scan: scrub repaired 0 in 0 days 00:00:01 with 0 errors on Tue Sep 18 03:45:01 2018
    config:

            NAME          STATE     READ WRITE CKSUM
            freenas-boot  ONLINE       0     0     0
              da0p2       ONLINE       0     0     0

    errors: No known data errors

OK, so that worked, but I can't see my pool in the GUI, and it is mounted at /Tank1 rather than under /mnt ...
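As far as I understand, FreeNAS imports pools with an altroot of /mnt, which a plain zpool import from the shell does not set. Below is a minimal sketch of exporting and re-importing with that altroot. The `run` helper, the `DRY_RUN` variable and the `reimport_under_mnt` function are names made up for illustration, and by default the script only prints the commands instead of running them. Whether the GUI then picks the pool up is an assumption I can't confirm.

```shell
#!/bin/sh
# Hypothetical helper: with DRY_RUN=1 (the default) only print the command,
# with DRY_RUN=0 actually execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Export the pool, then import it again with:
#   -m       allow import although a log device is missing
#   -R /mnt  altroot, so datasets mount under /mnt (assumed FreeNAS layout)
reimport_under_mnt() {
    pool="$1"
    run zpool export "$pool" &&
    run zpool import -m -R /mnt "$pool"
}

reimport_under_mnt Tank1
```

With the default dry run this just prints the two zpool commands, so they can be checked before setting DRY_RUN=0.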

Attempts to zpool remove Tank1 2240279982886824239 fail.
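The long number is the GUID that zpool status prints in place of the missing log device. Here is a small sketch for pulling it out of the status output rather than copying it by hand. `unavail_log_vdevs` is a made-up helper name, and it assumes the layout shown in the status output above (a "logs" line followed by indented device lines).

```shell
#!/bin/sh
# Hypothetical helper: read `zpool status` output on stdin and print the
# identifier of every UNAVAIL device listed in the "logs" section.
unavail_log_vdevs() {
    awk '
        $1 == "logs"                               { in_logs = 1; next }  # log section starts
        in_logs && NF == 0                         { in_logs = 0 }        # blank line ends the config table
        in_logs && $1 ~ /^(cache|spares|errors:)$/ { in_logs = 0 }        # another section starts
        in_logs && $2 == "UNAVAIL"                 { print $1 }           # missing log device
    '
}

# Usage on a live system (not executed here):
#   zpool status Tank1 | unavail_log_vdevs
```

The printed value is what gets passed as the device argument to zpool remove.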

I've already run a scrub.