phatfish

Dear all,

I have had a setup running for a few years now: a mirror pool hosting VHDs for a number of VMs, and a raidz pool for a file share that holds mainly static, read-only files.

FreeNAS is on 11.2-U7. The hardware is an Intel Supermicro X10SDV-4C board with 32GB RAM and WD Red 3TB HDDs, all connected to the onboard SATA ports. There is also an SLOG device for the mirror pool, which is an Intel SSD connected to an HBA card.

The mirror pool (2x 3TB) performs fine: it maxes out the 1Gbit network I have for both reads and writes, and the VMs all run normally.

The raidz pool (4x 3TB) is fine as well, until you write a larger file, e.g. to a Samba share. After around 4-5GB the transfer pauses for 10 to 30 seconds, then starts again, and depending on the size of the file it can pause and resume several more times until it finishes. Between pauses the transfer maxes out my 1Gbit network at 90-110MB/s.

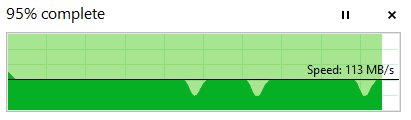

The dips here are where the transfer speed goes to 0.

The issue also occurs for local file copies. If I copy a ~8GB file from the mirror pool to the raidz pool, I can see it copy at 150-200MB/s for the first ~4-5GB, then it pauses completely for 10-30 seconds, then writes again. This is the same behaviour as writing over SMB from a Windows box. I used rsync locally with --progress to see what was happening.
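For anyone who wants to reproduce the local test without rsync, here is a rough sketch of how the pauses could be measured directly. It writes random data in chunks, times each write call, and lists the chunks that took longer than a threshold. The function names, the 1MiB chunk size and the 1 second threshold are my own arbitrary choices, not anything from FreeNAS, and generating random data per chunk limits the achievable write speed, so treat it as a stall detector rather than a benchmark.

```python
import os
import time


def timed_write(path, total_bytes, chunk_bytes=1 << 20):
    """Write total_bytes of random data to path; return seconds spent per write call.

    Fresh random data is generated for every chunk (outside the timed section)
    so that compression or dedupe on the dataset cannot shortcut the writes.
    """
    durations = []
    written = 0
    # buffering=0 gives an unbuffered file object, so each write() is a
    # direct write to the OS and a blocked write shows up in the timing.
    with open(path, "wb", buffering=0) as f:
        while written < total_bytes:
            data = os.urandom(min(chunk_bytes, total_bytes - written))
            start = time.monotonic()
            n = f.write(data)  # may write fewer bytes than requested
            durations.append(time.monotonic() - start)
            written += n
    return durations


def find_stalls(durations, threshold_s=1.0):
    """Return (chunk_index, seconds) for every write that took >= threshold_s."""
    return [(i, d) for i, d in enumerate(durations) if d >= threshold_s]
```

Calling `find_stalls(timed_write("/mnt/tank/share/stalltest.bin", 8 * 1024**3))` would then list where an 8GiB write blocked. The path is hypothetical, so substitute a dataset on the raidz pool, and delete the test file afterwards.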

Copying over FTP simply times out when the pause happens, and recently the pause has also started intermittently causing a 0x8007003b "network error" on Windows. I did upgrade from 11.2-U3 to 11.2-U7 in the last week, so maybe something changed there that makes Samba/Windows more sensitive to this issue.

The dataset on the raidz pool that I write to the most does have de-duplication enabled, but if I copy to a dataset without dedupe I get the same behaviour. The strange thing is that copying the exact same file a second time works without any pauses or slowdown at all.

Does anyone have advice on what might cause this behaviour? It feels like a cache is being filled, and when it has to commit to disk there is so much data to flush that writes stall for a long time. Maybe some settings can be tuned, but none of the logs I checked showed any errors, so it's difficult to know where to start.

Thanks in advance.
