Hi there, I'm wondering if someone may be able to help me. I've created a Windows 10 VM on FreeNAS 11.0-U2 but am unable to allocate more than two cores to it (I can allocate them in FreeNAS, but Windows shows a maximum of 2 cores). I understand this is a result of Windows 10's two-CPU limitation combined with bhyve's practice of allocating each core as an entire CPU.
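To make the arithmetic concrete, here is a minimal Python sketch. It assumes that Windows 10 (Pro) only uses two CPU sockets, that bhyve's default topology is one core per socket, and that vCPUs fill sockets in order. `usable_vcpus` and `max_sockets` are hypothetical names for illustration. Only `cores_per_package` and `threads_per_core` mirror the `hw.vmm.topology.*` tunables.

```python
def usable_vcpus(vcpus: int, cores_per_package: int = 1,
                 threads_per_core: int = 1, max_sockets: int = 2) -> int:
    """Estimate how many vCPUs a socket-limited guest OS can actually use.

    Assumes vCPUs are packed into sockets of cores_per_package * threads_per_core
    logical CPUs each, and that the guest ignores sockets beyond max_sockets
    (2 is an assumed limit for Windows 10 Pro, not a bhyve setting).
    """
    per_socket = cores_per_package * threads_per_core
    return min(vcpus, max_sockets * per_socket)


# Assumed default of 1 core per socket: 4 vCPUs appear as 4 sockets.
print(usable_vcpus(4))                       # → 2
# With cores_per_package=4, the same 4 vCPUs fit in a single socket.
print(usable_vcpus(4, cores_per_package=4))  # → 4
```

Under these assumptions, raising `cores_per_package` is what lets all the vCPUs land within the two sockets Windows will use.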

From my searches around here I found that you have to add the following tunables to get around this issue:

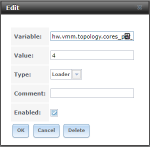

hw.vmm.topology.cores_per_package=4

hw.vmm.topology.threads_per_core=1

However, I get an error when submitting those tunables:


```
Request Method: POST
Request URL: http://192.168.1.104/admin/system/tunable/add/
Software Version: FreeNAS-11.0-U2 (e417d8aa5)
Exception Type: CallTimeout
Exception Value: Call timeout
Exception Location: /usr/local/lib/python3.6/site-packages/middlewared/client/client.py in call, line 233
Server time: Tue, 8 Aug 2017 17:44:31 +0200
```

```
Environment:

Software Version: FreeNAS-11.0-U2 (e417d8aa5)
Request Method: POST
Request URL: http://192.168.1.104/admin/system/tunable/add/

Traceback:

File "/usr/local/lib/python3.6/site-packages/django/core/handlers/exception.py" in inner
  39. response = get_response(request)

File "/usr/local/lib/python3.6/site-packages/django/core/handlers/base.py" in _legacy_get_response
  249. response = self._get_response(request)

File "/usr/local/lib/python3.6/site-packages/django/core/handlers/base.py" in _get_response
  178. response = middleware_method(request, callback, callback_args, callback_kwargs)

File "./freenasUI/freeadmin/middleware.py" in process_view
  162. return login_required(view_func)(request, *view_args, **view_kwargs)

File "/usr/local/lib/python3.6/site-packages/django/contrib/auth/decorators.py" in _wrapped_view
  23. return view_func(request, *args, **kwargs)

File "./freenasUI/freeadmin/options.py" in wrapper
  208. return self._admin.admin_view(view)(*args, **kwargs)

File "/usr/local/lib/python3.6/site-packages/django/utils/decorators.py" in _wrapped_view
  149. response = view_func(request, *args, **kwargs)

File "/usr/local/lib/python3.6/site-packages/django/views/decorators/cache.py" in _wrapped_view_func
  57. response = view_func(request, *args, **kwargs)

File "./freenasUI/freeadmin/site.py" in inner
  142. return view(request, *args, **kwargs)

File "./freenasUI/freeadmin/options.py" in add
  362. mf.save()

File "./freenasUI/system/forms.py" in save
  1423. notifier().reload("loader")

File "./freenasUI/middleware/notifier.py" in reload
  280. return c.call('service.reload', what, {'onetime': onetime}, **kwargs)

File "./freenasUI/middleware/notifier.py" in reload
  280. return c.call('service.reload', what, {'onetime': onetime}, **kwargs)

File "/usr/local/lib/python3.6/site-packages/middlewared/client/client.py" in call
  233. raise CallTimeout("Call timeout")

Exception Type: CallTimeout at /admin/system/tunable/add/
Exception Value: Call timeout
```

```
Request information

GET
No GET data

POST
Variable               Value
__all__                ''
tun_var                'hw.vmm.topology.cores_per_package'
tun_value              '4'
tun_type               'loader'
tun_comment            ''
tun_enabled            'on'
__form_id              'dialogForm_tunable'

FILES
No FILES data

COOKIES
Variable               Value
sessionid              '9bd3x820j99tjo3jh75ku9xp502dai1z'
fntreeSaveStateCookie  'root'
csrftoken              'jIL3pfVYzTEm2jVp81N3dHRvqSy7fkod9mK7rGD6QRuk58ugDfGyj10g51FgKu2s'

META
Variable               Value
```
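The request data shows the tunable was submitted with `tun_type 'loader'`. The traceback shows the timeout happened inside `notifier().reload("loader")`, called from the form's `save()`. That would be consistent with the GUI listing the tunable as enabled even though the loader configuration may never have been regenerated. Below is a minimal Python sketch for checking that. It assumes you pass in the text of whichever loader configuration file FreeNAS 11 generates; the exact path is an assumption you should confirm on your system. `loader_tunables` and `missing_tunables` are hypothetical helper names.

```python
import re

# Matches loader.conf-style assignments such as:
#   hw.vmm.topology.cores_per_package="4"
# with optional quotes and an optional trailing "# comment".
LOADER_LINE = re.compile(r'^\s*([A-Za-z0-9_.]+)\s*=\s*"?([^"#]*?)"?\s*(?:#.*)?$')


def loader_tunables(text: str) -> dict:
    """Collect name -> value pairs from loader.conf-style text, skipping comment lines."""
    tunables = {}
    for line in text.splitlines():
        if line.lstrip().startswith("#"):
            continue
        match = LOADER_LINE.match(line)
        if match:
            tunables[match.group(1)] = match.group(2)
    return tunables


def missing_tunables(text: str, wanted: dict) -> dict:
    """Return the wanted tunables that are absent or set to a different value."""
    found = loader_tunables(text)
    return {name: value for name, value in wanted.items() if found.get(name) != value}
```

For example, read the file with `open(path).read()` (with `path` being whatever file you confirmed). Then pass it with `{"hw.vmm.topology.cores_per_package": "4", "hw.vmm.topology.threads_per_core": "1"}` as `wanted`. An empty result suggests both entries made it into the file.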

The GUI says the tunable is enabled even after the error, but the number of cores in the VM does not change, even after restarts.
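One way to tell whether the tunables ever reached the kernel, as opposed to just the GUI, is to query them on the FreeNAS host after a reboot with `sysctl hw.vmm.topology`. Below is a minimal Python sketch written for Python 3.6, which matches the paths in the traceback (so it uses `stdout=subprocess.PIPE` rather than the newer `capture_output`). It assumes the `hw.vmm.topology` node is present, which presumably requires the vmm module to be loaded. `parse_sysctl` and `read_vmm_topology` are hypothetical helper names.

```python
import subprocess


def parse_sysctl(output: str) -> dict:
    """Parse sysctl output lines of the form 'name: value' into a dict."""
    values = {}
    for line in output.splitlines():
        name, sep, value = line.partition(":")
        if sep:  # skip blank or malformed lines
            values[name.strip()] = value.strip()
    return values


def read_vmm_topology() -> dict:
    """Query the running kernel; only meaningful on the FreeNAS/FreeBSD host itself."""
    result = subprocess.run(["sysctl", "hw.vmm.topology"],
                            stdout=subprocess.PIPE, universal_newlines=True,
                            check=True)
    return parse_sysctl(result.stdout)
```

On the host, `read_vmm_topology().get("hw.vmm.topology.cores_per_package")` should return `'4'` if the tunable was applied. Any other value suggests it never took effect, regardless of what the GUI shows.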

My CPU is an Intel(R) Xeon(R) CPU E3-1231 v3, if that makes a difference.

Thanks in advance