scurrier

Patron

- Joined: Jan 2, 2014
- Messages: 297

Hello,

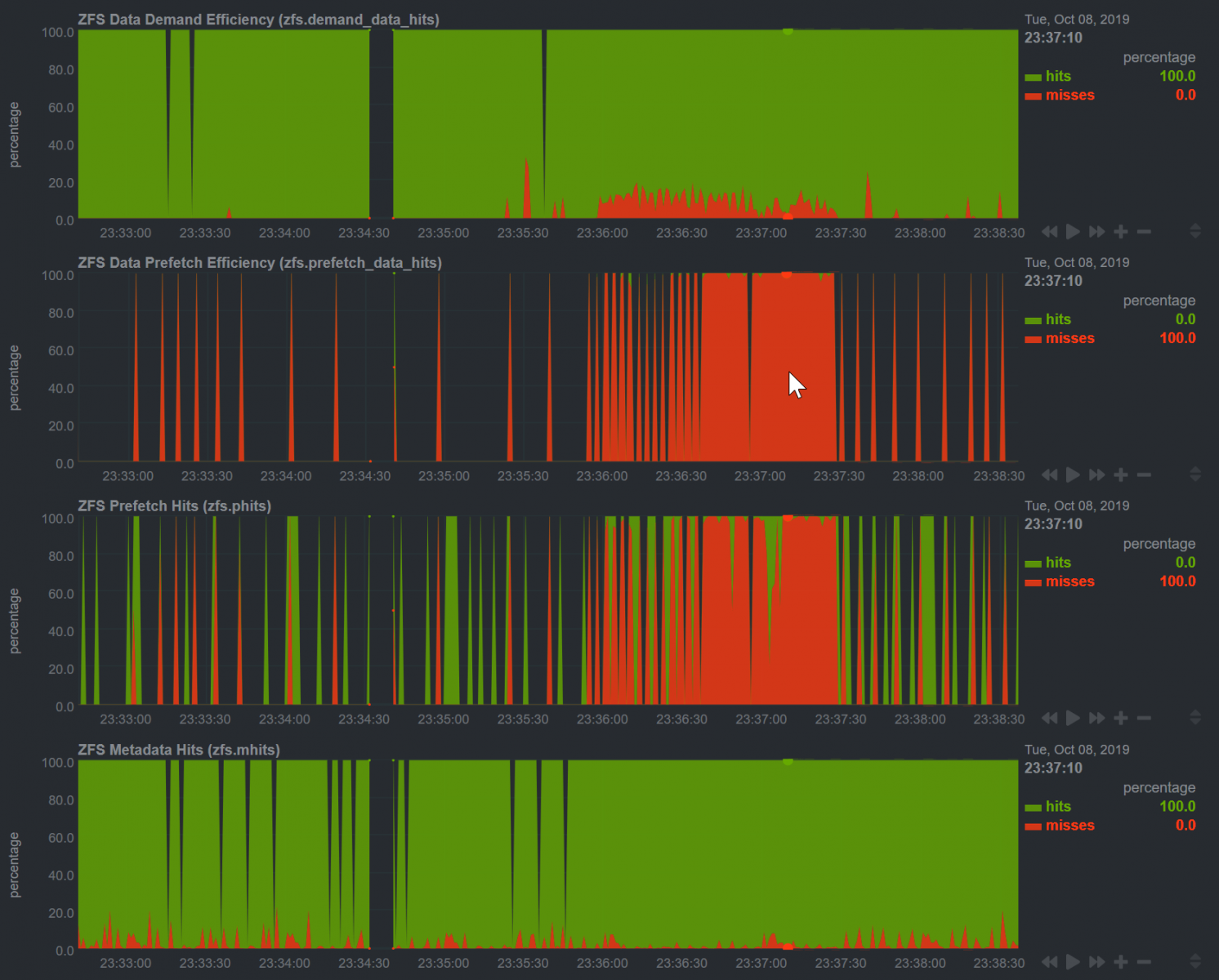

I have the system shown in my signature. It has a pool of three mirrored pairs of 4TB disks (six disks total), which holds a large 20GB file shared via SMB. When transferring this file, I noticed performance was lower than I would expect from a six-disk pool: in the low 200 MB/s range over 10GbE to a PCIe SSD on the receiving machine. I checked netdata and saw that prefetch efficiency was very low. Any idea why this would be? I thought sequential transfers were ideal for prefetch, so I was expecting much higher efficiency.

In the screenshot below, the transfer started around 23:36:00 and ended around 23:37:30. Note that prefetch efficiency is the second chart. I included the other charts for some more context.
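
As a rough sanity check, the transfer window and file size above can be turned into an average throughput. This is only a sketch: the function name `average_throughput_mb_s` is made up for illustration, the timestamps are approximate, and I'm not sure whether the 20GB is decimal or binary, so both are computed.

```python
from datetime import datetime


def average_throughput_mb_s(size_bytes: int, start: str, end: str) -> float:
    """Average throughput in MB/s (1 MB = 10**6 bytes) for a transfer
    that starts and ends at the given HH:MM:SS times on the same day."""
    fmt = "%H:%M:%S"
    elapsed = (datetime.strptime(end, fmt) - datetime.strptime(start, fmt)).total_seconds()
    if elapsed <= 0:
        raise ValueError("end time must be after start time")
    return size_bytes / elapsed / 1e6


# Approximate transfer window read off the charts: 90 seconds.
START, END = "23:36:00", "23:37:30"

# Assumption: the file is either 20 GB (decimal) or 20 GiB (binary).
decimal_rate = average_throughput_mb_s(20 * 10**9, START, END)
binary_rate = average_throughput_mb_s(20 * 2**30, START, END)

print(f"20 GB  over 90 s: {decimal_rate:.0f} MB/s")
print(f"20 GiB over 90 s: {binary_rate:.0f} MB/s")
```

Either way the average lands in the 220 to 240 MB/s range, which agrees with the low 200 MB/s rate I saw during the transfer.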
