Stanri010


So the general recommendation for the number of disks goes like this:

4-disk RAID-Z2 = 128KiB / 2 = 64KiB = good

5-disk RAID-Z2 = 128KiB / 3 = ~43KiB = BAD!

6-disk RAID-Z2 = 128KiB / 4 = 32KiB = good

10-disk RAID-Z2 = 128KiB / 8 = 16KiB = good
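The arithmetic above can be sketched in a few lines of Python. This is only an illustration of the rule of thumb as I read it: the 128 KiB figure is the default recordsize used in the lines above, "good" is taken to mean that the number of data disks is a power of two, and the function names are made up for this example.

```python
RECORD_KIB = 128  # default recordsize assumed in the arithmetic above


def per_disk_kib(total_disks: int, parity: int = 2) -> float:
    """KiB of one full record that lands on each data disk."""
    data_disks = total_disks - parity
    if data_disks < 1:
        raise ValueError("need at least one data disk")
    return RECORD_KIB / data_disks


def rule_of_thumb(total_disks: int, parity: int = 2) -> str:
    """'good' when the data-disk count is a power of two (assumed reading of the rule)."""
    data_disks = total_disks - parity
    is_power_of_two = data_disks > 0 and (data_disks & (data_disks - 1)) == 0
    return "good" if is_power_of_two else "BAD"


for disks in (4, 5, 6, 8, 10):
    print(f"{disks}-disk RAID-Z2 = {RECORD_KIB}KiB / {disks - 2} "
          f"= {per_disk_kib(disks):.1f}KiB = {rule_of_thumb(disks)}")
```

By this rule an 8-disk RAID-Z2 (6 data disks) falls in the "BAD" column, which is what prompts the question below.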

However, there are articles floating around that pretty much say this recommendation is not all that important, either because of compression or because, with varying block sizes, it just doesn't have that big of an impact.

ZFS stripe width: http://blog.delphix.com/matt/2014/06/06/zfs-stripe-width/

4k overhead: https://web.archive.org/web/2014040...s.org/ritk/zfs-4k-aligned-space-overhead.html

Calculator: https://jsfiddle.net/Biduleohm/hfqdpbLm/8/embedded/result/

So am I correct in understanding that if I run an 8-drive raidz2 vdev, the only downside is that I might lose a little bit of theoretical space? There shouldn't be any throughput or IOPS performance hit, right?

EDIT: I guess my question stems from the fact that my enclosures generally come in multiples of 4 bays per cage, and the HBA has 1 SAS port breaking out to 4 SATA. For future dependability with raidz2, multiples of 8 are ideal for my situation.

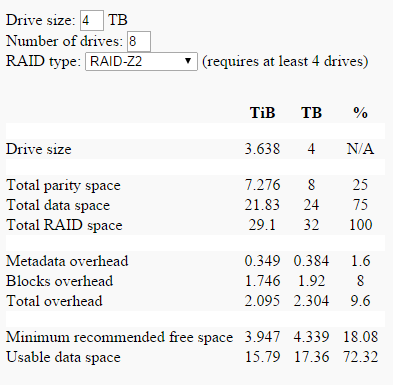

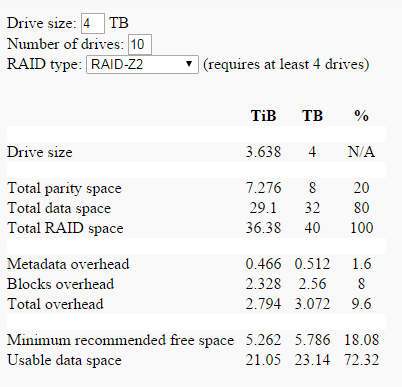

Between the two configurations, 8 and 10 drives, the overhead difference doesn't seem to be that much. Or is that calculator wrong?
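One way to sanity-check the calculator is to work out the allocation by hand. The sketch below assumes the RAID-Z allocation rule as I understand it from the stripe-width article linked above: each row of data sectors gets its own parity sectors, and the total is rounded up to a multiple of (parity + 1). It also assumes 4 KiB sectors (ashift=12) and uncompressed 128 KiB blocks. The function name is hypothetical, and none of this is the calculator's actual code.

```python
def raidz_alloc_sectors(data_sectors: int, total_disks: int, parity: int) -> int:
    """Sectors allocated for one block on a RAID-Z vdev (assumed rule, see above)."""
    data_disks = total_disks - parity
    rows = -(-data_sectors // data_disks)        # ceiling division: stripe rows needed
    total = data_sectors + parity * rows         # data plus per-row parity
    pad_unit = parity + 1
    return -(-total // pad_unit) * pad_unit      # round up to a multiple of parity + 1


SECTOR_KIB = 4    # assumption: ashift=12
BLOCK_KIB = 128   # assumption: full, uncompressed default-size record
data_sectors = BLOCK_KIB // SECTOR_KIB

for disks in (8, 10):
    alloc = raidz_alloc_sectors(data_sectors, disks, parity=2)
    ideal = data_sectors * disks / (disks - 2)   # what the naive disk ratio predicts
    print(f"{disks}-disk RAID-Z2: {alloc} sectors allocated, "
          f"{ideal:.1f} ideal, {alloc / ideal - 1:.1%} extra")
```

Under these assumptions a 128 KiB block takes 45 sectors on 8 disks and 42 on 10, roughly 5% above the naive figure in both cases. That would be consistent with the calculator showing little difference between the two layouts. Compressed or smaller blocks will give different numbers.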

- Start a RAIDZ1 at 3, 5, or 9 disks.

- Start a RAIDZ2 at 4, 6, or 10 disks.

- Start a RAIDZ3 at 5, 7, or 11 disks.
