Hi All,

Complete FreeNAS new kid here and this is my first post! :) I've had a real interest in FreeNAS over the past few months and, after reading into it further, I have gone and built myself a dedicated storage system (please see sig for h/w details).

I've created a volume using the disks I currently have in the box, which are 4x 4TB WD Reds in a RAID-Z configuration. (I have read that RAID-Z with disks of this size is strongly discouraged and that I should ideally use RAID-Z2 or Z3. I understand the risk I'm taking.)

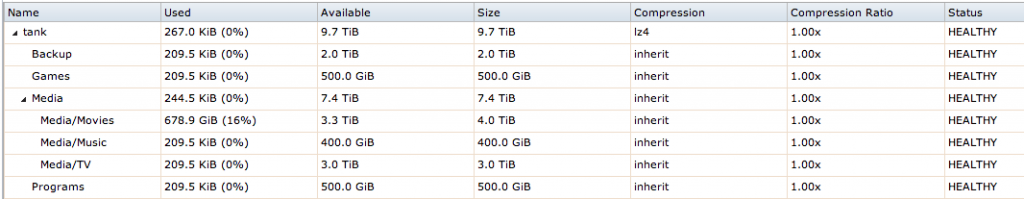

After setting up the RAID-Z volume, I had 10.4TB available, which is what I was expecting (shown in the Storage tab)...

The problem came shortly after when I began to copy data from my old Windows server to the datasets I had created within the pool...

I set the quota for Volume1 as 10.4T

I gave quotas for the following datasets:

Backup - 1.2T

Movies - 6.4T

Music - 400G

TV Shows - 2.4T

-------------------

Which all adds up to 10.4T

-------------------
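To double-check the quota arithmetic, here is a minimal Python sketch. It assumes that 1T is treated as 1024G when the quotas are entered, which is my assumption rather than something I found in the manual; the dictionary name is only for illustration.

```python
# Dataset quotas from the list above, expressed in "T".
# Assumption: 1T = 1024G, so 400G is slightly less than 0.4T.
quotas_t = {
    "Backup": 1.2,
    "Movies": 6.4,
    "Music": 400 / 1024,
    "TV Shows": 2.4,
}

total_t = sum(quotas_t.values())
print(f"Sum of dataset quotas: {total_t:.1f}T")
```

To one decimal place this comes out at the same 10.4T I set as the quota on Volume1.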

1) I then partly filled the datasets with data, but the space used within each dataset is not reflected in the volume's used space; it always reports 0%. I can't find anything in the manual that explains this.

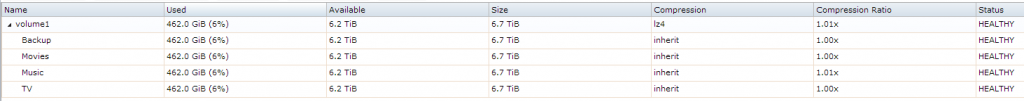

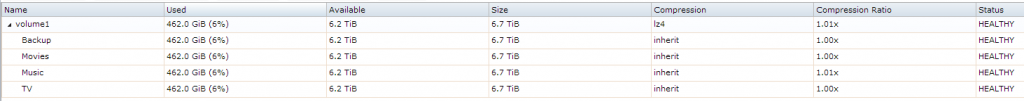

2) Then, after copying more data across, all of a sudden the size of the volume dropped down from 10.4TB to 7.2TB...and then again to 6.7TB. I can still read/write/edit all the data within the volume.

3) Now the Used/Available/Size columns for all the datasets and the volume are showing values I can't explain. I am using far more than 462GB; it is closer to 4TB.

I'm not sure whether this is an issue with FreeNAS or because I am doing something silly and just didn't read the manual thoroughly enough when creating volumes and datasets. I have read a few other threads with the same issue but nothing like this on 9.2.1 RELEASE.

Tonight I upgraded to 9.2.1.1-RELEASE (x64) to see whether it would resolve the issue, but it didn't.

After reading other similar threads, I ran "zfs list", which is said to give much more accurate information. Reassuringly, if I add USED (4.13T) and AVAIL (6.24T) for volume1 together I get 10.37T, which is essentially the 10.4TB I expected.

Code:

    [root@ODYSSEY ~]# zfs list
    NAME                     USED  AVAIL  REFER  MOUNTPOINT
    volume1                 4.13T  6.24T   462G  /mnt/volume1
    volume1/.samba4         2.25M  6.24T  2.25M  /mnt/volume1/.samba4
    volume1/.system         3.69M  6.24T   244K  /mnt/volume1/.system
    volume1/.system/cores    209K  6.24T   209K  /mnt/volume1/.system/cores
    volume1/.system/samba4  2.84M  6.24T  2.84M  /mnt/volume1/.system/samba4
    volume1/.system/syslog   413K  6.24T   413K  /mnt/volume1/.system/syslog
    volume1/Backup           209K  1.20T   209K  /mnt/volume1/Backup
    volume1/Movies          2.45T  3.95T  2.45T  /mnt/volume1/Movies
    volume1/Music           63.3G   337G  63.3G  /mnt/volume1/Music
    volume1/TV              1.17T  1.23T  1.17T  /mnt/volume1/TV
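To check that the "zfs list" figures are at least internally consistent, here is a small Python sketch. The values are copied from the output above; the helper name `to_tib` is made up for illustration, and I am assuming the K/M/G/T suffixes are powers of 1024.

```python
# Assumption: zfs list suffixes are binary multiples (K = 1024 bytes, etc.).
UNITS = {"K": 1024**1, "M": 1024**2, "G": 1024**3, "T": 1024**4}

def to_tib(size: str) -> float:
    """Convert a size string such as '462G' or '4.13T' to TiB."""
    return float(size[:-1]) * UNITS[size[-1].upper()] / UNITS["T"]

# Root dataset figures for volume1.
root_used, root_avail, root_refer = "4.13T", "6.24T", "462G"

# USED for the direct children of volume1
# (.samba4, .system, Backup, Movies, Music, TV).
children_used = ["2.25M", "3.69M", "209K", "2.45T", "63.3G", "1.17T"]

# USED + AVAIL on the root should come close to the expected pool size.
total = to_tib(root_used) + to_tib(root_avail)

# The root's USED should roughly equal what it references itself
# plus everything used by its children.
rebuilt_used = to_tib(root_refer) + sum(to_tib(s) for s in children_used)

print(f"USED + AVAIL           = {total:.2f}T")
print(f"REFER + children USED  = {rebuilt_used:.2f}T")
```

Both lines come out as expected (10.37T and 4.13T), so the 462G seems to be only the data sitting directly in the root of volume1, while the 4.13T also counts the child datasets.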

I have also done "zpool list"

Code:

    [root@ODYSSEY ~]# zpool list
    NAME      SIZE  ALLOC   FREE  CAP  DEDUP  HEALTH  ALTROOT
    volume1  14.5T  5.69T  8.81T  39%  1.00x  ONLINE  /mnt

I don't really understand this, but does zpool list show the raw storage available?

So 16TB of disk is actually showing as 14.5TB?
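A rough sketch of where 14.5T could come from, assuming the drives are sold in decimal terabytes (10^12 bytes) while "zpool list" reports binary units (2^40 bytes) and counts parity space as well. The variable names are only for illustration.

```python
DISKS = 4
DISK_BYTES = 4 * 10**12   # one "4TB" drive, decimal terabytes (assumption)
TIB = 2**40               # one binary terabyte

# Raw pool size across all four disks, parity included.
raw_tib = DISKS * DISK_BYTES / TIB

# RAID-Z (single parity) on 4 disks leaves roughly 3/4 of that for data,
# before any filesystem overhead is taken off.
data_tib = raw_tib * (DISKS - 1) / DISKS

print(f"raw:  {raw_tib:.2f} TiB")
print(f"data: {data_tib:.2f} TiB")
```

The raw figure works out to about 14.55, which matches the 14.5T shown. The data figure is about 10.9, somewhat above the 10.4TB I actually see; I assume the remainder is filesystem overhead, but I have not confirmed that.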

And here is df -h...

Code:

    [root@ODYSSEY ~]# df -h
    Filesystem              Size  Used  Avail  Capacity  Mounted on
    /dev/ufs/FreeNASs2a     926M  826M    26M    97%     /
    devfs                   1.0k  1.0k     0B   100%     /dev
    /dev/md0                4.6M  3.3M   902k    79%     /etc
    /dev/md1                823k  2.0k   756k     0%     /mnt
    /dev/md2                149M   51M    86M    37%     /var
    /dev/ufs/FreeNASs4       19M    3M    15M    16%     /data
    volume1                 6.7T  462G   6.2T     7%     /mnt/volume1
    volume1/.samba4         6.2T  2.3M   6.2T     0%     /mnt/volume1/.samba4
    volume1/.system         6.2T  244k   6.2T     0%     /mnt/volume1/.system
    volume1/.system/samba4  6.2T  2.9M   6.2T     0%     /mnt/volume1/.system/samba4
    volume1/.system/syslog  6.2T  418k   6.2T     0%     /mnt/volume1/.system/syslog
    volume1/.system/cores   6.2T  209k   6.2T     0%     /mnt/volume1/.system/cores
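One possible reading of the shrinking volume size, sketched in Python. I am assuming that df works out Size for a ZFS dataset as that dataset's own Used plus Avail; that is my guess from the numbers, not something I found in the manual. The figures are the volume1 values from "zfs list" (462G referenced, 6.24T available).

```python
# Assumption: df "Size" for a ZFS dataset = its own Used + Avail.
used_tib = 462 / 1024     # 462G stored directly in volume1
avail_tib = 6.24          # AVAIL for volume1 from zfs list

size_tib = used_tib + avail_tib
print(f"Used + Avail = {size_tib:.1f}T")
```

That gives 6.7T, the same Size df shows for volume1. If the assumption holds, the reported size would keep dropping as the child datasets fill up and Avail shrinks, even though the pool itself has not changed.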

I hope I have been as concise as possible by giving all the relevant information.

Not too fussed if I have to delete the pool and destroy all data, but if I can avoid it I would obviously like to - I was warned that newbies can mess up the first few times so I still have everything on my old server.

I would be happy even if the answer was just some reassurance that I can still continue using my FreeNAS system without having to start all over again, and just wait for a bug fix if that's all it takes...

Really appreciate you reading this guys and gals!