I need help adding USB passthrough to my Windows VM.

I have been running a Windows VM with USB PCI device passthrough for about a year. In the older version of TrueNAS Scale I used the PCI Passthrough Device option, picked my controller, and everything worked flawlessly. I recently updated TrueNAS Scale to 22.12.3.3 and my VM image disappeared, so I reinstalled the Windows VM. Since then I keep running into this error:

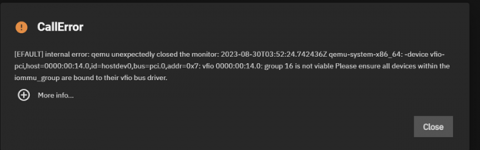

Error: Traceback (most recent call last): File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/supervisor/supervisor.py", line 172, in start if self.domain.create() < 0: File "/usr/lib/python3/dist-packages/libvirt.py", line 1353, in create raise libvirtError('virDomainCreate() failed') libvirt.libvirtError: internal error: qemu unexpectedly closed the monitor: 2023-08-30T05:36:29.717177Z qemu-system-x86_64: -device vfio-pci,host=0000:00:14.0,id=hostdev0,bus=pci.0,addr=0x7: vfio 0000:00:14.0: group 16 is not viable Please ensure all devices within the iommu_group are bound to their vfio bus driver. During handling of the above exception, another exception occurred: Traceback (most recent call last): File "/usr/lib/python3/dist-packages/middlewared/main.py", line 204, in call_method result = await self.middleware._call(message['method'], serviceobj, methodobj, params, app=self) File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1344, in _call return await methodobj(*prepared_call.args) File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1378, in nf return await func(*args, **kwargs) File "/usr/lib/python3/dist-packages/middlewared/schema.py", line 1246, in nf res = await f(*args, **kwargs) File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/vm_lifecycle.py", line 46, in start await self.middleware.run_in_thread(self._start, vm['name']) File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1261, in run_in_thread return await self.run_in_executor(self.thread_pool_executor, method, *args, **kwargs) File "/usr/lib/python3/dist-packages/middlewared/main.py", line 1258, in run_in_executor return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs)) File "/usr/lib/python3.9/concurrent/futures/thread.py", line 52, in run result = self.fn(*self.args, **self.kwargs) File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/vm_supervisor.py", line 68, in _start 
self.vms[vm_name].start(vm_data=self._vm_from_name(vm_name)) File "/usr/lib/python3/dist-packages/middlewared/plugins/vm/supervisor/supervisor.py", line 181, in start raise CallError('\n'.join(errors)) middlewared.service_exception.CallError: [EFAULT] internal error: qemu unexpectedly closed the monitor: 2023-08-30T05:36:29.717177Z qemu-system-x86_64: -device vfio-pci,host=0000:00:14.0,id=hostdev0,bus=pci.0,addr=0x7: vfio 0000:00:14.0: group 16 is not viable Please ensure all devices within the iommu_group are bound to their vfio bus driver.
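The key line in the traceback is `vfio 0000:00:14.0: group 16 is not viable`. Before QEMU can take over a PCI device through vfio-pci, every other device in the same IOMMU group must also be bound to a VFIO driver or left unbound (the kernel also tolerates some PCIe bridges). The sketch below lists the members of the controller's IOMMU group and the driver each one is bound to, which shows which device is blocking passthrough. It is a minimal, read-only sketch that assumes a standard Linux sysfs layout and that `python3` is available in the TrueNAS shell (the traceback shows a Python 3.9 install); the function names are hypothetical, not part of TrueNAS.

```python
import os
import sys


def iommu_group_report(bdf, sysfs_root="/sys"):
    """Return (group, [(member_bdf, driver_or_None), ...]) for the IOMMU group of `bdf`."""
    group_link = os.path.join(sysfs_root, "bus", "pci", "devices", bdf, "iommu_group")
    if not os.path.islink(group_link):
        # No iommu_group link: the device is missing, or the IOMMU (VT-d) is not enabled.
        raise FileNotFoundError(f"no IOMMU group link for {bdf}: {group_link}")
    # The link points at .../kernel/iommu_groups/<N>; its last component is the group number.
    group = os.path.basename(os.path.realpath(group_link))
    members_dir = os.path.join(sysfs_root, "kernel", "iommu_groups", group, "devices")
    members = []
    for member in sorted(os.listdir(members_dir)):
        driver_link = os.path.join(sysfs_root, "bus", "pci", "devices", member, "driver")
        # A device bound to a driver has a "driver" symlink; an unbound device has none.
        driver = os.path.basename(os.path.realpath(driver_link)) if os.path.islink(driver_link) else None
        members.append((member, driver))
    return group, members


def conflicting_members(members):
    """Members bound to a driver other than vfio-pci (unbound devices are not listed)."""
    return [dev for dev, drv in members if drv is not None and drv != "vfio-pci"]


if __name__ == "__main__":
    target = sys.argv[1] if len(sys.argv) > 1 else "0000:00:14.0"
    try:
        group, members = iommu_group_report(target)
    except OSError as exc:
        print(f"Could not inspect {target}: {exc}")
    else:
        print(f"IOMMU group {group}:")
        for dev, drv in members:
            print(f"  {dev}  driver={drv or '(none)'}")
        print("Bound to something other than vfio-pci:", conflicting_members(members) or "none")
```

Saved under a hypothetical name such as `iommu_check.py`, it would be run as `python3 iommu_check.py 0000:00:14.0`. It only reads sysfs and changes nothing on the system.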

I created another VM instance and added USB passthrough via PCI device passthrough, but I get the same error. If I remove the PCI passthrough device, the VM starts up just fine.

I noticed that there is a new option, "USB Passthrough Device", in addition to "PCI Passthrough Device". When I tried configuring the USB passthrough option, I ran into the following issues:

1. I don't know what controller type I have, so I tried selecting each option.

2. I don't know what device I have, because for every option I pick under Controller Type, the only choice under Device is "Specify custom".

3. For the "Specify custom" option I have to enter a Vendor ID and Product ID. Where do I get these? I tried typing "lsusb" into the shell and got "zsh: command not found: lsusb".
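On Linux hosts the kernel exposes each USB device's Vendor ID and Product ID as the `idVendor` and `idProduct` files under `/sys/bus/usb/devices`, so they can be read without `lsusb` (for a quick look, `cat /sys/bus/usb/devices/*/idVendor` works too). The sketch below pairs the two IDs with the optional manufacturer and product strings so each device is recognizable. It assumes a standard Linux sysfs layout and `python3` in the TrueNAS shell; the function names are hypothetical, and whether the "Specify custom" form expects the IDs with or without a `0x` prefix is not confirmed here.

```python
import os


def _read_attr(dev_dir, attr):
    """Read one sysfs attribute, returning "" if the file is missing or unreadable."""
    try:
        with open(os.path.join(dev_dir, attr)) as f:
            return f.read().strip()
    except OSError:
        return ""


def list_usb_devices(sysfs_root="/sys"):
    """List USB devices with their vendor/product IDs as exposed under /sys/bus/usb/devices."""
    base = os.path.join(sysfs_root, "bus", "usb", "devices")
    devices = []
    for name in sorted(os.listdir(base)):
        dev_dir = os.path.join(base, name)
        # Interface entries such as "1-1:1.0" have no idVendor file; only devices do.
        if not os.path.isfile(os.path.join(dev_dir, "idVendor")):
            continue
        devices.append({
            "sysfs_name": name,
            "vendor_id": _read_attr(dev_dir, "idVendor"),        # four hex digits
            "product_id": _read_attr(dev_dir, "idProduct"),      # four hex digits
            "manufacturer": _read_attr(dev_dir, "manufacturer"),  # optional string descriptor
            "product": _read_attr(dev_dir, "product"),            # optional string descriptor
        })
    return devices


if __name__ == "__main__":
    try:
        for dev in list_usb_devices():
            print(f"{dev['vendor_id']}:{dev['product_id']}  "
                  f"{dev['manufacturer']} {dev['product']}  ({dev['sysfs_name']})")
    except OSError as exc:
        print(f"Could not read /sys/bus/usb/devices: {exc}")
```

Each printed line starts with `vendor:product`, the same pairing `lsusb` shows in its `ID` column, with the device's description next to it.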

In Summary:

1. I don't know why the PCI passthrough device doesn't work anymore and gives me the error above.

2. The new USB passthrough option doesn't give me any device choices, and I don't know my controller type, Vendor ID, or Product ID.

Any help with this would be highly appreciated.

I am running

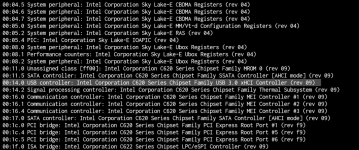

- SUPERMICRO 6049P-ECR36H.

- 00:14.0 USB controller: Intel Corporation C620 Series Chipset Family USB 3.0 xHCI Controller (rev 09)
