Egon Olieux (Dabbler, joined Oct 7, 2014, 20 messages)

Hi all,

My FreeNAS server (version 9.3-STABLE-201502271818) has two zpools: one for storage, consisting of a mirror (zpool0), and one consisting of a single SSD (zpool1) for the jails and the system dataset.

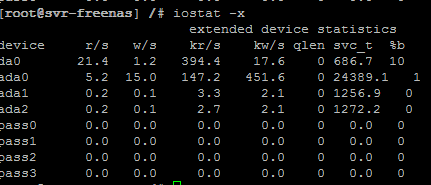

Recently I noticed that disk I/O on zpool1 (the SSD) is abnormally high, averaging around 500 kB/s of writes and 200 kB/s of reads. My first thought was that the system dataset (collectd and syslog) might be the cause, but after moving it to zpool0, writes only dropped by about 150 kB/s. The FreeNAS reports, iostat, zpool iostat and smartctl (LBAs written) all show the same numbers.
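One way to cross-check the write rate independently of iostat is to read the SSD's Total_LBAs_Written SMART attribute (listed by `smartctl -A /dev/ada0`) twice, a known number of seconds apart, and convert the difference. This is a minimal sketch, assuming each LBA counts as 512 bytes (commonly the case for Samsung drives, but worth verifying for your model); the helper name and the sample readings are hypothetical.

```python
# Hypothetical helper: average write rate from two Total_LBAs_Written readings.
# Assumption: one LBA = 512 bytes (verify this for your drive).
LBA_SIZE_BYTES = 512


def write_rate_kBps(lbas_before: int, lbas_after: int, seconds: float) -> float:
    """Return the average write rate in kB/s (1 kB = 1000 bytes) over the interval."""
    if seconds <= 0:
        raise ValueError("interval must be positive")
    if lbas_after < lbas_before:
        raise ValueError("counter went backwards")
    written_bytes = (lbas_after - lbas_before) * LBA_SIZE_BYTES
    return written_bytes / seconds / 1000


# Made-up readings taken one hour apart:
# 3,515,625 LBAs * 512 B = 1.8 GB written in 3600 s, i.e. 500 kB/s.
print(write_rate_kBps(100_000_000, 103_515_625, 3600))  # prints 500.0
```

Some tools use 1024-byte kilobytes, so expect small differences when comparing against their output.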

ada0 (SSD):

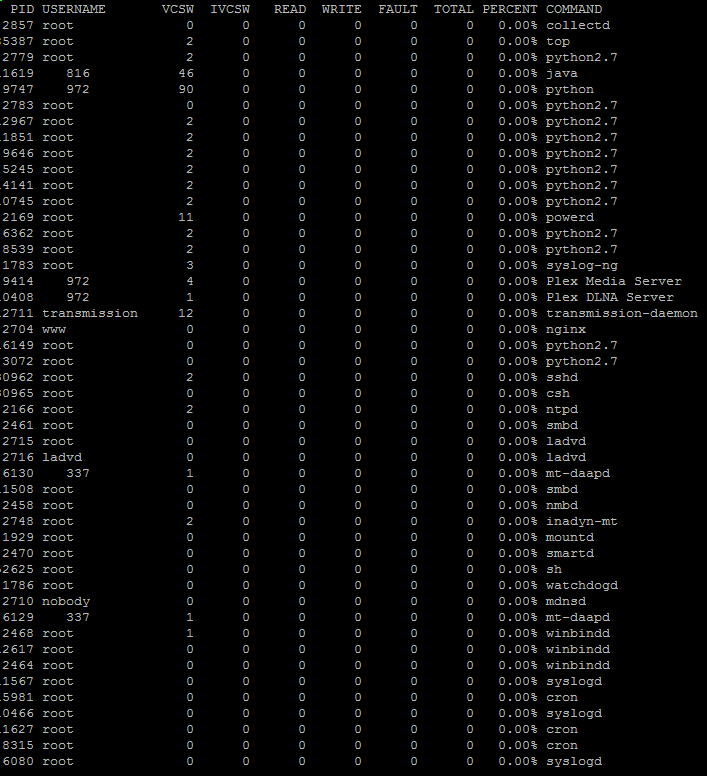

I've been trying to find the cause of this high I/O (particularly the writes, since they shorten the SSD's lifespan) without any success. Top shows essentially no writes for any process except collectd. To narrow the problem down further, I stopped each active jail in turn, but the I/O remains unchanged.
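To put the lifespan concern in numbers, a sustained rate can be projected to daily and yearly write volume. This is plain arithmetic under the assumption that the rate stays constant around the clock; the function name is hypothetical and no drive endurance rating is assumed.

```python
SECONDS_PER_DAY = 86_400


def projected_writes_gb(rate_kBps: float, days: float = 1.0) -> float:
    """GB (10**9 bytes) written if rate_kBps (1 kB = 1000 bytes) is sustained for `days`."""
    return rate_kBps * 1000 * SECONDS_PER_DAY * days / 1e9


per_day = projected_writes_gb(500)        # 43.2 GB per day
per_year = projected_writes_gb(500, 365)  # 15,768 GB, roughly 15.8 TB per year
print(f"{per_day:.1f} GB/day, {per_year / 1000:.1f} TB/year")
```

By the same arithmetic, the roughly 350 kB/s left after moving the system dataset still works out to about 30 GB per day.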

Top I/O stats:

Other details that might help:

Installed jails (& plugins):

- Subsonic

- Plexmediaserver

- Transmission

- Firefly

- Xonarr

- Owncloud

- BTSync

- Nginx (as a reverse proxy for SSL)

System specs:

- CPU: Xeon E3-1220

- RAM: 16GB ECC

- Mobo: AsRock Rack E3C226D2I

- SSD: Samsung 840 Evo 120GB

- HDD: Western Digital Red 4TB (2x)

Thanks in advance.
