MrGuvernment

Hello All,

I lurk on these forums often and read far too much, and I finally got some time to test my setup (in sig).

Along with that, I added 2 more NVMe drives to my VM pool.

Using fio (all tests run locally on TrueNAS over SSH), I tested each NVMe drive on its own to get an idea of performance before creating my pool (4 NVMe drives in 2 mirrored vdevs, for VMs over NFS).

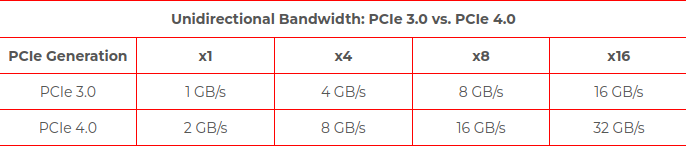

Note: the HP Z6 G4 is PCIe Gen3 based. The part that has me stumped is that I am getting faster than PCIe Gen3 x4 speeds, even though 3 of my NVMe drives are connected at that width. The BIOS is also reporting some numbers for the slots that seem wrong.

TL;DR: Perhaps I am missing something, or am completely having a brain fart, but the reported speeds seem faster than physically possible on some ports.

HP BIOS example:

Slot 3 PCIe x4 shows "Current PCIe Speed Gen3 (8Gbps)". How is that possible when, according to the manual, Slot 3 is PCI Express Gen3 x4 - PCH?

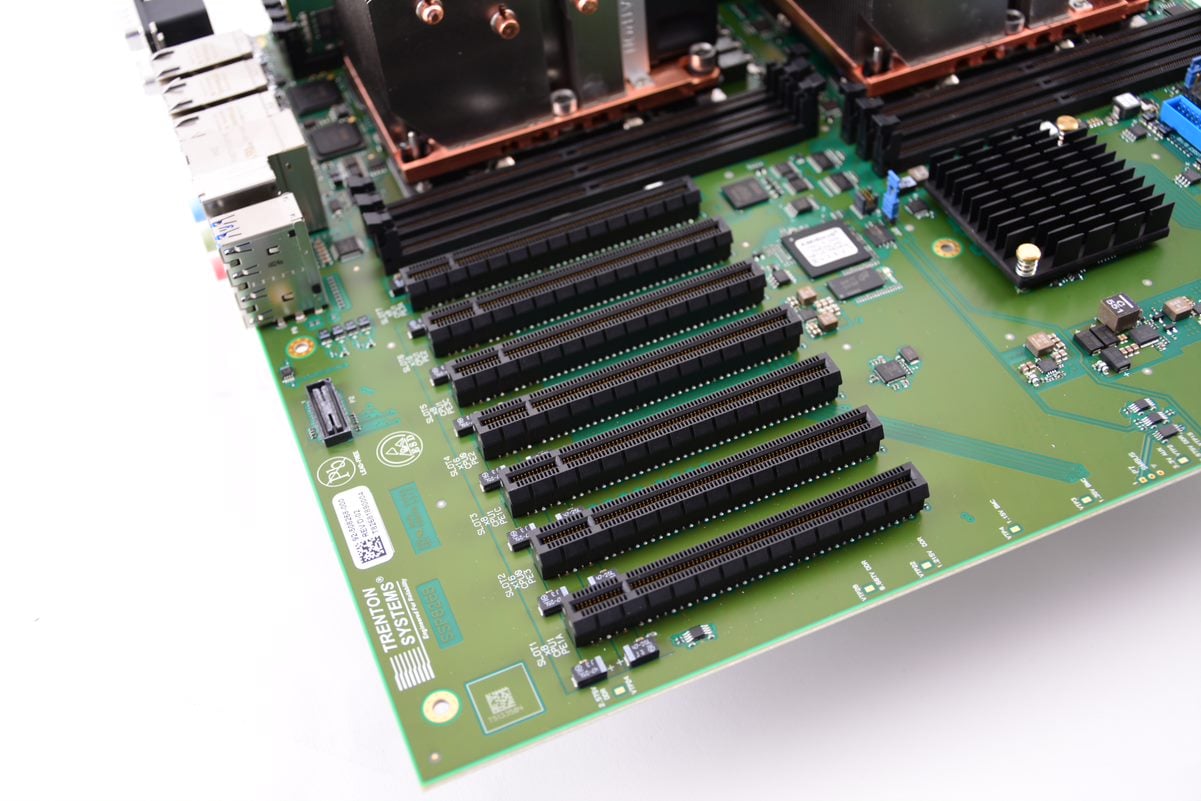
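For reference, here is a rough sketch of the arithmetic behind a Gen3 link. PCIe Gen3 signals at 8 GT/s per lane with 128b/130b encoding, so the "8Gbps" in the BIOS may simply be the per-lane rate rather than the slot total (that reading of the BIOS label is an assumption). The function name `pcie_gen3_mbps` is made up for this example, and the result is the raw link ceiling before any protocol overhead.

```shell
#!/bin/sh
# Theoretical PCIe Gen3 bandwidth in decimal MB/s for a given lane count.
# Assumes 8 GT/s per lane and 128b/130b line encoding (Gen3 values).
pcie_gen3_mbps() {
    awk -v lanes="$1" 'BEGIN {
        transfers_per_s = 8e9        # 8 GT/s per lane
        encoding = 128 / 130         # 128 payload bits per 130 line bits
        bytes_per_s = transfers_per_s * encoding / 8 * lanes
        printf "%.0f\n", bytes_per_s / 1e6
    }'
}

pcie_gen3_mbps 1     # 985
pcie_gen3_mbps 4     # 3938
pcie_gen3_mbps 8     # 7877
```

By this estimate an x4 Gen3 slot tops out a little under 4000 MB/s, which is the number the fio results should be compared against.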

PCIe slot configuration of my rig's motherboard:

PCI Express Connectors


Code:

Slot 0: Mechanical-only (2nd CPU connector)

Slot 1: PCI Express Gen3 x4 - CPU

Slot 2: PCI Express Gen3 x16 - CPU

Slot 3: PCI Express Gen3 x4 - PCH

Slot 4: PCI Express Gen3 x8 - CPU (slot converts to x4 electrical when SSD is installed in 2nd M.2 slot)*

Slot 5: PCI Express Gen3 x16 - CPU

Slot 6: PCI Express Gen3 x4 - PCH

M.2 Slot 1: M.2 PCIe Gen 3 x4 - CPU

M.2 Slot 2: M.2 PCIe Gen 3 x4 - CPU

My NVMe drives are connected as follows (those in PCIe slots use a Sabrent adapter - https://sabrent.com/products/EC-PCIE):

Code:

Slot 3: PCI Express Gen3 x4 - PCH - nvd4 - Boot Drive - 970 Evo NVMe

Slot 4: PCI Express Gen3 x8 - CPU - nvd0 - XPG 2TB GAMMIX S70 Blade - Should be at x4 because I have both M.2 slots populated

Slot 6: PCI Express Gen3 x4 - PCH - nvd1 - XPG 2TB GAMMIX S70 Blade

M.2 Slot 1: M.2 PCIe Gen 3 x4 - CPU - nvd2 - Samsung 980 PRO 2TB NVMe

M.2 Slot 2: M.2 PCIe Gen 3 x4 - CPU - nvd3 - Samsung 980 PRO 2TB NVMe
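To cross-check what the BIOS reports, the negotiated link of each NVMe controller can also be read from the OS. The sketch below assumes a FreeBSD-based TrueNAS (the `nvd` device names suggest this) and that `pciconf -lc` prints the PCI-Express capability with `link xN(xM)` and `speed A(B)` tokens, where the first value is the negotiated one and the value in parentheses is the maximum. The exact layout can vary between FreeBSD releases, so treat the parsing as illustrative. `nvme_links` is a made-up helper name.

```shell
#!/bin/sh
# Filter `pciconf -lc` output (read from stdin) down to one line per NVMe
# controller, showing negotiated(max) link width and speed.
nvme_links() {
    awk '
        # A device header line looks like "nvme0@pci0:1:0:0: class=..."
        $1 ~ /@pci/ {
            dev = ""; width = ""; speed = ""
            if ($1 ~ /^nvme[0-9]+@/) { dev = $1; sub(/@.*/, "", dev) }
            next
        }
        # Capability lines for the current NVMe device: collect the tokens
        # that follow "link" and "speed", wherever they are wrapped.
        dev != "" {
            for (i = 1; i < NF; i++) {
                if ($i == "link" && $(i + 1) ~ /^x[0-9]+\(/) width = $(i + 1)
                if ($i == "speed") speed = $(i + 1)
            }
            if (width != "" && speed != "") {
                print dev, "link=" width, "speed=" speed
                dev = ""
            }
        }
    '
}

# Usage on the TrueNAS host (not run here):
#   pciconf -lc | nvme_links
```

A Gen4-capable drive sitting in a Gen3 slot would be expected to show a negotiated speed of 8.0 with a higher maximum in parentheses.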

So, on to the testing and what I used: fio, with some methods taken from the link below. Each drive was tested as a solo drive.

How to check hard disk performance (askubuntu.com)

### Sequential READ speed with big blocks QD32 (this should be near the number you see in the specifications for your drive):

Code:

fio --name TESTSeqRead --eta-newline=5s --filename=fio-tempfile-RSeq1.dat --rw=read --size=500m --io_size=50g --blocksize=1024k --fsync=10000 --iodepth=32 --direct=1 --numjobs=1 --runtime=60 --group_reporting

## Each NVMe one at a time - 100% CPU usage single core

nvd0 READ: bw=7170MiB/s (7518MB/s), 7170MiB/s-7170MiB/s (7518MB/s-7518MB/s), io=50.0GiB (53.7GB), run=7141-7141msec

nvd1 READ: bw=7168MiB/s (7516MB/s), 7168MiB/s-7168MiB/s (7516MB/s-7516MB/s), io=50.0GiB (53.7GB), run=7143-7143msec

nvd2 READ: bw=7238MiB/s (7589MB/s), 7238MiB/s-7238MiB/s (7589MB/s-7589MB/s), io=50.0GiB (53.7GB), run=7074-7074msec

nvd3 READ: bw=7156MiB/s (7503MB/s), 7156MiB/s-7156MiB/s (7503MB/s-7503MB/s), io=50.0GiB (53.7GB), run=7155-7155msec

## All 4 NVMe tested at the same time (script run against all 4 at the same time) - 100% CPU usage single core for each

nvd0 READ: bw=6882MiB/s (7216MB/s), 6882MiB/s-6882MiB/s (7216MB/s-7216MB/s), io=50.0GiB (53.7GB), run=7440-7440msec

nvd1 READ: bw=6919MiB/s (7255MB/s), 6919MiB/s-6919MiB/s (7255MB/s-7255MB/s), io=50.0GiB (53.7GB), run=7400-7400msec

nvd2 READ: bw=6749MiB/s (7077MB/s), 6749MiB/s-6749MiB/s (7077MB/s-7077MB/s), io=50.0GiB (53.7GB), run=7586-7586msec

nvd3 READ: bw=6797MiB/s (7127MB/s), 6797MiB/s-6797MiB/s (7127MB/s-7127MB/s), io=50.0GiB (53.7GB), run=7533-7533msec
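To make the mismatch concrete, the sketch below pulls the decimal MB/s figure out of a fio summary line and compares it with the Gen3 x4 ceiling of roughly 3938 MB/s (8 GT/s per lane, 128b/130b encoding, four lanes). The helper names are made up for this example, and the parser only handles lines that report MB/s, not kB/s.

```shell
#!/bin/sh
# Approximate PCIe Gen3 x4 ceiling: 8e9 * 128/130 / 8 * 4 bytes/s, in MB/s.
GEN3_X4_MBPS=3938

# Read a fio summary line on stdin and print the first "(NNNNMB/s)" value.
fio_mbps() {
    sed -n 's/.*bw=[^(]*(\([0-9.]*\)MB\/s).*/\1/p'
}

# Report whether a fio summary line is above or within the x4 ceiling.
check_line() {
    mbps=$(printf '%s\n' "$1" | fio_mbps)
    awk -v m="$mbps" -v cap="$GEN3_X4_MBPS" 'BEGIN {
        verdict = (m > cap) ? "above" : "within"
        printf "%s MB/s is %s the Gen3 x4 ceiling of %s MB/s\n", m, verdict, cap
    }'
}

# nvd0 result from the sequential read test above
check_line "READ: bw=7170MiB/s (7518MB/s), 7170MiB/s-7170MiB/s (7518MB/s-7518MB/s), io=50.0GiB (53.7GB), run=7141-7141msec"
# prints: 7518 MB/s is above the Gen3 x4 ceiling of 3938 MB/s
```

A result above the ceiling suggests that not every byte crossed the PCIe link during the run, for example because a 500 MB test file was being served from a cache in RAM. That explanation is only a guess; the post does not confirm it.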

### Sequential WRITE speed with big blocks QD32 (this should be near the number you see in the specifications for your drive):

Code:

fio --name TESTSeqWrite --eta-newline=5s --filename=fio-tempfile-WSeq.dat --rw=write --size=500m --io_size=50g --blocksize=1024k --fsync=10000 --iodepth=32 --direct=1 --numjobs=1 --runtime=60 --group_reporting

## Each NVMe one at a time

nvd0 WRITE: bw=6465MiB/s (6780MB/s), 6465MiB/s-6465MiB/s (6780MB/s-6780MB/s), io=50.0GiB (53.7GB), run=7919-7919msec

nvd1 WRITE: bw=6544MiB/s (6862MB/s), 6544MiB/s-6544MiB/s (6862MB/s-6862MB/s), io=50.0GiB (53.7GB), run=7824-7824msec

nvd2 WRITE: bw=6278MiB/s (6583MB/s), 6278MiB/s-6278MiB/s (6583MB/s-6583MB/s), io=50.0GiB (53.7GB), run=8156-8156msec

nvd3 WRITE: bw=6456MiB/s (6770MB/s), 6456MiB/s-6456MiB/s (6770MB/s-6770MB/s), io=50.0GiB (53.7GB), run=7930-7930msec

## All 4 NVMe tested at the same time avg 86% CPU usage per core

nvd0 WRITE: bw=5988MiB/s (6278MB/s), 5988MiB/s-5988MiB/s (6278MB/s-6278MB/s), io=50.0GiB (53.7GB), run=8551-8551msec

nvd1 WRITE: bw=5989MiB/s (6280MB/s), 5989MiB/s-5989MiB/s (6280MB/s-6280MB/s), io=50.0GiB (53.7GB), run=8549-8549msec

nvd2 WRITE: bw=5467MiB/s (5733MB/s), 5467MiB/s-5467MiB/s (5733MB/s-5733MB/s), io=50.0GiB (53.7GB), run=9365-9365msec

nvd3 WRITE: bw=5553MiB/s (5823MB/s), 5553MiB/s-5553MiB/s (5823MB/s-5823MB/s), io=50.0GiB (53.7GB), run=9220-9220msec
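The script used for the "all 4 at the same time" runs is not shown in the post, so the following is only a guess at what such a wrapper could look like. It starts the sequential write job from above in several directories at once and waits for all of them. The function name, the log file name and the example mount points are hypothetical. The `FIO` variable exists only so that a dry run with `FIO=echo` is possible.

```shell
#!/bin/sh
# Binary to invoke; override with FIO=echo for a dry run.
FIO=${FIO:-fio}

# run_seq_write DIR...: start the same sequential write job in every DIR
# concurrently, writing each job's output to fio-seqwrite.log in that DIR.
run_seq_write() {
    for dir in "$@"; do
        (
            cd "$dir" || exit 1
            "$FIO" --name TESTSeqWrite --eta-newline=5s \
                --filename=fio-tempfile-WSeq.dat --rw=write \
                --size=500m --io_size=50g --blocksize=1024k \
                --fsync=10000 --iodepth=32 --direct=1 --numjobs=1 \
                --runtime=60 --group_reporting > fio-seqwrite.log 2>&1
        ) &
    done
    wait    # block until every background job has finished
}

# Example call with hypothetical mount points, one per single-drive pool:
#   run_seq_write /mnt/test-nvd0 /mnt/test-nvd1 /mnt/test-nvd2 /mnt/test-nvd3
```

Running each job in its own subshell keeps the `cd` local to that job, and `wait` makes sure the logs are complete before they are read.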

### Random 4K read QD1 (this is the number that really matters for real world performance unless you know better for sure):

Code:

fio --name TESTRandom4kRead --eta-newline=5s --filename=fio-tempfile-RanR4K.dat --rw=randread --size=500m --io_size=50g --blocksize=4k --fsync=1 --iodepth=1 --direct=1 --numjobs=1 --runtime=60 --group_reporting

## Each NVMe one at a time (script run against all 4 at the same time) - 100% CPU usage single core

nvd0 READ: bw=1260MiB/s (1321MB/s), 1260MiB/s-1260MiB/s (1321MB/s-1321MB/s), io=50.0GiB (53.7GB), run=40627-40627msec

nvd1 READ: bw=1230MiB/s (1289MB/s), 1230MiB/s-1230MiB/s (1289MB/s-1289MB/s), io=50.0GiB (53.7GB), run=41639-41639msec

nvd2 READ: bw=1149MiB/s (1205MB/s), 1149MiB/s-1149MiB/s (1205MB/s-1205MB/s), io=50.0GiB (53.7GB), run=44552-44552msec

nvd3 READ: bw=1184MiB/s (1241MB/s), 1184MiB/s-1184MiB/s (1241MB/s-1241MB/s), io=50.0GiB (53.7GB), run=43245-43245msec

## All 4 NVMe tested at the same time - 100% CPU usage single core for each

nvd0 READ: bw=1087MiB/s (1140MB/s), 1087MiB/s-1087MiB/s (1140MB/s-1140MB/s), io=50.0GiB (53.7GB), run=47094-47094msec

nvd1 READ: bw=1088MiB/s (1141MB/s), 1088MiB/s-1088MiB/s (1141MB/s-1141MB/s), io=50.0GiB (53.7GB), run=47048-47048msec

nvd2 READ: bw=1114MiB/s (1168MB/s), 1114MiB/s-1114MiB/s (1168MB/s-1168MB/s), io=50.0GiB (53.7GB), run=45954-45954msec

nvd3 READ: bw=1104MiB/s (1158MB/s), 1104MiB/s-1104MiB/s (1158MB/s-1158MB/s), io=50.0GiB (53.7GB), run=46360-46360msec
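A quick sanity check on the 4K QD1 numbers is to convert bandwidth into IOPS and then into time per I/O. With one job at queue depth 1 only a single request is in flight, so the average time per I/O is roughly 1/IOPS. The helper names below are made up for this sketch.

```shell
#!/bin/sh
# Convert bandwidth in MiB/s to IOPS for a block size in KiB (default 4).
bw_to_iops() {
    awk -v mib="$1" -v bs_kib="${2:-4}" 'BEGIN {
        printf "%.0f\n", mib * 1024 / bs_kib
    }'
}

# At queue depth 1, average time per I/O in microseconds is 1e6 / IOPS.
iops_to_latency_us() {
    awk -v iops="$1" 'BEGIN { printf "%.1f\n", 1e6 / iops }'
}

iops=$(bw_to_iops 1260)        # nvd0: 1260 MiB/s at 4 KiB blocks
echo "$iops"                   # 322560
iops_to_latency_us "$iops"     # 3.1
```

About 3 microseconds per 4K read is well below what flash media normally delivers for a random read, so these figures may reflect reads served from memory rather than from the drive. Again, that is an inference, not something the post confirms.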

### Mixed random 4K read and write QD1 with sync (this is worst case performance you should ever expect from your drive, usually less than 1% of the numbers listed in the spec sheet):

Code:

fio --name TESTRandom4kRW --eta-newline=5s --filename=fio-tempfile-RanRW4k.dat --rw=randrw --size=500m --io_size=10g --blocksize=4k --fsync=1 --iodepth=1 --direct=1 --numjobs=1 --runtime=60 --group_reporting

## Each NVMe one at a time - 15% or lower CPU usage

nvd0 READ: bw=13.3MiB/s (13.9MB/s), 13.3MiB/s-13.3MiB/s (13.9MB/s-13.9MB/s), io=796MiB (835MB), run=60001-60001msec

WRITE: bw=13.2MiB/s (13.8MB/s), 13.2MiB/s-13.2MiB/s (13.8MB/s-13.8MB/s), io=792MiB (831MB), run=60001-60001msec

nvd1 READ: bw=13.5MiB/s (14.2MB/s), 13.5MiB/s-13.5MiB/s (14.2MB/s-14.2MB/s), io=810MiB (849MB), run=60000-60000msec

WRITE: bw=13.4MiB/s (14.1MB/s), 13.4MiB/s-13.4MiB/s (14.1MB/s-14.1MB/s), io=806MiB (845MB), run=60000-60000msec

nvd2 READ: bw=1800KiB/s (1843kB/s), 1800KiB/s-1800KiB/s (1843kB/s-1843kB/s), io=105MiB (111MB), run=60001-60001msec

WRITE: bw=1798KiB/s (1841kB/s), 1798KiB/s-1798KiB/s (1841kB/s-1841kB/s), io=105MiB (110MB), run=60001-60001msec

nvd3 READ: bw=1693KiB/s (1734kB/s), 1693KiB/s-1693KiB/s (1734kB/s-1734kB/s), io=99.2MiB (104MB), run=60002-60002msec

WRITE: bw=1691KiB/s (1731kB/s), 1691KiB/s-1691KiB/s (1731kB/s-1731kB/s), io=99.1MiB (104MB), run=60002-60002msec

## All 4 NVMe tested at the same time (script run against all 4 at the same time) - 15% or lower CPU usage

nvd0 READ: bw=13.3MiB/s (14.0MB/s), 13.3MiB/s-13.3MiB/s (14.0MB/s-14.0MB/s), io=800MiB (838MB), run=60001-60001msec

WRITE: bw=13.3MiB/s (13.9MB/s), 13.3MiB/s-13.3MiB/s (13.9MB/s-13.9MB/s), io=796MiB (834MB), run=60001-60001msec

nvd1 READ: bw=13.8MiB/s (14.4MB/s), 13.8MiB/s-13.8MiB/s (14.4MB/s-14.4MB/s), io=825MiB (866MB), run=60001-60001msec

WRITE: bw=13.7MiB/s (14.4MB/s), 13.7MiB/s-13.7MiB/s (14.4MB/s-14.4MB/s), io=822MiB (862MB), run=60001-60001msec

nvd2 READ: bw=1681KiB/s (1721kB/s), 1681KiB/s-1681KiB/s (1721kB/s-1721kB/s), io=98.5MiB (103MB), run=60002-60002msec

WRITE: bw=1678KiB/s (1719kB/s), 1678KiB/s-1678KiB/s (1719kB/s-1719kB/s), io=98.3MiB (103MB), run=60002-60002msec

nvd3 READ: bw=1765KiB/s (1808kB/s), 1765KiB/s-1765KiB/s (1808kB/s-1808kB/s), io=103MiB (108MB), run=60001-60001msec

WRITE: bw=1764KiB/s (1807kB/s), 1764KiB/s-1764KiB/s (1807kB/s-1807kB/s), io=103MiB (108MB), run=60001-60001msec

Now, when I created a pool with all 4 drives, I seem to be capped at a single drive's performance for some tests.

VMs Pool - 4 NVMe Drives - 2 VDEVs mirrored

Code:

### Sequential READ speed with big blocks QD32 (this should be near the number you see in the specifications for your drive):

fio --name TESTSeqRead --eta-newline=5s --filename=fio-tempfile-RSeq1.dat --rw=read --size=500m --io_size=50g --blocksize=1024k --fsync=10000 --iodepth=32 --direct=1 --numjobs=1 --runtime=60 --group_reporting

READ: bw=7407MiB/s (7767MB/s), 7407MiB/s-7407MiB/s (7767MB/s-7767MB/s), io=50.0GiB (53.7GB), run=6912-6912msec

### Sequential WRITE speed with big blocks QD32 (this should be near the number you see in the specifications for your drive):

fio --name TESTSeqWrite --eta-newline=5s --filename=fio-tempfile-WSeq.dat --rw=write --size=500m --io_size=50g --blocksize=1024k --fsync=10000 --iodepth=32 --direct=1 --numjobs=1 --runtime=60 --group_reporting

WRITE: bw=6207MiB/s (6508MB/s), 6207MiB/s-6207MiB/s (6508MB/s-6508MB/s), io=50.0GiB (53.7GB), run=8249-8249msec

### Random 4K read QD1 (this is the number that really matters for real world performance unless you know better for sure):

fio --name TESTRandom4kRead --eta-newline=5s --filename=fio-tempfile-RanR4K.dat --rw=randread --size=500m --io_size=50g --blocksize=4k --fsync=1 --iodepth=1 --direct=1 --numjobs=1 --runtime=60 --group_reporting

READ: bw=1157MiB/s (1213MB/s), 1157MiB/s-1157MiB/s (1213MB/s-1213MB/s), io=50.0GiB (53.7GB), run=44244-44244msec
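To put a number on "capped to a single drive", the sketch below divides each pool result by the matching single-drive result for nvd0 (both in MB/s, taken from the fio output in this post). The `scaling` helper is a made-up name.

```shell
#!/bin/sh
# Ratio of pool throughput to single-drive throughput, to two decimals.
scaling() {
    awk -v pool="$1" -v single="$2" 'BEGIN { printf "%.2f\n", pool / single }'
}

scaling 7767 7518    # sequential read:  pool vs nvd0 -> 1.03
scaling 6508 6780    # sequential write: pool vs nvd0 -> 0.96
scaling 1213 1321    # random 4K read:   pool vs nvd0 -> 0.92
```

All three ratios sit close to 1.0, so the four-drive pool is not scaling in these single-job tests. Since the single-drive runs already showed one core at 100%, one possibility is that a `--numjobs=1` run is limited by the CPU or by wherever the data is actually being served from, not by the drives. That is an assumption and would need a test with more jobs and a larger file to confirm.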
